Deploy LLMs from the Hugging Face Hub¶

Premium

Deploying LLMs from the Hugging Face Hub requires access to premium features for GenAI experimentation and GPU inference. Contact your DataRobot representative or administrator for information on enabling the required features.

Use the workshop to create and deploy popular open source LLMs from the Hugging Face Hub, securing your AI apps with enterprise-grade GenAI observability and governance in DataRobot. The new [GenAI] vLLM Inference Server execution environment and vLLM Inference Server Text Generation Template provide out-the-box integration with the GenAI monitoring capabilities and Bolt-on Governance API provided by DataRobot.

This infrastructure uses the vLLM library, an open source framework for LLM inference and serving, to integrate with Hugging Face libraries to seamlessly download and load popular open source LLMs from Hugging Face Hub. To get started, customize the text generation model template. It uses Llama-3.1-8b LLM by default; however, you can change the selected model by modifying the engine_config.json file to specify the name of the OSS model you would like to use.

Prerequisites¶

Before you begin assembling a Hugging Face LLM in DataRobot, obtain the following resources:

-

Accessing gated models requires a Hugging Face account and Hugging Face access token with

READpermissions. In addition to the Hugging Face access token, request model access permissions from the model author.

Create a text generation custom model¶

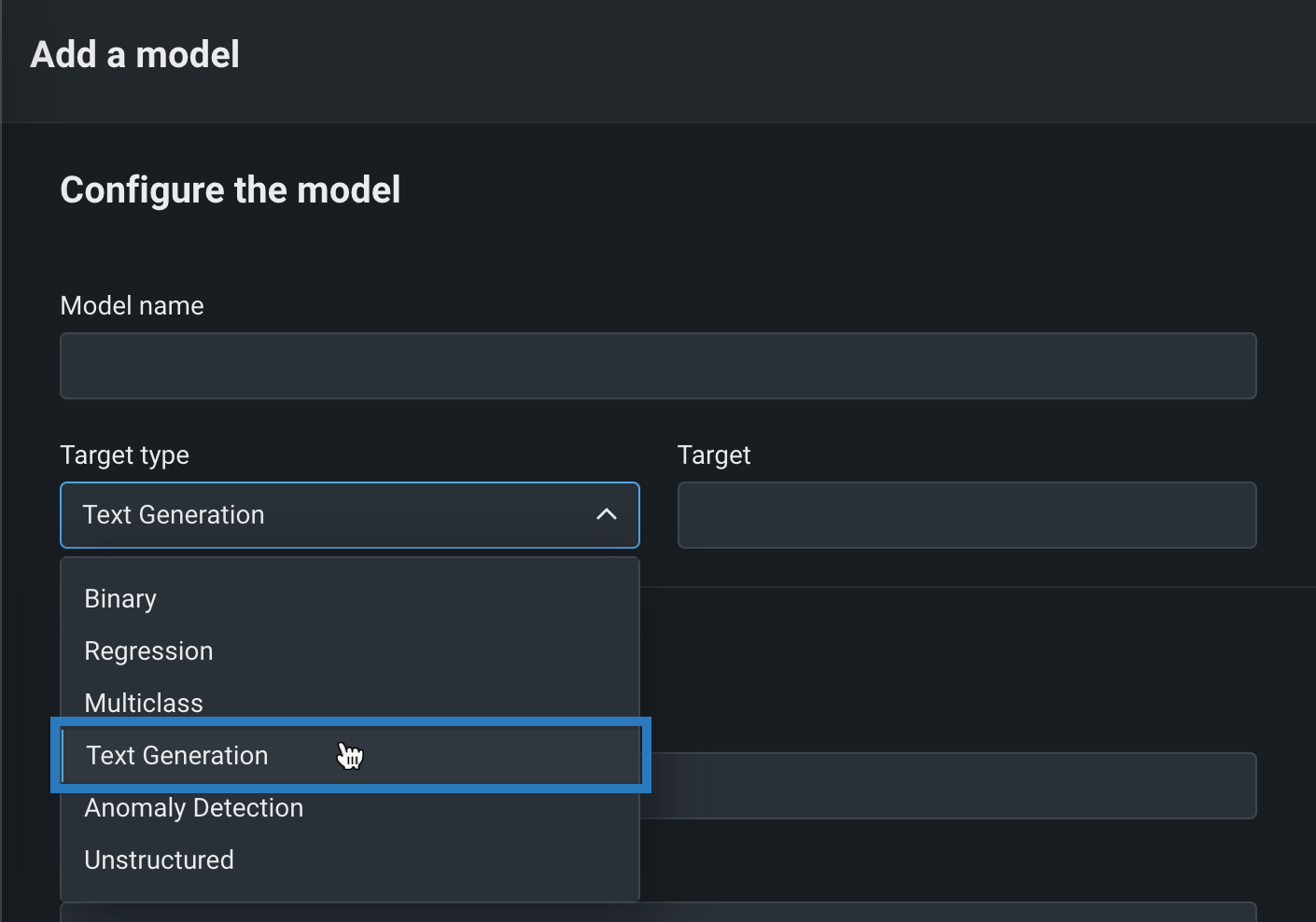

To create a custom LLM, in the workshop, create a new custom text generation model. Set the Target type to Text Generation and define the Target.

Before uploading the custom LLM's required files, select the GenAI vLLM Inference Server from the Base environment list. The model environment is used for testing the custom model and deploying the registered custom model.

What if the required environment isn't available?

If the GenAI vLLM Inference Server environment isn't available in the Base environment list, you can add a custom environment using the resources provided in the DRUM repository's public_dropin_gpu_environments/vllm directory.

Assemble the custom LLM¶

To assemble the custom LLM, use the custom model files provided in the DRUM repository's model_templates/gpu_vllm_textgen directory. You can add the model files manually, or pull them from the DRUM repository.

After adding the model files, you can select the Hugging Face model to load. By default, this text generation example uses the Llama-3.1-8b model. To change the selected model, click edit next to the engine_config.json file to modify the --model argument.

{

"args": [

"--model", "meta-llama/Llama-3.1-8B-Instruct"

]

}

Configure the required runtime parameters¶

After the custom LLM's files are assembled, configure the runtime parameters defined in the custom model's model-metadata.yaml file. The following runtime parameters are Not set and require configuration:

| Runtime parameter | Description |

|---|---|

HuggingFaceToken |

A DataRobot API Token credential, created on the Credentials Management page, containing a Hugging Face access token with at least READ permission. |

system_prompt |

A "universal" prompt prepended to all individual prompts for the custom LLM. It instructs and formats the LLM response. The system prompt can impact the structure, tone, format, and content that is created during the generation of the response. |

In addition, you can update the default values of the following runtime parameters.

| Runtime parameter | Description |

|---|---|

max_tokens |

The maximum number of tokens that can be generated in the chat completion. This value can be used to control costs for text generated via the API. |

max_model_len |

The model context length. If unspecified, this parameter is automatically derived from the model configuration. |

prompt_column_name |

The name of the input column containing the LLM prompt. |

gpu_memory_utilization |

The fraction of GPU memory to use for the model executor, which can range from 0 to 1. For example, a value of 0.5 indicates 50% GPU memory utilization. If unspecified, uses the default value of 0.9. |

Advanced configuration

For more in-depth information on the runtime parameters and configuration options available for the [GenAI] vLLM Inference Server execution environment, see the environment README.

Configure the custom model resources¶

In the custom model resources, select an appropriate GPU bundle (at least GPU - L / gpu.large):

To estimate the required resource bundle, use the following formula:

Where each variable represents the following:

| Variable | Description |

|---|---|

| \(M\) | The GPU memory required in gigabytes (GB). |

| \(P\) | The number of parameters in the model (e.g., Llama-3.1-8b has 8 billion parameters). |

| \(Q\) | The number of bits used to load the model (e.g., 4, 8, or 16). |

Learn more about manual calculation

For more information, see Calculating GPU memory for serving LLMs.

Calculate automatically in Hugging Face

You can also calculate GPU memory requirements in Hugging Face.

For our example model, Llama-3.1-8b, this evaluates to the following:

Therefore, our model requires 19.2 GB of memory, indicating that you should select the GPU - L bundle (1 x NVIDIA A10G | 24GB VRAM | 8 CPU | 32GB RAM).

Deploy and run the custom LLM¶

After you assemble and configure the custom LLM, do the following:

-

Test the custom model (for text generation models, the testing plan only includes the startup and prediction error tests).

Use the Bolt-on Governance API with the deployed LLM¶

Because the resulting LLM deployment is using DRUM, by default, you can use the Bolt-on Governance API which implements the OpenAI chat completion API specification using the OpenAI Python API library. For more information, see the OpenAI Python API library documentation.

On the lines highlighted in the code snippet below, the {DATAROBOT_DEPLOYMENT_ID} and {DATAROBOT_API_KEY} placeholders should be replaced with the LLM deployment's ID and your DataRobot API key.

| Call the Chat API for the deployment | |

|---|---|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 | |

Test locally with Docker¶

To test the custom model locally with Docker, you can use the following commands:

export HF_TOKEN=<INSERT HUGGINGFACE TOKEN HERE>

cd ~/datarobot-user-models/public_dropin_gpu_environments/vllm

cp ~/datarobot-user-models/model_templates/gpu_vllm_textgen/* .

docker build -t vllm .

docker run -p8080:8080 \

--gpus 'all' \

--net=host \

--shm-size=8GB \

-e DATAROBOT_ENDPOINT=https://app.datarobot.com/api/v2 \

-e DATAROBOT_API_TOKEN=${DATAROBOT_API_TOKEN} \

-e MLOPS_DEPLOYMENT_ID=${DATAROBOT_DEPLOYMENT_ID} \

-e TARGET_TYPE=textgeneration \

-e TARGET_NAME=completions \

-e MLOPS_RUNTIME_PARAM_HuggingFaceToken="{\"type\": \"credential\", \"payload\": {\"credentialType\": \"api_token\", \"apiToken\": \"${HF_TOKEN}\"}}" \

vllm

Using multiple GPUs

The --shm-size argument is only needed if you are trying to utilize multiple GPUs to run your LLM.