Set up custom metrics monitoring

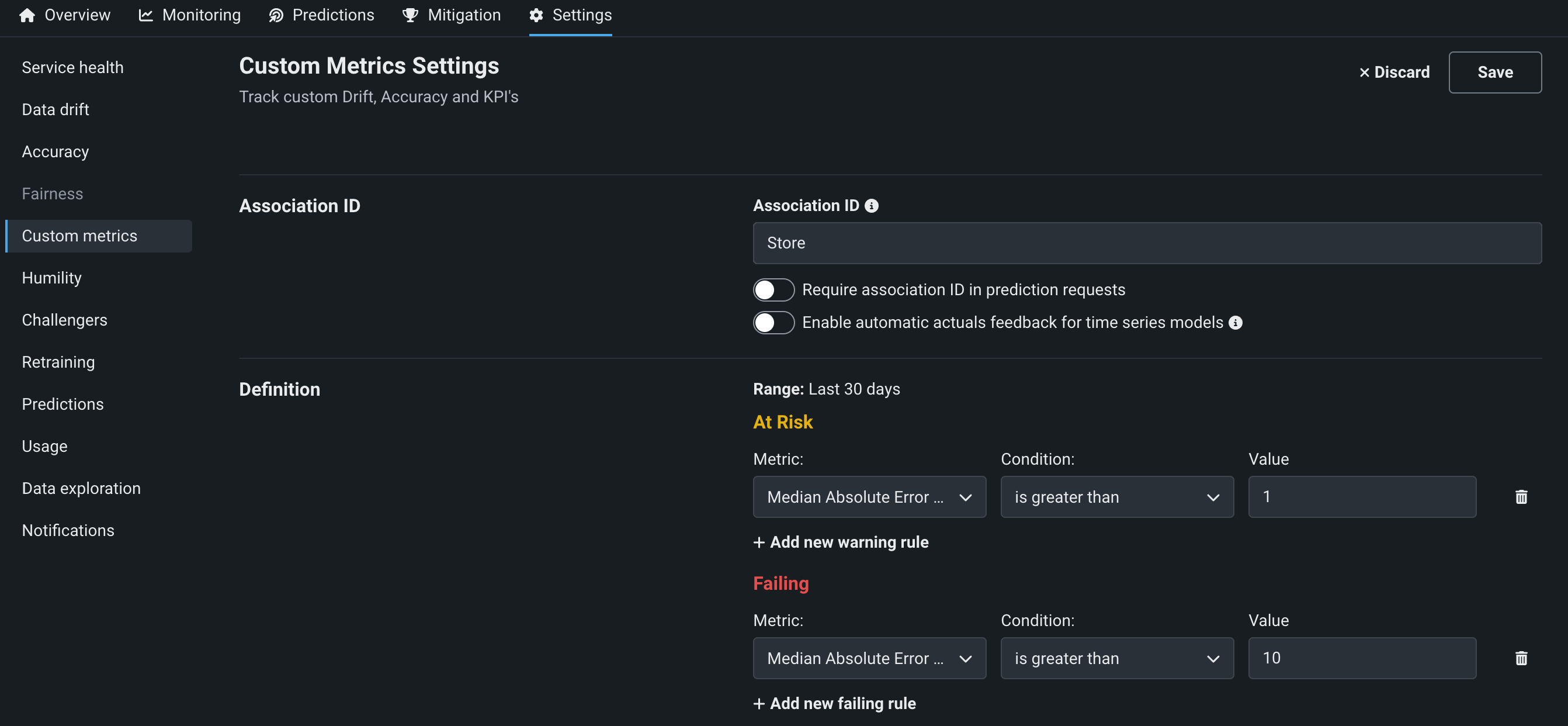

On a deployment's Settings > Custom metrics tab, you can define "at risk" and "failing" thresholds to monitor the status of the custom metrics you created on the Custom metrics tab.

On a deployment's Custom Metrics Settings page, you can configure the following settings:

| Field | Description |

|---|---|

| Association ID | |

| Association ID | Defines the name of the column that contains the association ID in the prediction dataset for your model. Association IDs function as an identifier for your prediction dataset so you can later match up outcome data (also called "actuals") with those predictions. |

| Require association ID in prediction requests | Requires your prediction dataset to have a column name that matches the name you entered in the Association ID field. When enabled, you will get an error if the column is missing. This cannot be enabled with Enable automatic association ID generation for prediction rows. |

| Enable automatic association ID generation for prediction rows | With an association ID column name defined, allows DataRobot to automatically populate the association ID values. This cannot be enabled with Require association ID in prediction requests. |

| Enable automatic actuals feedback for time series models | For time series deployments that have indicated an association ID. Enables the automatic submission of actuals, so that you do not need to submit them manually via the UI or API. Once enabled, actuals can be extracted from the data used to generate predictions. As each prediction request is sent, DataRobot can extract an actual value for a given date. This is because when you send prediction rows to forecast, historical data is included. This historical data serves as the actual values for the previous prediction request. |

| Segmented analysis | |

| Track attributes for segmented analysis of training data and predictions | Enables DataRobot to monitor deployment predictions by segment, for example by categorical features. For more information, see the segmented analysis settings documentation. |

| Definition | |

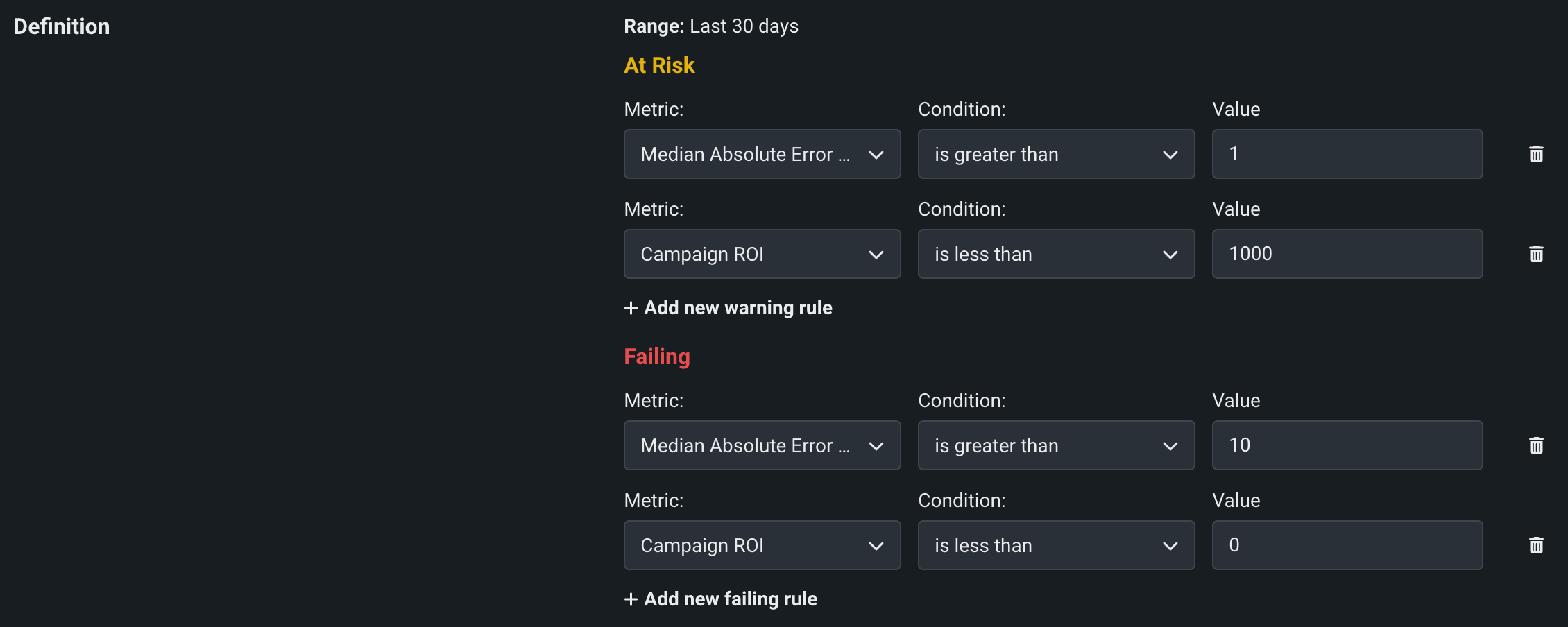

| At Risk / Failing | Enables DataRobot to apply logical statements to calculated custom metric values. You can define threshold statements for a custom metric to categorize the deployment as "at risk" or "failing" if either statement evaluates to true. |
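To illustrate how an association ID ties actuals back to earlier predictions, and how the historical rows included in a time series forecast request could serve as actuals for a previous request, here is a minimal Python sketch. It is not DataRobot's implementation or API: the `association_id` column name, the `"<series>|<date>"` ID format, and the helpers `match_actuals` and `actuals_from_history` are hypothetical.

```python
# Hypothetical sketch, NOT DataRobot's implementation or API.
# Shows how an association ID lets actuals be matched to earlier predictions,
# and how history rows sent with a forecast request could supply those actuals.

ASSOC_ID = "association_id"  # hypothetical association ID column name


def match_actuals(predictions, actuals, id_column=ASSOC_ID):
    """Return (association_id, prediction, actual) for IDs present in both lists."""
    actual_by_id = {row[id_column]: row["actual"] for row in actuals}
    return [
        (row[id_column], row["prediction"], actual_by_id[row[id_column]])
        for row in predictions
        if row[id_column] in actual_by_id
    ]


def actuals_from_history(history_rows, series_column, date_column, target_column):
    """Build actuals from the known-target history rows of a forecast request.

    Assumption for illustration: a forecast row's association ID is
    "<series>|<date>", so a later request's history row for the same series
    and date carries the actual for that earlier prediction.
    """
    return [
        {ASSOC_ID: f"{row[series_column]}|{row[date_column]}",
         "actual": row[target_column]}
        for row in history_rows
        if row.get(target_column) is not None  # skip rows with no known target
    ]


# Request 1 forecast store_a sales for 2024-01-02 and 2024-01-03.
previous_predictions = [
    {ASSOC_ID: "store_a|2024-01-02", "prediction": 105.0},
    {ASSOC_ID: "store_a|2024-01-03", "prediction": 98.0},
]

# Request 2 includes history up to 2024-01-02, whose sales are now known.
request_2_history = [
    {"store": "store_a", "date": "2024-01-01", "sales": 100.0},
    {"store": "store_a", "date": "2024-01-02", "sales": 110.0},
]

actuals = actuals_from_history(request_2_history, "store", "date", "sales")
print(match_actuals(previous_predictions, actuals))
# [('store_a|2024-01-02', 105.0, 110.0)]
```

Keying actuals by association ID in a dictionary keeps matching linear in the number of rows. A prediction without a matching actual (here, 2024-01-03) simply stays unmatched until a later request supplies its value.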

Define custom metric monitoring

Configure thresholds to alert you when a deployed model is "at risk" or "failing" to meet the standards you set for the selected custom metric.

Note

To access the settings in the Definition section, configure and save a metric on the Custom Metrics tab. Only deployment Owners can modify custom metric monitoring settings; however, Users can configure the conditions under which notifications are sent to them. Consumers cannot modify monitoring or notification settings.

You can customize the rules used to calculate the custom metrics status for your deployment on the Custom Metrics Settings page:

- In a deployment you want to monitor custom metrics for, click Settings > Custom metrics.

- In the Definition section, define logical statements for the At Risk and Failing thresholds of any of your custom metrics:

    - For At Risk, click + Add new warning rule.
    - For Failing, click + Add new failing rule.

    Each rule has the following settings:

    | Setting | Description |
    |---|---|
    | Metric | Select the custom metric to add a threshold definition for. |
    | Category | For a categorical custom metric, select the category (i.e., class) in the metric to add a threshold definition for. |
    | Condition | Select one of the following condition statements to set the custom metric's threshold for At Risk or Failing: is less than, is less than or equal to, is greater than, or is greater than or equal to. |
    | Value | Enter a numeric value to set the custom metric's threshold for At Risk or Failing. For example, a statement could be: "The ad campaign is At Risk if the Campaign ROI is less than 10000." |

    Remove a rule

    To remove a rule, click the delete icon next to that rule.

- After adding custom metrics monitoring definitions, click Save.
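The threshold rules configured above (metric, optional category, condition, and value) can be sketched as plain Python to show how such statements might be evaluated against calculated metric values. This is a hypothetical illustration, not DataRobot's implementation or API: the `Rule` class, the `metric_status` function, the `"Passing"` label, and the assumptions that any true rule triggers its status and that Failing takes precedence over At Risk are all assumptions.

```python
# Hypothetical sketch, NOT DataRobot's implementation or API: evaluates
# At Risk / Failing threshold rules against calculated custom metric values.
import operator
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# The four condition statements available for a rule.
CONDITIONS = {
    "is less than": operator.lt,
    "is less than or equal to": operator.le,
    "is greater than": operator.gt,
    "is greater than or equal to": operator.ge,
}

# Metric values keyed by (metric name, category); category is None for
# non-categorical metrics.
MetricValues = Dict[Tuple[str, Optional[str]], float]


@dataclass
class Rule:
    metric: str
    condition: str
    value: float
    category: Optional[str] = None  # set only for categorical custom metrics

    def evaluate(self, values: MetricValues) -> bool:
        """Return True if this rule's statement holds for the current values."""
        key = (self.metric, self.category)
        if key not in values:
            return False  # assumption: a metric with no value never triggers a rule
        return CONDITIONS[self.condition](values[key], self.value)


def metric_status(values: MetricValues, warning_rules: List[Rule],
                  failing_rules: List[Rule]) -> str:
    # Assumptions: any true rule triggers its status, and Failing wins
    # over At Risk when both are triggered.
    if any(rule.evaluate(values) for rule in failing_rules):
        return "Failing"
    if any(rule.evaluate(values) for rule in warning_rules):
        return "At Risk"
    return "Passing"  # hypothetical label for "no rule triggered"


# "The ad campaign is At Risk if the Campaign ROI is less than 10000."
warning = [Rule("Campaign ROI", "is less than", 10000)]
failing = [Rule("Campaign ROI", "is less than", 5000)]  # hypothetical second rule
print(metric_status({("Campaign ROI", None): 8500.0}, warning, failing))  # At Risk
```

Keying values by (metric, category) lets the same rule shape cover both numeric and categorical custom metrics; a categorical rule simply sets `category` to the class it watches.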