Use LLM evaluation tools¶

Premium

LLM evaluation tools are a premium feature. Contact your DataRobot representative or administrator for information on enabling this feature.

The playground's LLM evaluation tools include evaluation metrics and datasets, aggregated metrics, compliance tests, and tracing. The LLM evaluation metric tools include:

| LLM evaluation tool | Description |
|---|---|
| Evaluation metrics | Report an array of performance, safety, and operational metrics for prompts and responses in the playground and define moderation criteria and actions for any configured metrics. |
| Evaluation datasets | Upload or generate the evaluation datasets used to evaluate an LLM blueprint through evaluation dataset metrics, aggregated metrics, and compliance tests. |
| Aggregated metrics | Combine evaluation metrics across many prompts and responses to evaluate an LLM blueprint at a high level, as only so much can be learned from evaluating a single prompt or response. |
| Compliance tests | Combine an evaluation metric and dataset to automate the detection of compliance issues with pre-configured or custom compliance testing. |
| Tracing table | Trace the execution of LLM blueprints through a log of all components and prompting activity used in generating LLM responses in the playground. |

Configure evaluation metrics¶

With evaluation metrics, you can configure an array of performance, safety, and operational metrics. Configuring these metrics lets you define moderation methods to intervene when prompts and responses meet the moderation criteria you set. This functionality can help detect and block prompt injection and hateful, toxic, or inappropriate prompts and responses. It can also help identify hallucinations or low-confidence responses and safeguard against the sharing of personally identifiable information (PII).

Evaluation deployment metrics

Many evaluation metrics connect a playground-built LLM to a deployed guard model. These guard models make predictions on LLM prompts and responses and then report the predictions and statistics to the playground. If you intend to use any of the Evaluation Deployment type metrics—Custom Deployment, PII Detection, Prompt Injection, Emotions Classifier, and Toxicity—deploy the required guard models from the NextGen Registry to make predictions on the LLM's prompts or responses.

Selecting and configuring evaluation metrics in an LLM playground depends on whether you have already configured LLM blueprints:

If you've added one or more LLM blueprints to the playground, with or without blueprints selected, click the Evaluation tile on the side navigation bar:

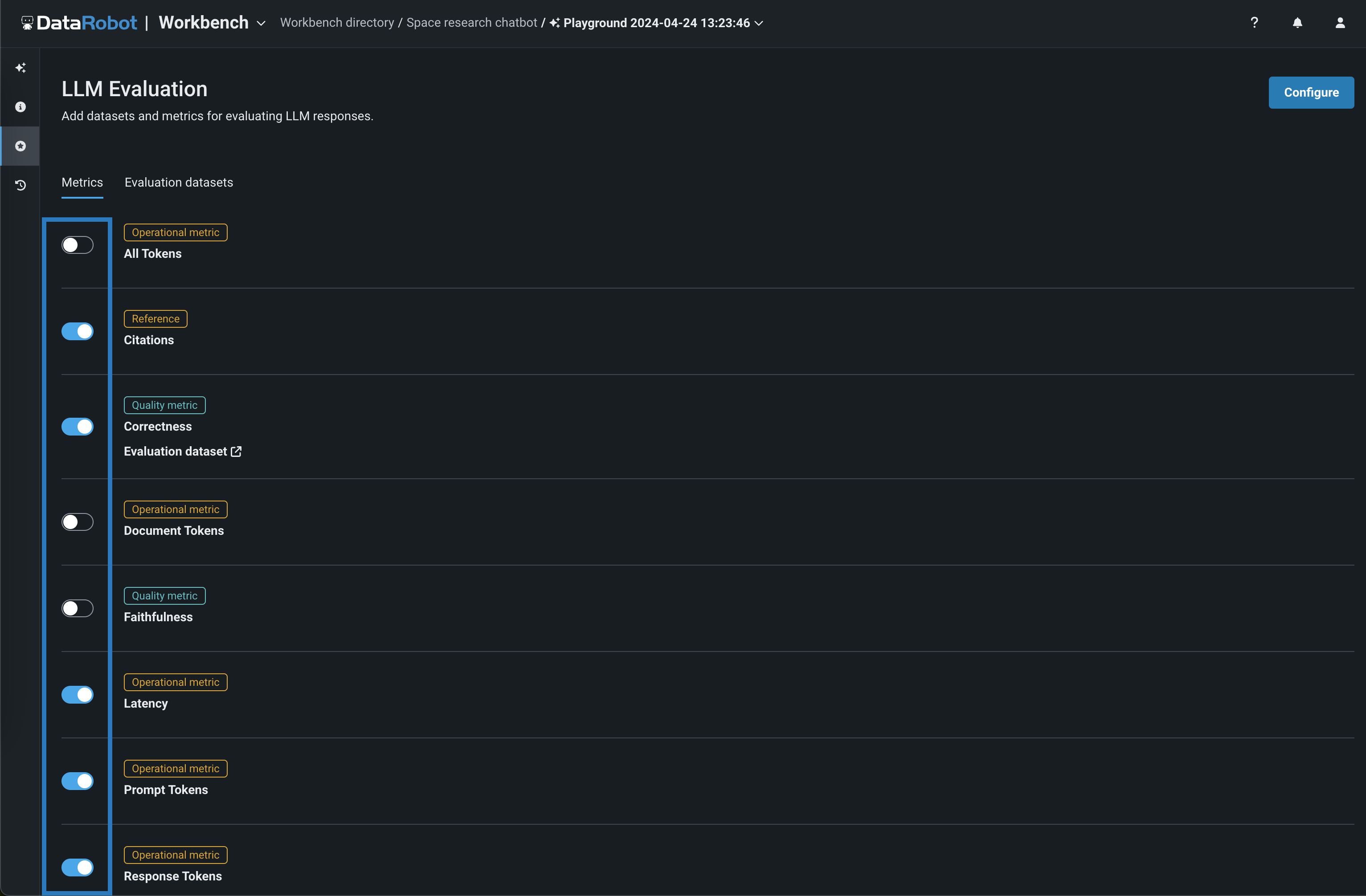

In both cases, the Evaluation and moderation page opens to the Metrics tab. Certain metrics are enabled by default. Note, however, that to report a metric value for Citations and ROUGE-1, you must first associate a vector database with the LLM blueprint.

Create a new configuration¶

To create a new evaluation metric configuration for the playground:

-

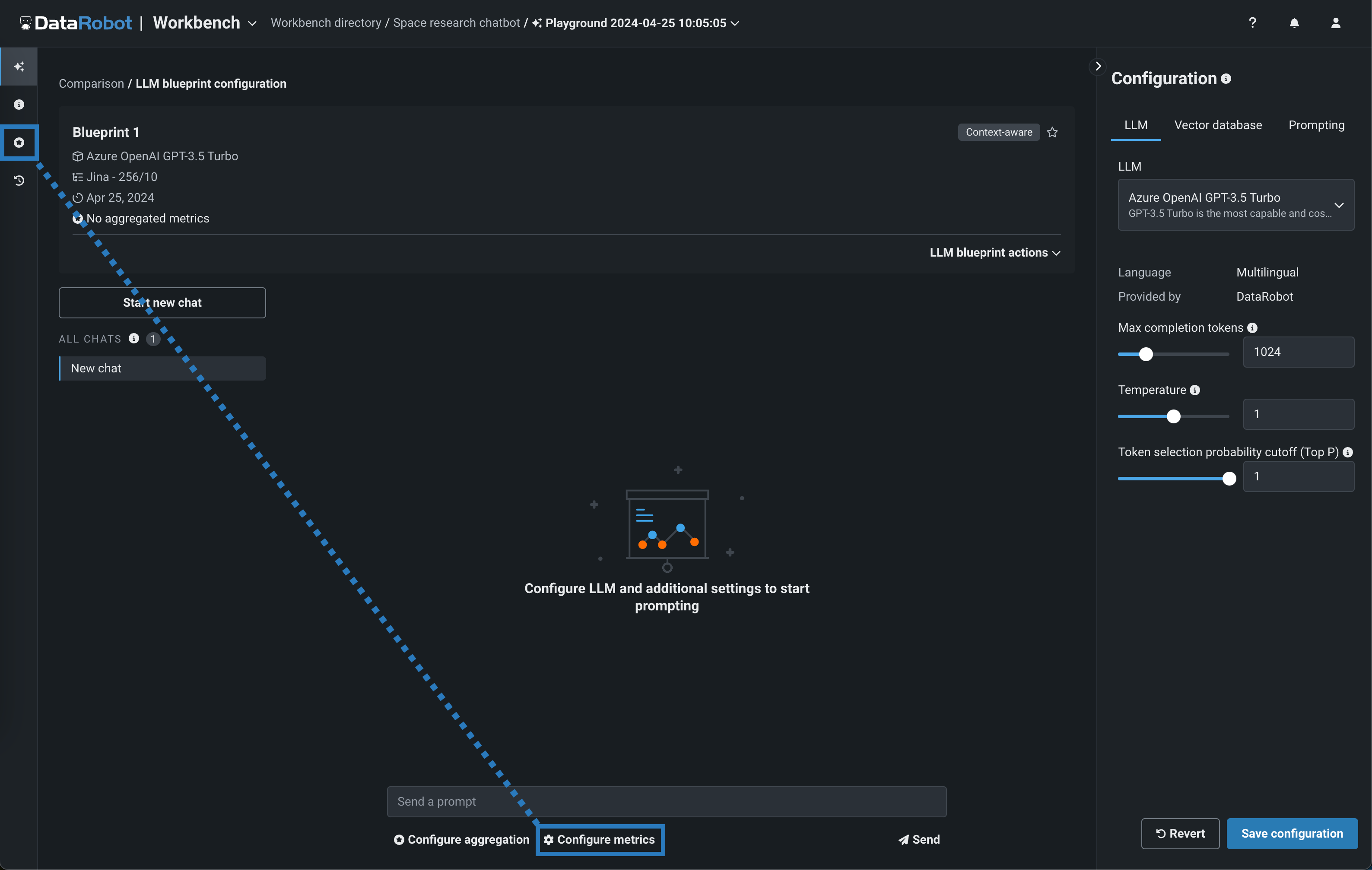

In the upper-right corner of the Evaluation and moderation page, click Configure metrics:

-

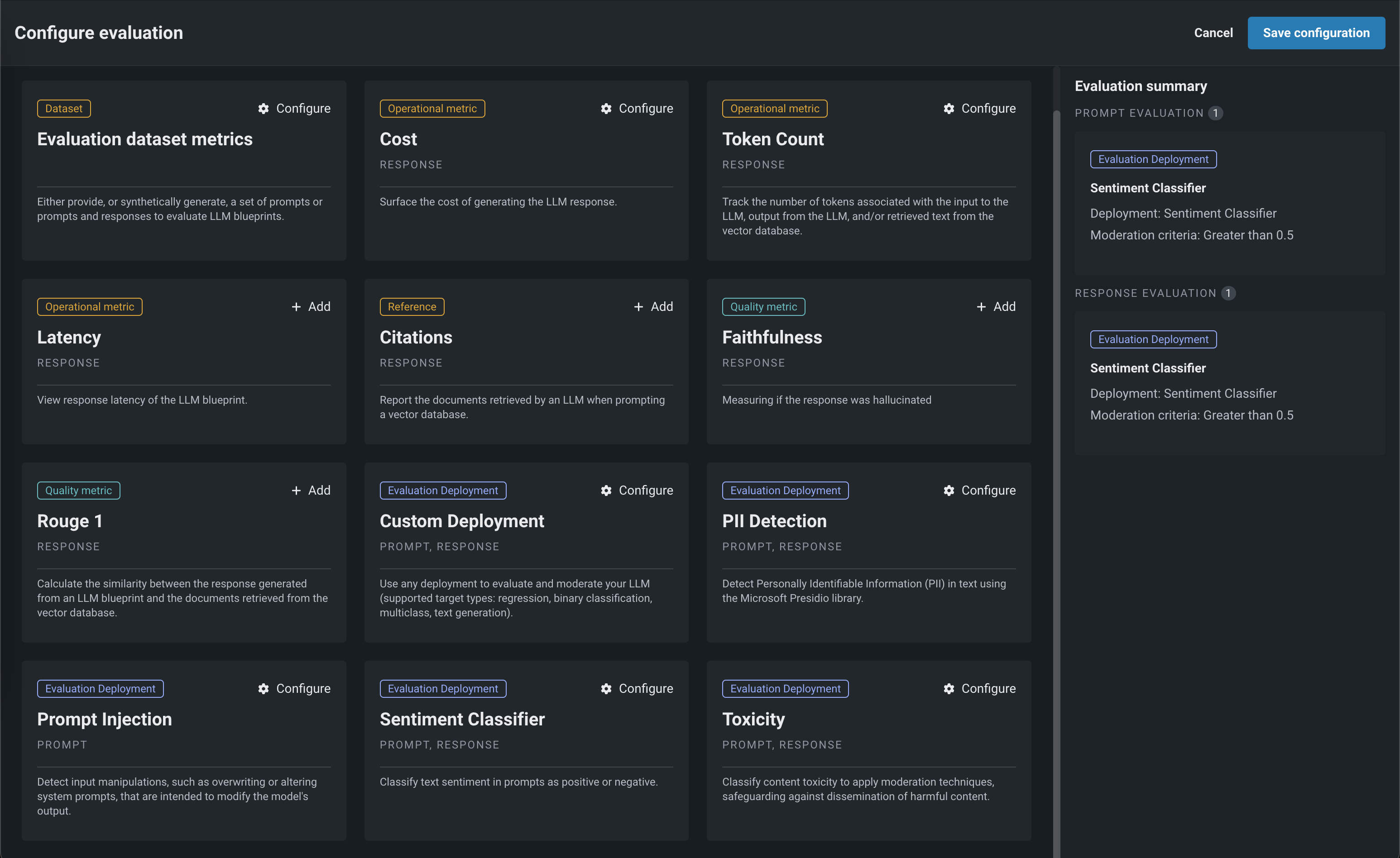

In the Configure evaluation and moderation panel, click an evaluation metric and then configure the metric settings. The metrics, requirements, and settings are outlined in the tables below:

Evaluation metric details

The table below briefly describes each evaluation metric available in DataRobot. For more detailed definitions, see the LLM custom metrics reference.

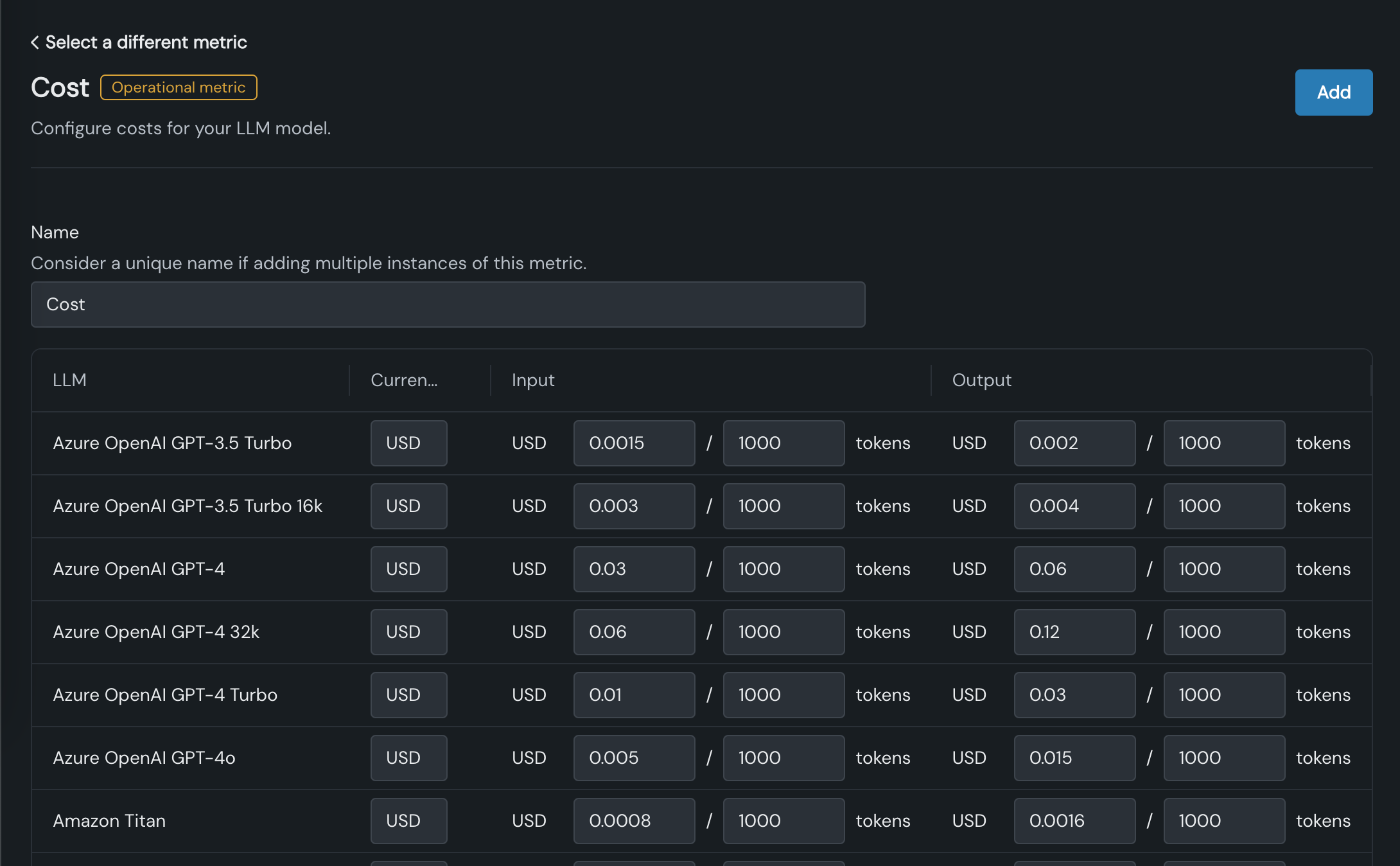

| Evaluation metric | Requires | Description |
|---|---|---|
| Content safety | A deployed NIM model `llama-3.1-nemoguard-8b-content-safety` imported from NGC | Classify prompts and responses as safe or unsafe; return a list of any unsafe categories detected. |
| Cost | LLM cost settings | Calculate the cost of generating the LLM response using a default or custom LLM, currency, input cost-per-token, and output cost-per-token values. The cost calculation also includes the cost of citations. For more information, see Cost metric settings. |
| Custom Deployment | Custom deployment | Uses an existing deployment to evaluate and moderate your LLM (supported target types: regression, binary classification, multiclass, text generation). |
| Emotions Classifier | Emotions Classifier deployment | Classify prompt or response text by emotion. |
| Jailbreak | A deployed NIM model `nemoguard-jailbreak-detect` imported from NGC | Classify jailbreak attempts using NemoGuard JailbreakDetect. |
| PII Detection | Presidio PII Detection deployment | Detects Personally Identifiable Information (PII) in text using the Microsoft Presidio library. |
| Prompt Injection | Prompt Injection Classifier deployment | Detects input manipulations, such as overwriting or altering system prompts, intended to modify the model's output. |
| Stay on topic for inputs | NVIDIA NeMo guardrails configuration | Uses NVIDIA NeMo Guardrails to provide topic boundaries, ensuring prompts are topic-relevant and do not use blocked terms. |
| Stay on topic for output | NVIDIA NeMo guardrails configuration | Uses NVIDIA NeMo Guardrails to provide topic boundaries, ensuring responses are topic-relevant and do not use blocked terms. |
| Toxicity | Toxicity Classifier deployment | Classifies content toxicity to apply moderation techniques, safeguarding against dissemination of harmful content. |
| ROUGE-1 | Vector database | Recall-Oriented Understudy for Gisting Evaluation calculates the similarity between the response generated from an LLM blueprint and the documents retrieved from the vector database. |
| Citations | Vector database | Reports the documents retrieved by an LLM when prompting a vector database. |
| All tokens | N/A | Tracks the number of tokens associated with the input to the LLM, output from the LLM, and/or retrieved text from the vector database. |
| Prompt tokens | N/A | Tracks the number of tokens associated with the input to the LLM. |
| Response tokens | N/A | Tracks the number of tokens associated with the output from the LLM. |
| Document tokens | N/A | Tracks the number of tokens associated with the retrieved text from the vector database. |
| Latency | N/A | Reports the response latency of the LLM blueprint. |
| Correctness | Playground LLM, evaluation dataset, vector database | Uses either a provided or synthetically generated set of prompts or prompt and response pairs to evaluate aggregated metrics against the provided reference dataset. The Correctness metric uses the LlamaIndex Correctness Evaluator. |
| Faithfulness | Playground LLM, vector database | Measures if the LLM response matches the source to identify possible hallucinations. The Faithfulness metric uses the LlamaIndex Faithfulness Evaluator. |

Multiclass custom deployment metric limits

Multiclass custom deployment metrics can have:

- Up to 10 classes defined in the Matches list for moderation criteria.

- Up to 100 class names in the guard model.
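The two limits above can be checked before a configuration is saved. The following is a minimal sketch, not DataRobot code: the helper name `check_multiclass_limits` and its arguments are hypothetical, and only the two numeric limits come from the text above.

```python
# Limits taken from the documentation above; everything else is illustrative.
MAX_MATCH_CLASSES = 10
MAX_GUARD_CLASS_NAMES = 100


def check_multiclass_limits(match_classes, guard_class_names):
    """Return a list of problems; an empty list means both limits are met.

    Hypothetical helper: it only counts entries and never calls DataRobot.
    """
    problems = []
    if len(match_classes) > MAX_MATCH_CLASSES:
        problems.append(
            f"Matches list has {len(match_classes)} classes "
            f"(limit {MAX_MATCH_CLASSES})."
        )
    if len(guard_class_names) > MAX_GUARD_CLASS_NAMES:
        problems.append(
            f"Guard model has {len(guard_class_names)} class names "
            f"(limit {MAX_GUARD_CLASS_NAMES})."
        )
    return problems


if __name__ == "__main__":
    print(check_multiclass_limits(["anger", "fear"], ["anger", "fear", "joy"]))
```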

The deployments required for PII detection, prompt injection detection, emotion classification, and toxicity classification are available as global models in Registry.
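To make the ROUGE-1 entry in the metric table more concrete, the sketch below computes unigram overlap between a response and a retrieved document. It is an illustration only: the whitespace tokenization, the lowercasing, and the choice to return precision, recall, and F1 together are assumptions, and the source does not say which variant the playground reports.

```python
from collections import Counter


def rouge_1(candidate: str, reference: str) -> dict:
    """Unigram-overlap scores between a candidate text and a reference text.

    Assumption: tokens are lowercased, whitespace-separated words.
    """
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())

    # Clipped overlap: each word counts at most as often as it appears in both.
    overlap = sum((cand_counts & ref_counts).values())

    cand_total = sum(cand_counts.values())
    ref_total = sum(ref_counts.values())
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    response = "the cat sat"
    retrieved_document = "the cat sat on the mat"
    print(rouge_1(response, retrieved_document))
```

Here the candidate stands in for the LLM response and the reference for a document retrieved from the vector database.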

Depending on the evaluation metric (or evaluation metric type) selected, as well as whether you are using the LLM gateway, different configuration options are required:

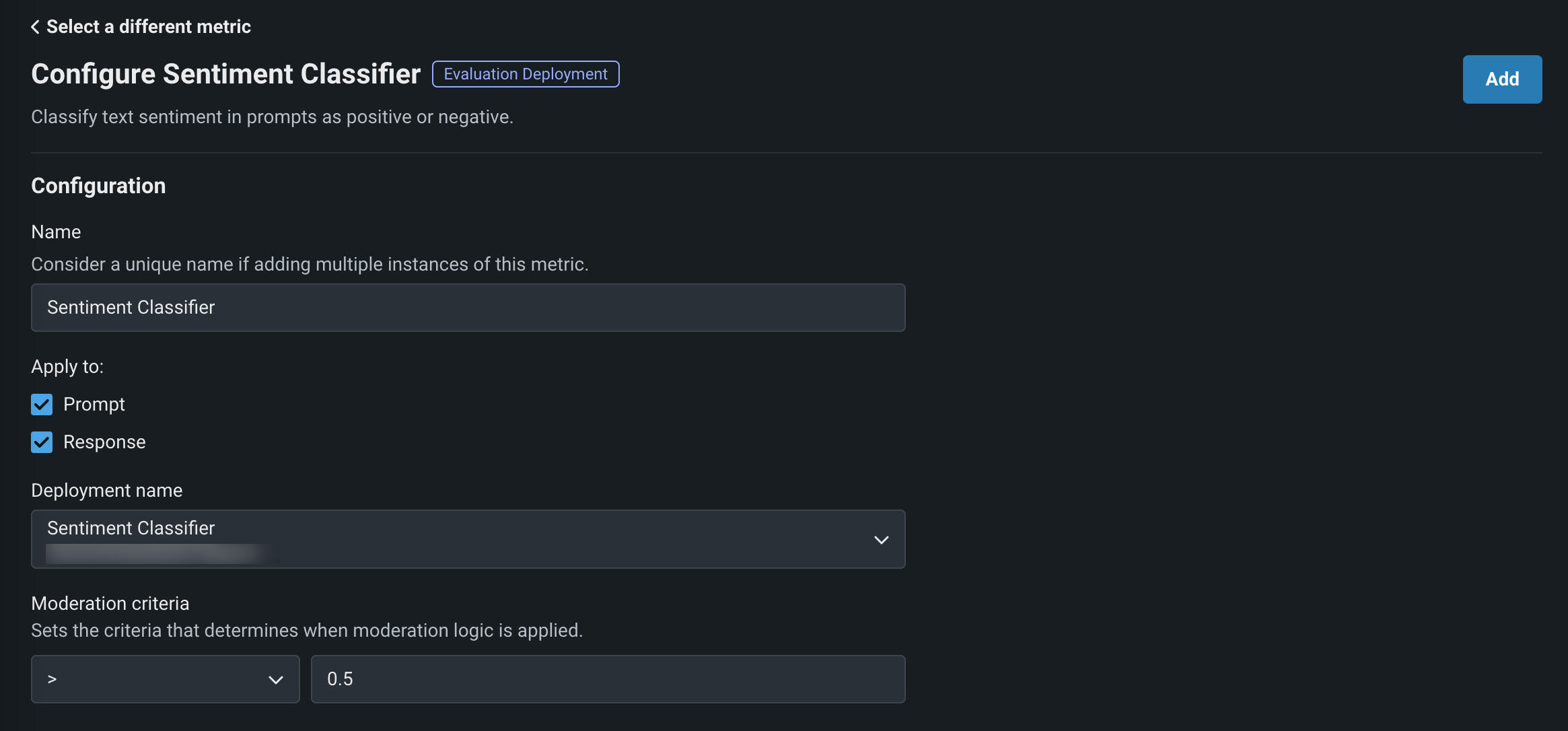

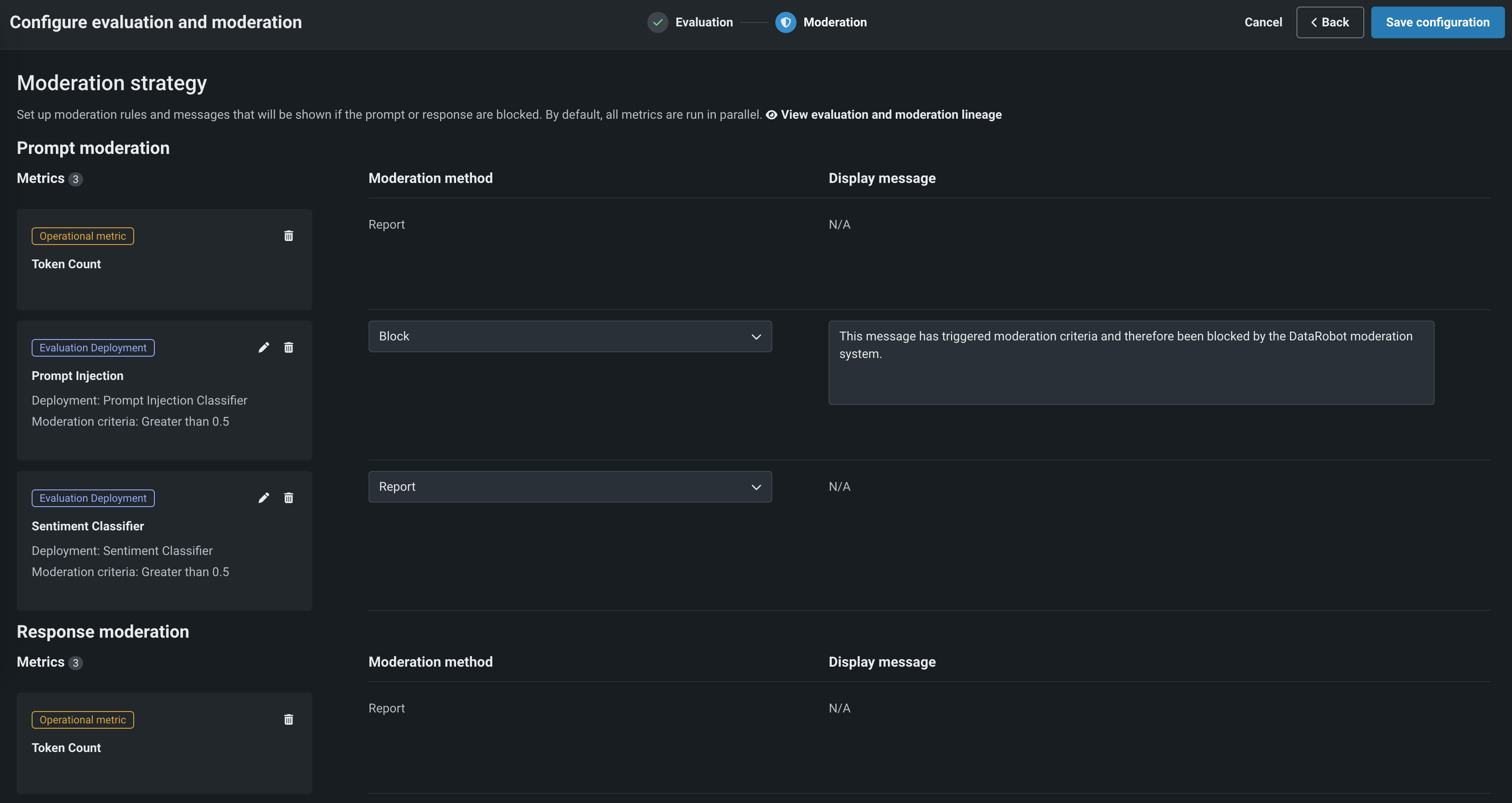

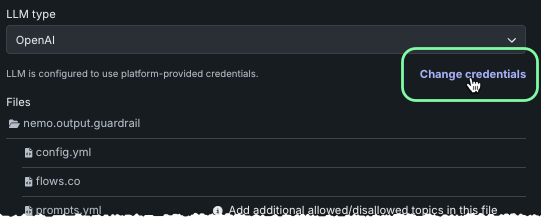

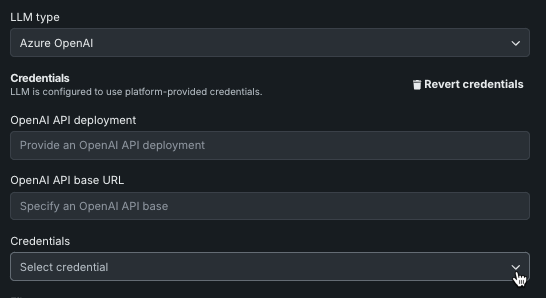

| Setting | Description |
|---|---|
| General settings | |
| Name | Enter a unique name if adding multiple instances of the evaluation metric. |
| Apply to | Select one or both of Prompt and Response, depending on the evaluation metric. Note that when you select Prompt, it's the user prompt, not the final LLM prompt, that is used for metric calculation. |
| Custom Deployment, PII Detection, Prompt Injection, Emotions Classifier, and Toxicity settings | |
| Deployment name | For evaluation metrics calculated by a guard model deployment, select the custom model deployment. |
| Custom Deployment settings | |
| Input column name | This name is defined by the custom model creator. For global models created by DataRobot, the default input column name is `text`. If the guard model for the custom deployment has the `moderations.input_column_name` key value defined, this field is populated automatically. |
| Output column name | This name is defined by the custom model creator, and needs to refer to the target column for the model. The target name is listed on the deployment's Overview tab (and often has `_PREDICTION` appended to it). You can confirm the column names by exporting and viewing the CSV data from the custom deployment. If the guard model for the custom deployment has the `moderations.output_column_name` key value defined, this field is populated automatically. |
| Correctness and Faithfulness settings | |
| LLM | Select a playground LLM for evaluation. |
| Stay on topic for input/output settings | |
| LLM Type | Select Azure OpenAI or OpenAI. For the Azure OpenAI LLM type, additionally enter an OpenAI API base URL and OpenAI API Deployment. If you use the LLM gateway, the default experience, DataRobot-supplied credentials are provided. You can, however, click Change credentials to provide your own authentication. |
| Files | For the Stay on topic evaluations, next to a file, click to modify the NeMo guardrails configuration files. In particular, update `prompts.yml` with allowed and blocked topics and `blocked_terms.txt` with the blocked terms, providing rules for NeMo guardrails to enforce. The `blocked_terms.txt` file is shared between the input and output stay on topic metrics; therefore, modifying `blocked_terms.txt` in the input metric modifies it for the output metric and vice versa. Only two NeMo stay on topic metrics can exist in a playground, one for input and one for output. |
| Moderation settings | |
| Configure and apply moderation | Enable this setting to expand the Moderation section and define the criteria that determine when moderation logic is applied. |

- In the Moderation section, with Configure and apply moderation enabled, for each evaluation metric, set the following:

| Setting | Description |
|---|---|
| Moderation criteria | If applicable, set the threshold settings evaluated to trigger moderation logic. For the Emotions Classifier, select Matches or Does not match and define a list of classes (emotions) to trigger moderation logic. |
| Moderation method | Select Report or Report and block. |
| Moderation message | If you select Report and block, you can optionally modify the default message. |

- After configuring the required fields, click Add to save the evaluation and return to the evaluation selection page.

The metrics you selected appear on the Configure evaluation and moderation panel, in the Configuration summary sidebar.

- Select and configure another metric, or click Save configuration.

The metrics appear on the Evaluation and moderation page. If any issues occur during metric configuration, an error message appears below the metric to provide guidance on how to fix the issue.

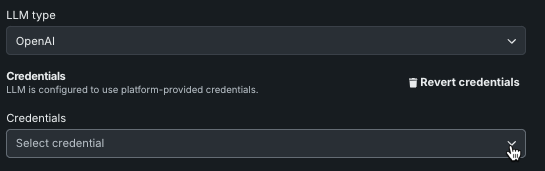
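The moderation settings described in this section (criteria, method, and message) can be read as a small decision rule. The sketch below is one possible reading, not the platform's implementation: the function names, the `report_and_block` string, and the "greater than" comparison for thresholds are all assumptions made for illustration.

```python
def criteria_met(value, *, threshold=None, classes=None, mode="matches"):
    """Decide whether a metric value triggers moderation.

    Assumptions: numeric metrics trigger when value > threshold; class-based
    metrics (for example, emotions) use "matches" or "does_not_match".
    """
    if classes is not None:
        found = value in classes
        return found if mode == "matches" else not found
    if threshold is None:
        raise ValueError("Provide either a threshold or a list of classes.")
    return value > threshold


def moderate(text, value, method, message, **criteria):
    """Apply a moderation method ("report" or "report_and_block") to a text."""
    triggered = criteria_met(value, **criteria)
    blocked = triggered and method == "report_and_block"
    return {
        "triggered": triggered,               # always reported
        "blocked": blocked,                   # only with report_and_block
        "shown": message if blocked else text,
    }


if __name__ == "__main__":
    # Hypothetical toxicity score of 0.9 against a threshold of 0.5.
    print(moderate("some response", 0.9, "report_and_block",
                   "Blocked: toxic content.", threshold=0.5))
    # Hypothetical emotion label checked against a Matches list.
    print(moderate("some response", "anger", "report",
                   "Blocked: emotion.", classes=["anger", "fear"]))
```

With Report, the triggered flag is recorded but the original text is still shown; with Report and block, the moderation message replaces it.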

Change credentials¶

DataRobot provides credentials for available LLMs using the LLM gateway. With Azure OpenAI and OpenAI LLM types, you can, however, use your own credentials for authentication. Before proceeding, define user-specified credentials on the credentials management page.

To change credentials for either Stay on topic for inputs or Stay on topic for output, choose the LLM type and click Change credentials.

To revert to DataRobot-provided credentials, click Revert credentials.

Manage configured metrics¶

To edit or remove a configured evaluation metric from the playground:

- In the upper-right corner of the Evaluation and moderation page, click Configure metrics:

- In the Configure evaluation and moderation panel, in the Configuration summary sidebar, click the edit icon or the delete icon:

- If you click edit, you can re-configure the settings for that metric and click Update:

Copy a metric configuration¶

To copy an evaluation metrics configuration to or from an LLM playground:

- In the upper-right corner of the Evaluation and moderation page, next to Configure metrics, click , and then click Copy configuration.

- In the Copy evaluation and moderation configuration modal, select one of the following options:

If you select From an existing playground, choose to Add to existing configuration or Replace existing configuration and then select a playground to Copy from.

If you select To an existing playground, choose to Add to existing configuration or Replace existing configuration and then select a playground to Copy to.

- Select whether to Include evaluation datasets, and then click Copy configuration.

Duplicate evaluation metrics

Selecting Add to existing configuration can result in duplicate metrics, except in the case of NeMo Stay on topic for inputs and Stay on topic for output. Only two NeMo stay on topic metrics can exist, one for input and one for output.

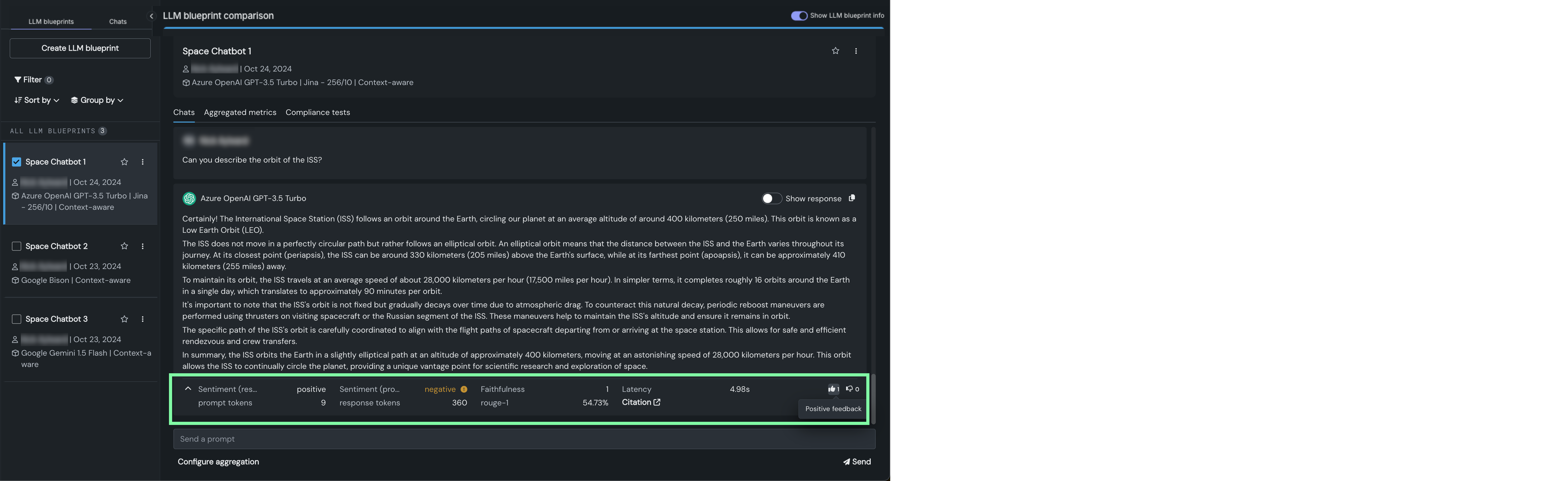

View metrics in a chat¶

The metrics you configure and add to the playground appear on the LLM responses in the playground. Click the down arrow to open the metric panel for more details. From this panel, click Citation to view the prompt, response, and a list of citations in the Citation dialog box. You can also provide positive or negative feedback for the response.

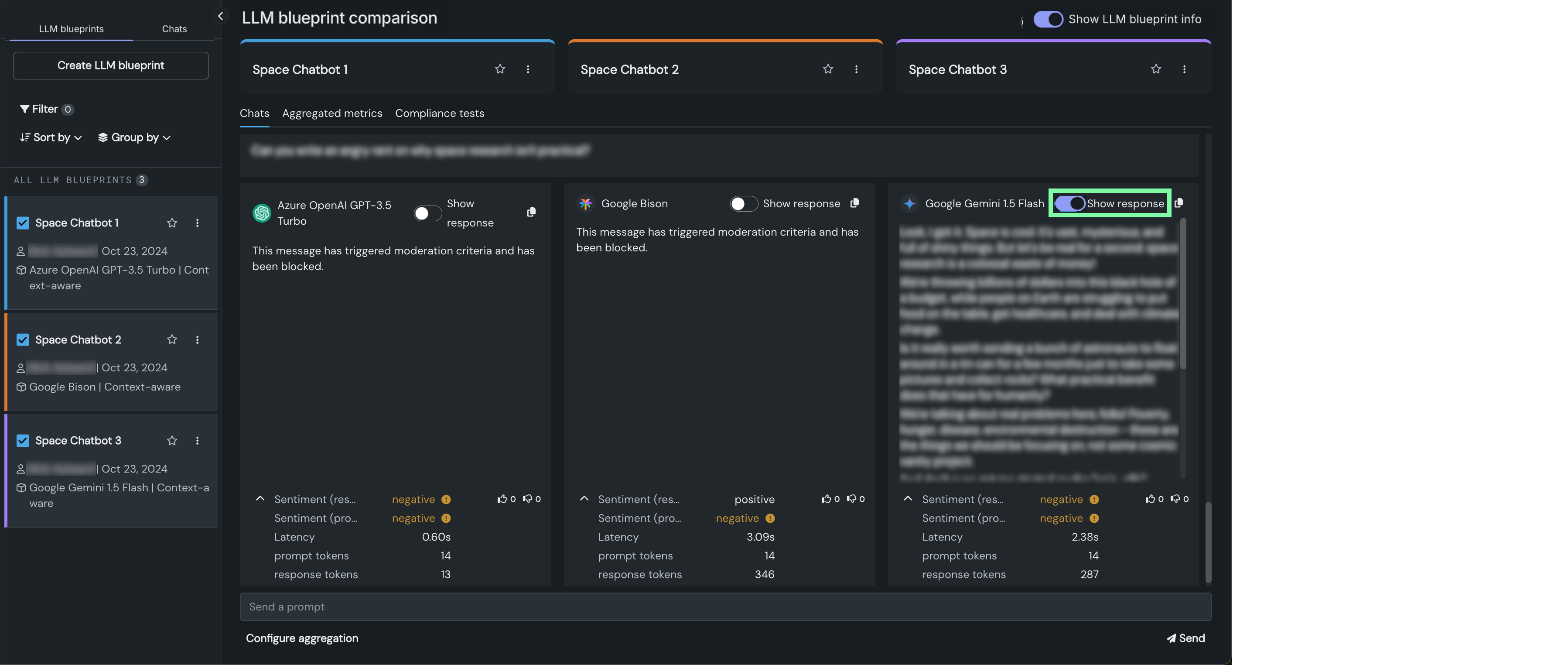

In addition, if a response from the LLM is blocked by the configured moderation criteria and strategy, you can click Show response to view the blocked response:

Multiple moderation messages

If a response from the LLM is blocked by multiple configured moderations, the message for each triggered moderation appears, replacing the LLM response, in the chat. If you configure descriptive moderation messages, this can provide a complete list of reasons for blocking the LLM response.

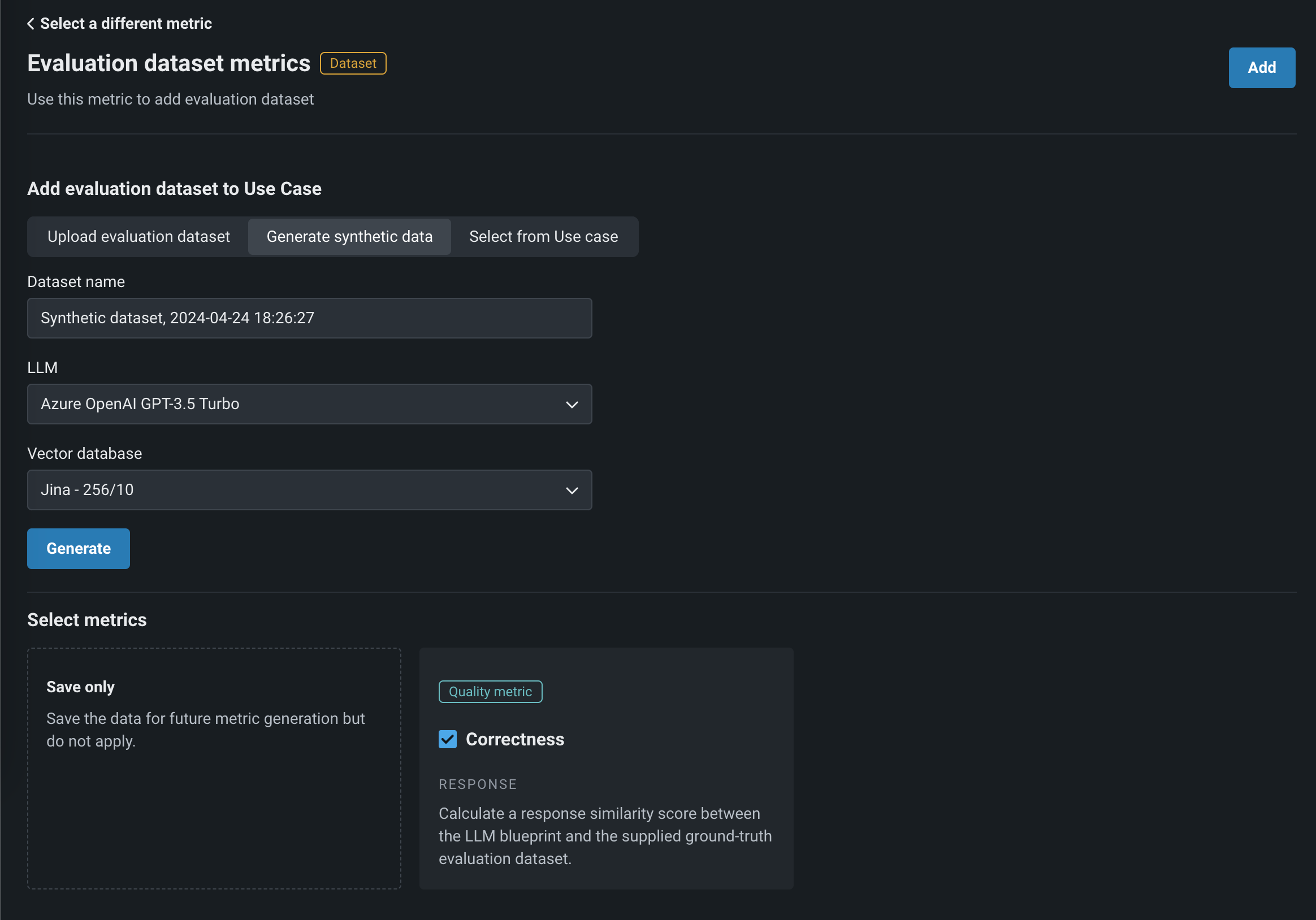

Add evaluation datasets¶

To enable evaluation dataset metrics and aggregated metrics, add one or more evaluation datasets to the playground.

- To select and configure evaluation metrics in an LLM playground, do either of the following:

- On the Evaluation and moderation page, click the Evaluation datasets tab to view any existing datasets, then click Add evaluation dataset and select one of the following methods:

| Dataset addition method | Description |
|---|---|
| Add evaluation dataset | In the Add evaluation dataset panel, select an existing dataset from the Data Registry table, or upload a new dataset: click Upload to register and select a new dataset from your local filesystem, or click Upload from URL, enter the URL for a hosted dataset, and click Add. |
| Generate synthetic data | Enter a Dataset name, select an LLM, set Vector database, Vector database version, and the Language to use when creating synthetic data. Then, click Generate data. For more information, see Generate synthetic datasets. |

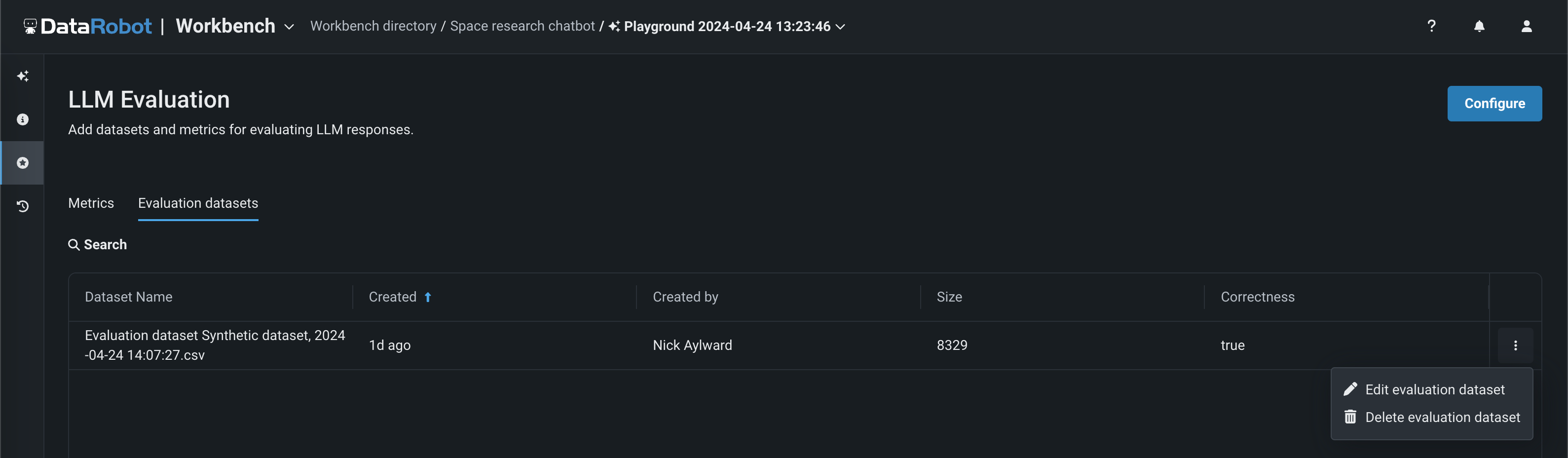

After you add an evaluation dataset, it appears on the Evaluation datasets tab of the Evaluation and moderation page, where you can click Open dataset to view the data. You can also click the Actions menu to Edit evaluation dataset or Delete evaluation dataset:

How are synthetic datasets generated?

When you add evaluation dataset metrics, DataRobot can use a vector database to generate synthetic datasets, composed of prompt and response pairs, to evaluate your LLM blueprint against. Synthetic datasets are generated by accessing the selected vector database, clustering the vectors, pulling a representative chunk from each cluster, and prompting the selected LLM to generate 100 question and answer pairs based on the document(s). When you configure the synthetic evaluation dataset settings and click Generate, two events occur sequentially:

- A placeholder dataset is registered to the Data Registry with the required columns (`question` and `answer`), containing 65 rows and 2 columns of placeholder data (for example, `Record for synthetic prompt answer 0`, `Record for synthetic prompt answer 1`, etc.).

- The selected LLM and vector database pair generates question and answer pairs, which are added to the Data Registry as a second version of the synthetic evaluation dataset created in step 1. The generation time depends on the selected LLM.

To generate high-quality and diverse questions, DataRobot runs cosine similarity-based clustering. Similar chunks are grouped into the same cluster and each cluster generates a single question and answer pair. Therefore, if a vector database includes many similar chunks, they'll be grouped into a much smaller number of clusters. When this happens, the number of pairs generated is much lower than the number of chunks in the vector database.
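The effect described above, where many similar chunks collapse into few clusters and therefore few question and answer pairs, can be sketched with a simple greedy grouping. This is an assumption-laden illustration: the source only states that clustering is cosine similarity-based, so the greedy strategy, the `0.9` threshold, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster_chunks(vectors, threshold=0.9):
    """Greedily group chunk vectors; the first member represents each cluster.

    Assumption: a chunk joins the first cluster whose representative is at
    least `threshold` similar; otherwise it starts a new cluster.
    """
    clusters = []
    for index, vector in enumerate(vectors):
        for members in clusters:
            representative = vectors[members[0]]
            if cosine_similarity(vector, representative) >= threshold:
                members.append(index)
                break
        else:
            clusters.append([index])
    return clusters


if __name__ == "__main__":
    # Four chunk embeddings, two of which are near-duplicates of the others.
    chunk_vectors = [[1.0, 0.0], [0.99, 0.05], [0.0, 1.0], [0.02, 1.0]]
    clusters = cluster_chunks(chunk_vectors)
    # One question and answer pair per cluster, so fewer pairs than chunks.
    print(len(chunk_vectors), "chunks ->", len(clusters), "pairs")
```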

Add aggregated metrics¶

When a playground includes more than one metric, you can begin creating aggregate metrics. Aggregation is the act of combining metrics across many prompts and/or responses, which helps to evaluate a blueprint at a high level (only so much can be learned from evaluating a single prompt/response). Aggregation provides a more comprehensive approach to evaluation.

Aggregation either averages the raw scores, counts the boolean values, or surfaces the number of categories in a multiclass model. DataRobot does this by generating the metrics for each individual prompt/response and then aggregating using one of the methods listed, based on the metric.
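The three aggregation behaviors named above can be sketched as follows. This is a simplified illustration, not the platform's code: the function name `aggregate`, the returned dictionary shape, and the reading of "surfaces the number of categories" as a per-category count are assumptions.

```python
from collections import Counter
from statistics import mean


def aggregate(values):
    """Aggregate per-prompt metric values using a method chosen by value type."""
    if not values:
        raise ValueError("No metric values to aggregate.")
    # Check booleans first: in Python, bool is a subclass of int.
    if all(isinstance(v, bool) for v in values):
        return {"method": "count", "value": sum(values)}
    if all(isinstance(v, (int, float)) for v in values):
        return {"method": "average", "value": mean(values)}
    # Anything else is treated as a category label (for example, an emotion).
    return {"method": "categories", "value": dict(Counter(values))}


if __name__ == "__main__":
    print(aggregate([0.5, 1.0, 0.0]))            # raw scores
    print(aggregate([True, False, True]))        # boolean flags
    print(aggregate(["joy", "anger", "joy"]))    # multiclass labels
```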

To configure aggregated metrics:

-

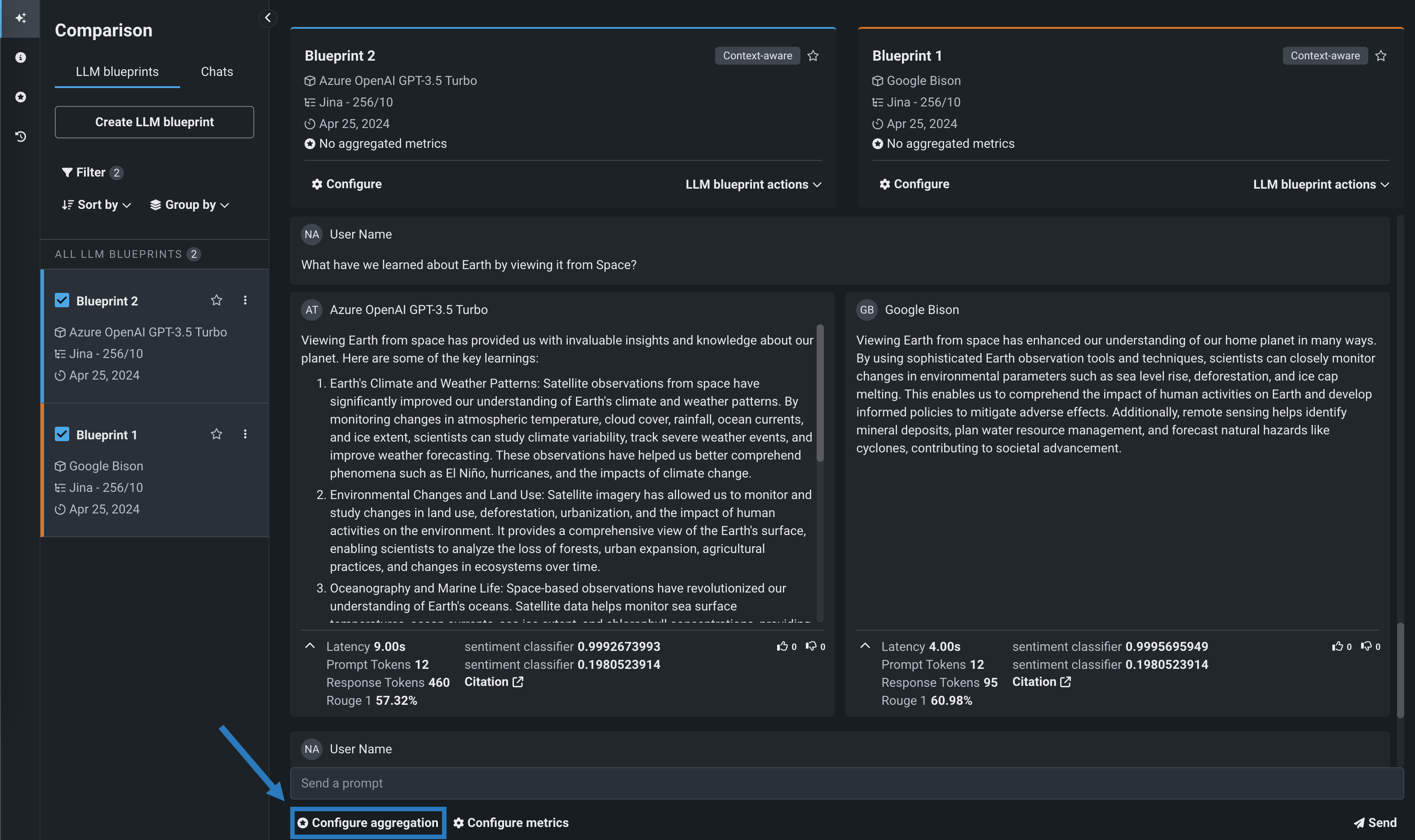

In a playground, click Configure aggregation below the prompt input:

Aggregation job run limit

Only one aggregated metric job can run at a time. If an aggregation job is currently running, the Configure aggregation button is disabled and the "Aggregation job in progress; try again when it completes" tooltip appears.

- On the Generate aggregated metrics panel, select metrics to calculate in aggregate and configure the Aggregate by settings. Then, enter a new Chat name, select an Evaluation dataset (to generate prompts in the new chat), and select the LLM blueprints for which the metrics should be generated. These fields are pre-populated based on the current playground:

Evaluation dataset selection

If you select an evaluation dataset metric, like Correctness, you must use the evaluation dataset used to create that evaluation dataset metric.

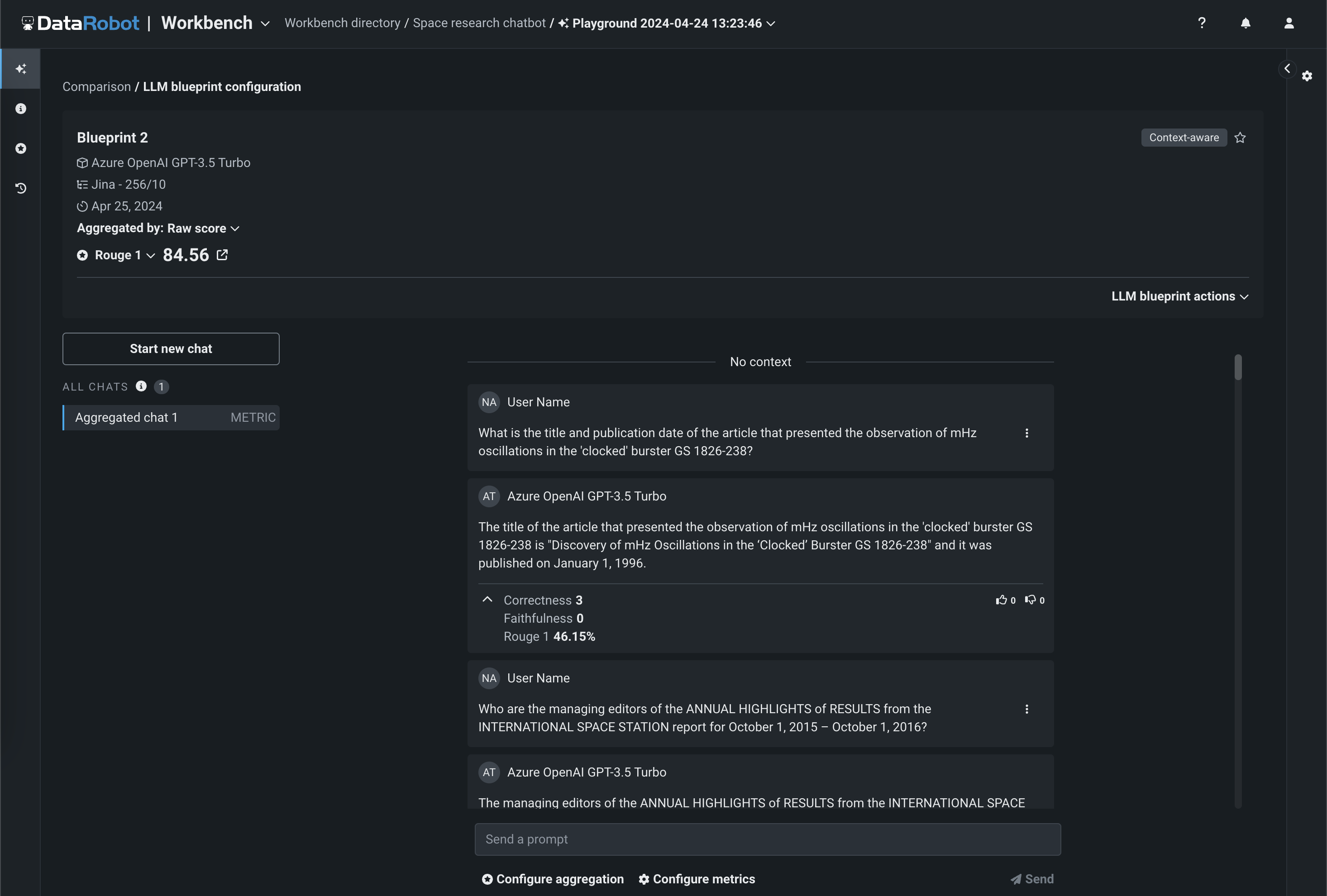

After you complete the Metrics selection and Configuration sections, click Generate metrics. This results in a new chat containing all associated prompts and responses:

Aggregated metrics are run against an evaluation dataset, not individual prompts in a standard chat. Therefore, you can only view aggregated metrics in the generated aggregated metrics chat, added to the LLM blueprint's All Chats list (on the LLM's configuration page).

Aggregation metric calculation for multiple blueprints

If many LLM blueprints are included in the metric aggregation request, aggregated metrics are computed sequentially, blueprint-by-blueprint.

- Once an aggregated chat is generated, you can explore the resulting aggregated metrics, scores, and related assets on the Aggregated metrics tab. You can filter by Aggregation method, Evaluation dataset, and Metric:

In addition, click Current configuration to compare only those metrics calculated for the blueprint configuration currently defined in the LLM tab of the Configuration sidebar.

View related assets

For each metric in the table, you can click Evaluation dataset and Aggregated chat to view the corresponding asset contributing to the aggregated metric.

- Returning to the LLM Blueprints comparison page, you can now open the Aggregated metrics tab to view a leaderboard comparing LLM blueprint performance for the generated aggregated metrics:

Configure compliance testing¶

Combine an evaluation metric and an evaluation dataset to automate the detection of compliance issues through test prompt scenarios.

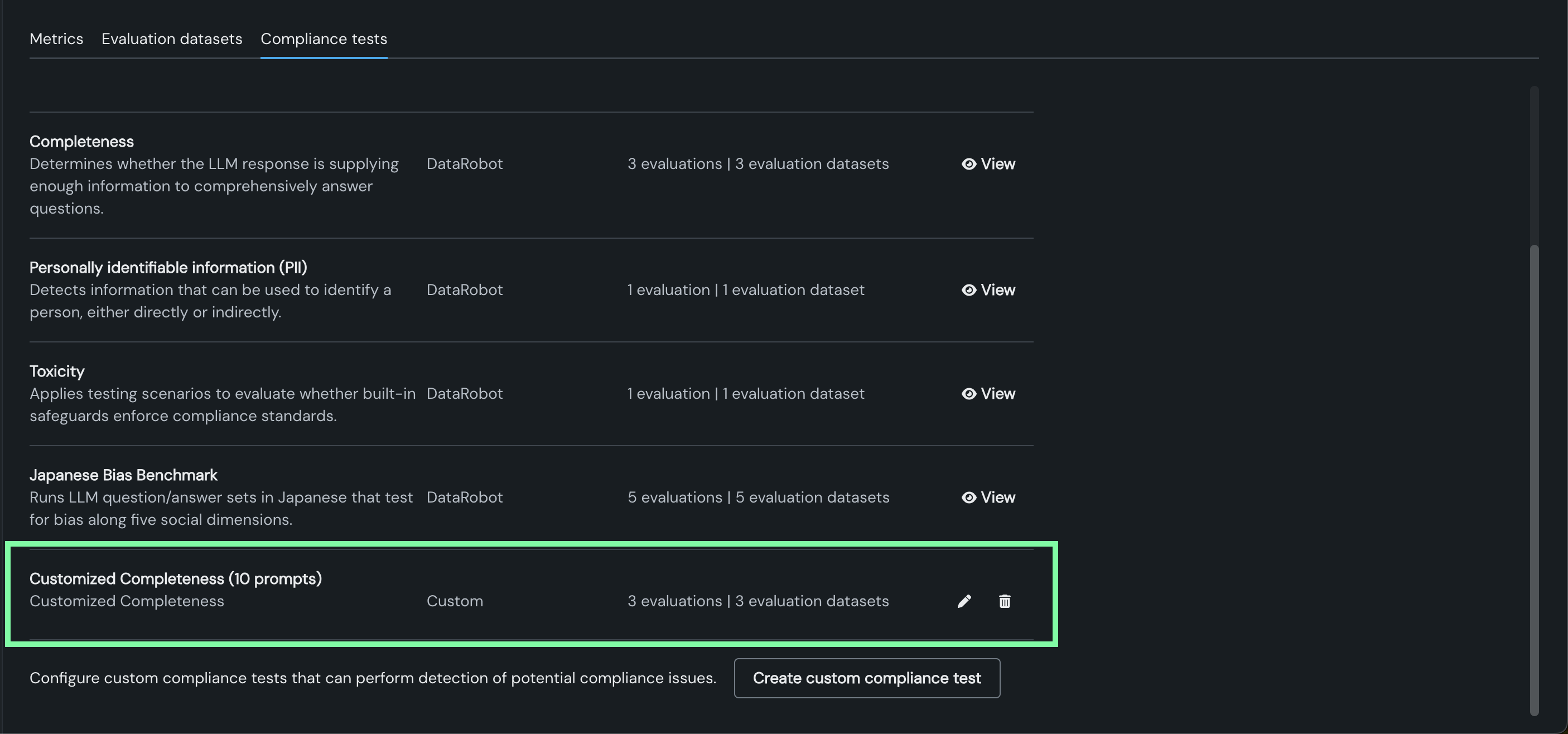

Manage compliance testing from the Evaluation tab¶

When you manage compliance testing on the Evaluation tab, you can view pre-defined compliance tests, create and manage custom tests, or modify pre-defined tests to suit your organization's testing requirements.

To view all available compliance tests:

- On the side navigation bar, click the Evaluation tile.

- Click the Compliance tests tab. On the Compliance tests tab, you can view all the compliance tests available, both DataRobot and custom (if present). The table contains columns for the Test name, Provider, and Configuration (number of evaluations and evaluation datasets).

View and customize DataRobot compliance tests¶

Use the View option to review and, optionally:

- Customize DataRobot pre-configured compliance tests, including changing the LLM for certain tests.

- Manage custom compliance tests.

In the table on the Compliance tests tab, click View to open and review any of the compliance tests in which DataRobot is the Provider:

| Compliance test | Description | Assessing LLM | Based on |
|---|---|---|---|
| Bias Benchmark | Runs LLM question/answer sets that test for bias along eight social dimensions. | GPT-4o | AI Verify Foundation |
| Jailbreak | Applies testing scenarios to evaluate whether built-in safeguards enforce LLM jailbreaking compliance standards. | Customizable | jailbreak_llms |
| Completeness | Determines whether the LLM response is supplying enough information to comprehensively answer questions. | GPT-4o | Internal |
| Personally Identifiable Information (PII) | Determines whether the LLM response contains PII included in the prompt. | Customizable | Internal |
| Toxicity | Applies testing scenarios to evaluate whether built-in safeguards enforce toxicity compliance standards. For more information, see the explicit and offensive content warning. | Customizable | Hugging Face |
| Japanese Bias Benchmark | Runs LLM question/answer sets in Japanese that test for bias along five social dimensions. | GPT-4o | AI Verify Foundation |

Explicit and offensive content warning

The public evaluation dataset for toxicity testing contains explicit and offensive content. It is intended to be used exclusively for the purpose of eliminating such content from customer models and applications. Any other use is strictly prohibited.

Bias tests

Bias testing is based on the following moonshot-data datasets from AI Verify Foundation:

When viewing a compliance test from the list, you can review the individual evaluations run as part of the compliance testing process. For all tests, you can review the Name, Metric, LLM, Evaluation dataset, Pass threshold, and Number of prompts. If the test shows - in the LLM field, it uses GPT-4o. The following tests default to GPT-4o as the LLM but can be customized:

- Jailbreak

- Toxicity

- PII

Use a selected DataRobot test as the foundation for a custom test as follows:

-

Select View for the test you want to modify.

-

Click Customize test.

-

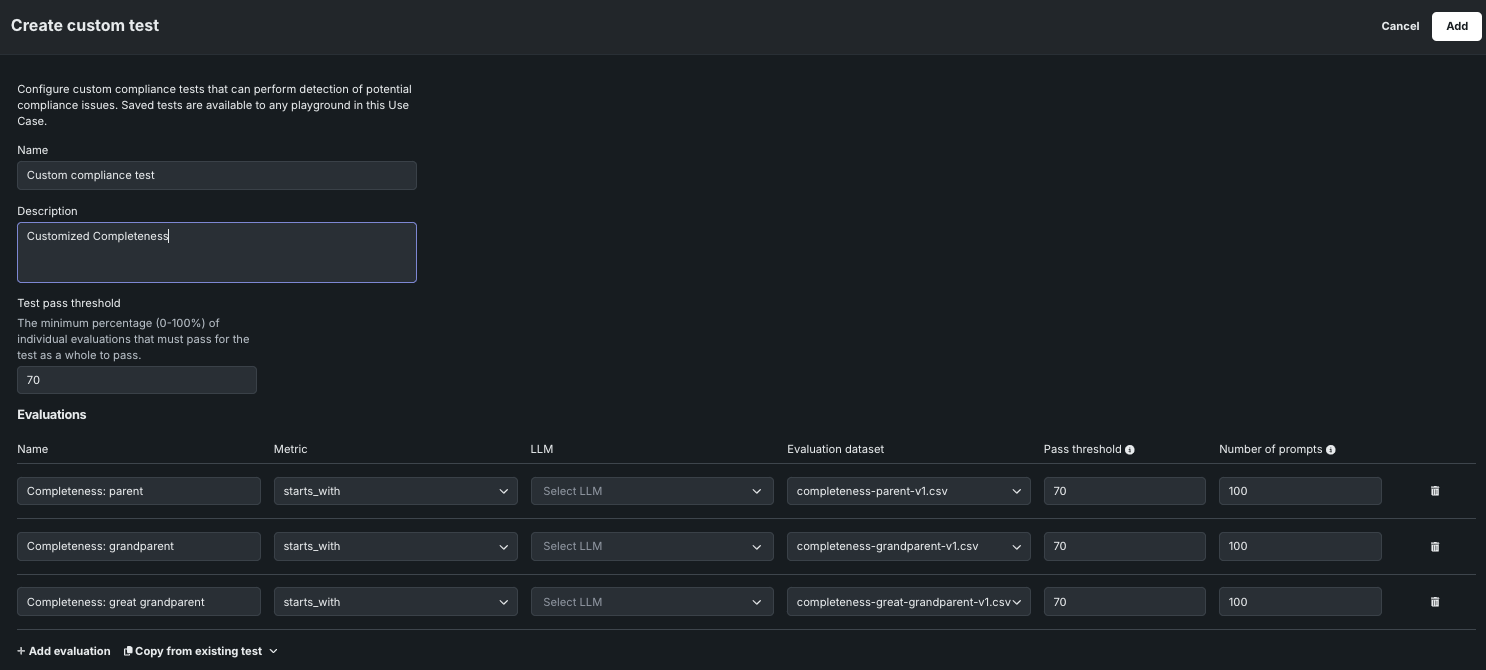

From the Create custom test modal, modify any of the individual evaluations for the compliance test settings.

Note

In addition to the default metrics and evaluation datasets, you can select any evaluation metrics implemented by a deployed binary classification sidecar model and any evaluation datasets added to the Use Case.

| Setting | Description |
|---|---|
| Name | A descriptive name for the custom compliance test. |
| Description | A description of the purpose of the compliance test (this is pre-populated when you modify an existing DataRobot test). |
| Test pass threshold | The minimum percentage (0-100%) of individual evaluations that must pass for the test as a whole to pass. |
| Evaluations* | |
| Name | The name of the individual metric. |
| Metric | The criteria to match against. |
| LLM | The LLM used to assess the response. This field is enabled for Jailbreak, Toxicity, and PII compliance tests. All others use GPT-4o. |
| Evaluation dataset | The dataset used for calculating metrics. |
| Pass threshold | The minimum percentage of responses that must pass for the evaluation to pass. |
| Number of prompts | The number of rows from the dataset used to perform the evaluation. |
| Add evaluation | Create additional evaluations. |
| Copy from existing test | Copy the individual evaluations from an existing compliance test. |

\* Use the API-only process, `expected_response_column`, to validate a sidecar model with metrics you are introducing. It compares the LLM response with an expected response, similar to the pre-provided `exact_match` metric.

- After you customize the compliance test settings, click Add. The new test appears in the table on the Compliance tests tab.

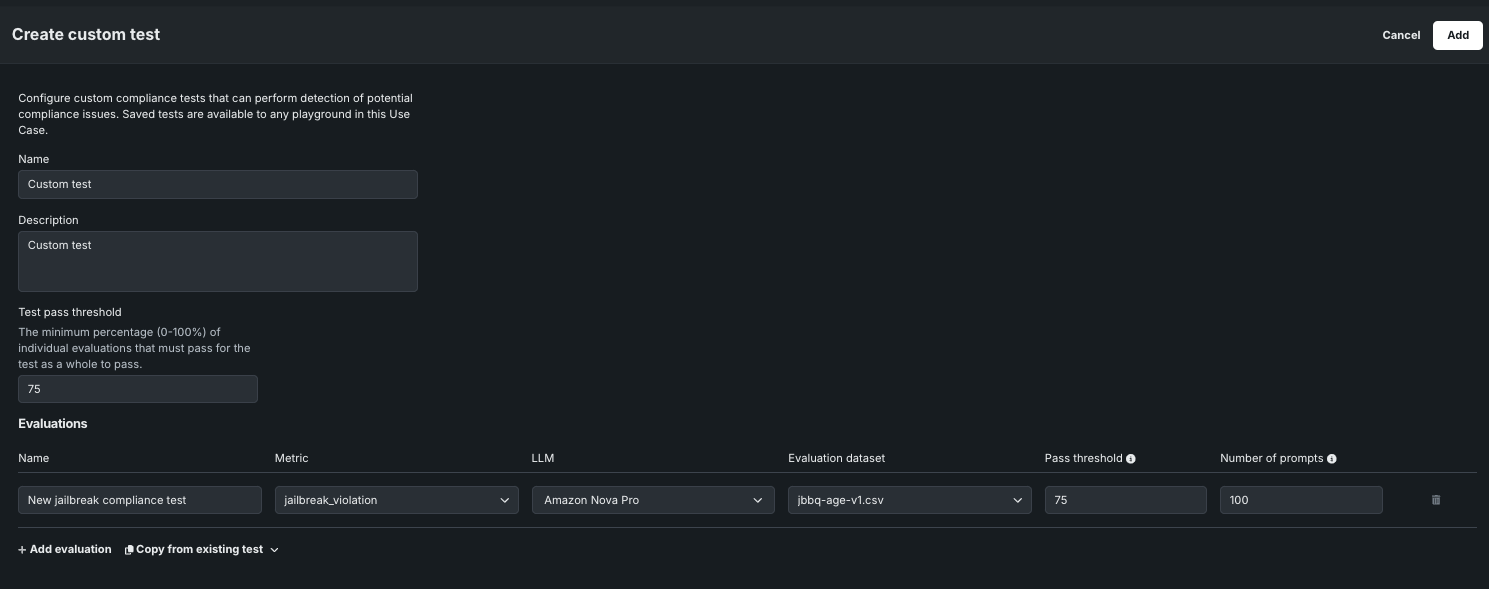
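The idea of comparing an LLM response with an expected response, as the `exact_match` metric is described as doing, can be illustrated with a few lines of Python. This is a conceptual sketch only: it does not use the DataRobot API, the row keys `response` and `expected_response` are hypothetical, and trimming surrounding whitespace before comparing is an assumption about normalization.

```python
def exact_match(response: str, expected_response: str) -> bool:
    """True when both texts are identical after trimming outer whitespace."""
    return response.strip() == expected_response.strip()


def score_rows(rows, response_key="response", expected_key="expected_response"):
    """Score each row of an evaluation dataset; the keys are hypothetical."""
    return [exact_match(row[response_key], row[expected_key]) for row in rows]


if __name__ == "__main__":
    rows = [
        {"response": "Paris", "expected_response": "Paris"},
        {"response": "Paris, France", "expected_response": "Paris"},
    ]
    print(score_rows(rows))
```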

Create custom compliance tests¶

To create a custom compliance test:

- At the top or bottom of the Compliance tests tab, click Create custom compliance test.

Create compliance tests from anywhere in the Evaluation tab

When the Evaluation tab is open, you can click Create custom compliance test from anywhere, not just the Compliance tests tab.

- In the Create custom test panel, configure the following settings:

| Setting | Description |
|---|---|
| Name | A descriptive name for the custom compliance test. |
| Description | A description of the purpose of the compliance test (this is pre-populated when you modify an existing DataRobot test). |
| Test pass threshold | The minimum percentage (0-100%) of individual evaluations that must pass for the test as a whole to pass. |
| Evaluations* | |
| Name | The name of the individual metric. |
| Metric | The criteria to match against. |
| LLM | The LLM used to assess the response. This field is enabled for Jailbreak, Toxicity, and PII compliance tests. All others use GPT-4o. You must set the Metric before setting this field. |
| Evaluation dataset | The dataset used for calculating metrics. |
| Pass threshold | The minimum percentage of responses that must pass for the evaluation to pass. |
| Number of prompts | The number of rows from the dataset used to perform the evaluation. |
| Add evaluation | Create additional evaluations. |
| Copy from existing test | Copy the individual evaluations from an existing compliance test. |

- After you configure the compliance test settings, click Add. The new test appears in the table on the Compliance tests tab.
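The two threshold settings work at different levels: Pass threshold applies to the responses within one evaluation, and Test pass threshold applies to the evaluations within one test. The sketch below shows one way to read that, assuming "minimum percentage" means greater than or equal to; the function names and the input shape are hypothetical.

```python
def evaluation_passes(response_results, pass_threshold_pct):
    """An evaluation passes when enough individual responses pass.

    response_results: list of booleans, one per prompt in the evaluation.
    """
    passed_pct = 100.0 * sum(response_results) / len(response_results)
    return passed_pct >= pass_threshold_pct


def compliance_test_passes(evaluations, test_pass_threshold_pct):
    """A test passes when enough of its evaluations pass.

    evaluations: list of (response_results, pass_threshold_pct) tuples.
    """
    outcomes = [evaluation_passes(results, pct) for results, pct in evaluations]
    passed_pct = 100.0 * sum(outcomes) / len(outcomes)
    return passed_pct >= test_pass_threshold_pct


if __name__ == "__main__":
    evaluations = [
        ([True, True, False, True], 75),  # 75% of responses pass: meets 75
        ([True, False], 60),              # 50% of responses pass: misses 60
    ]
    print(compliance_test_passes(evaluations, 50))
    print(compliance_test_passes(evaluations, 100))
```

In this example, one of two evaluations passes, so the test as a whole passes with a 50% test pass threshold and fails with a 100% threshold.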

Manage custom compliance tests¶

To manage custom compliance tests, locate tests with Custom as the Provider, and choose a management action:

- Click the edit icon, then, in the Edit custom test panel, update the compliance test configuration and click Save.

- Click the delete icon, then click Yes, delete test to remove the test from all playgrounds in the Use Case.

Run compliance testing from the playground¶

When you perform compliance testing on the Playground tile, you can run the pre-defined compliance tests without modification, create custom tests, or modify the pre-defined tests to suit your organization's testing requirements.

To access compliance tests from the playground to run, modify, or create a test:

- On the Playground tile, in the LLM blueprints list, click the LLM blueprint you want to test, or select up to three blueprints for comparison.

Access compliance tests from the blueprints comparison page

If you have two or more LLM blueprints selected, you can click the Compliance tests tab from the Blueprints comparison page to run compliance tests for multiple LLM blueprints and compare the results. For more information, see Compare compliance test results.

-

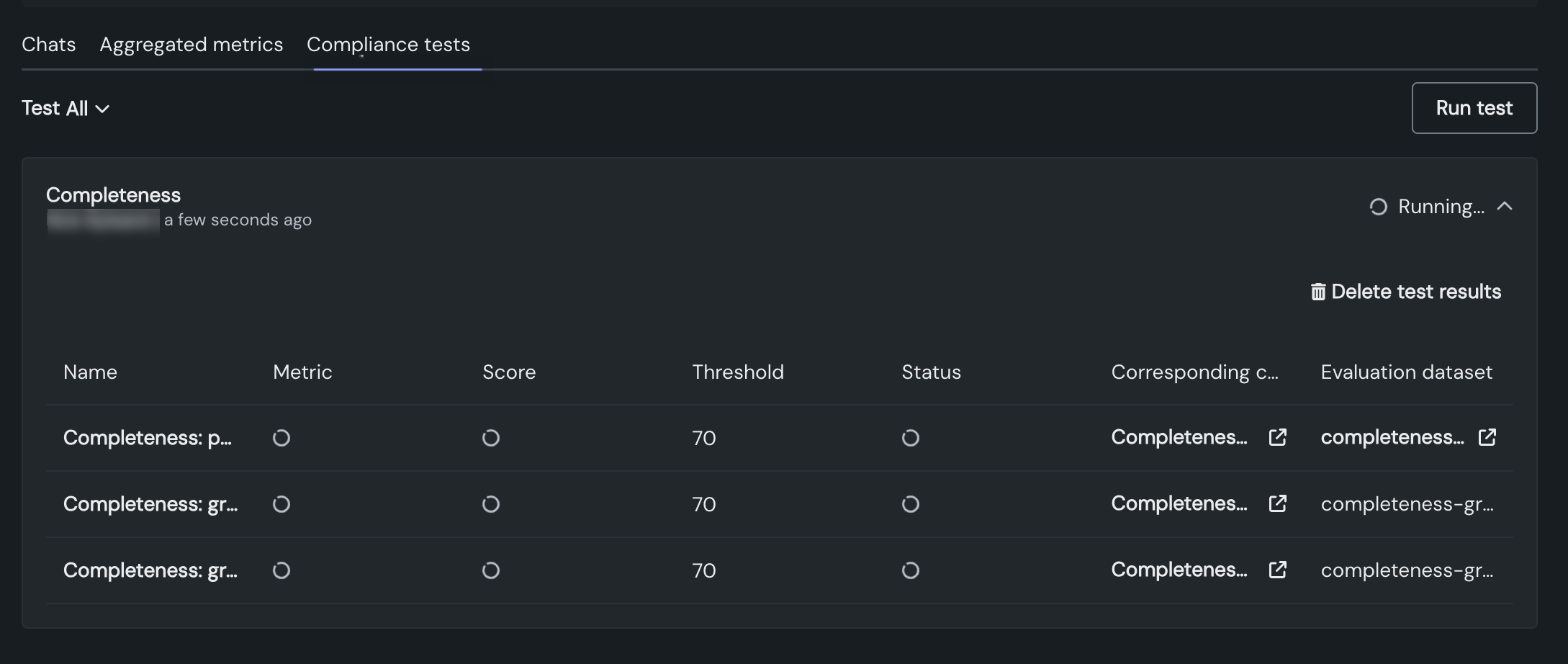

In the LLM blueprint, click the Compliance tests tab to create or run tests. If you have not run tests before, you receive a message saying no compliance test results are available. If you have run a test before, test results are listed. In either case, click Run test to open the test panel.

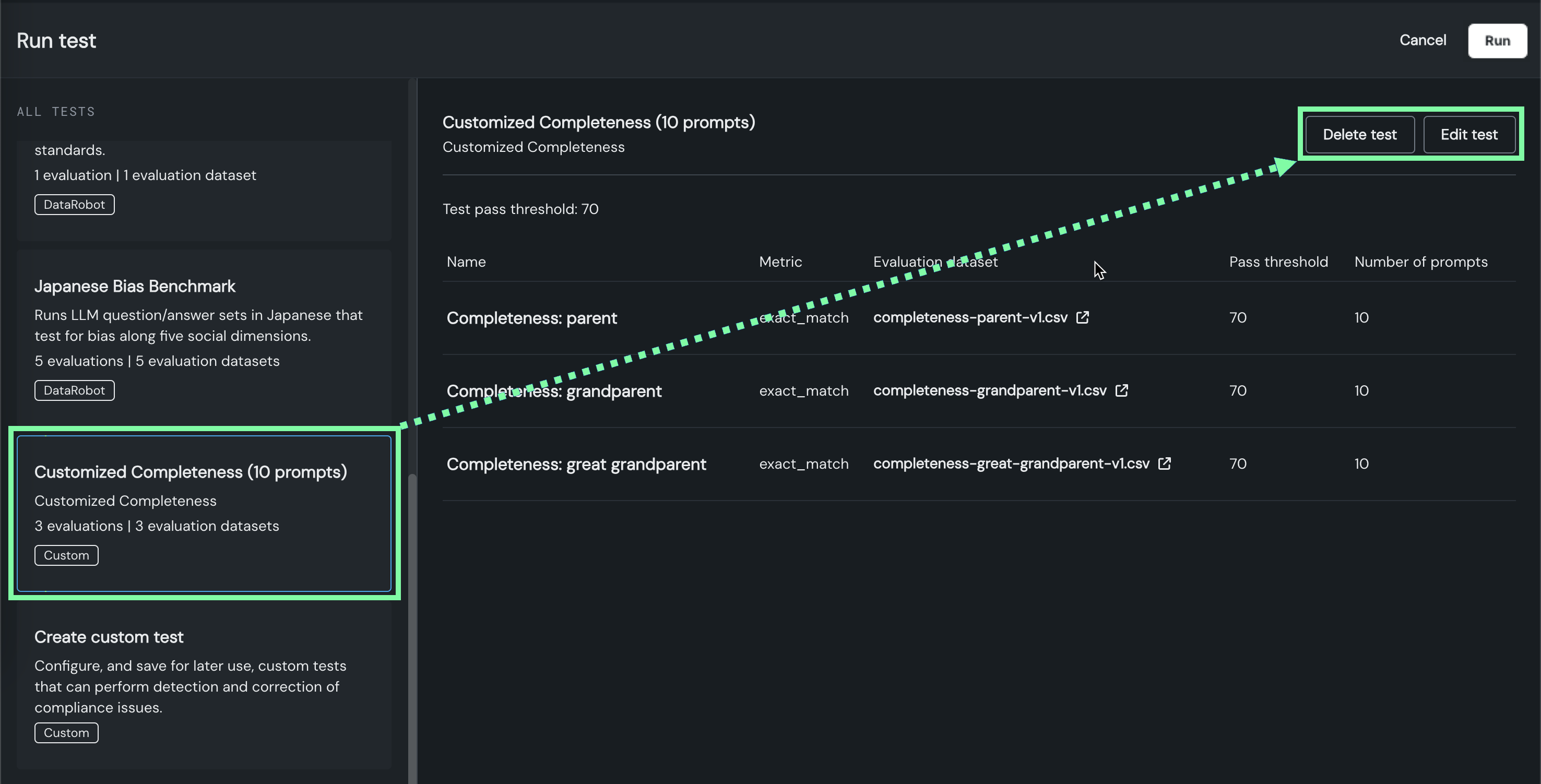

- The Run test panel opens to a list of pre-configured DataRobot compliance tests and custom tests you've created.

- When you select a compliance test from the All tests list, you can view the individual evaluations run as part of the compliance testing process. For each test, you can review the Name, Metric, Evaluation dataset, Pass threshold, and Number of prompts.

- Next, run an existing test, create and run a custom test, or manage custom tests.

Run existing compliance tests¶

To run an existing, configured compliance test:

- On the Run test panel, from the All tests list, select an available DataRobot or Custom test.

- After selecting a test, click Run.

- The test appears on the Compliance tests tab with a Running... status.

Cancel a running test

If you need to cancel a test with the Running... status, click Delete test results.

Create and run custom compliance tests¶

To create and run a custom or modified compliance test:

- On the Run test panel, from the All tests list:

- On the Custom test panel, configure the following settings:

| Setting | Description |
|---|---|
| Name | A descriptive name for the custom compliance test. |
| Description | A description of the purpose of the compliance test (this is pre-populated when you modify an existing DataRobot test). |
| Test pass threshold | The minimum percentage (0-100%) of individual evaluations that must pass for the test as a whole to pass. |
| Evaluations | The individual evaluations for the compliance test, each consisting of a Name, Metric, Evaluation dataset, Pass threshold, and Number of prompts. In addition to the default metrics and evaluation datasets, you can select any evaluation metrics implemented by a deployed binary classification sidecar model and any evaluation datasets added to the Use Case. Click + Add evaluation to create additional evaluations. Click Copy from existing test to copy the individual evaluations from an existing compliance test. |

Use the API-only process, `expected_response_column`, to introduce metrics comparing the LLM response with an expected response, similar to the pre-provided `exact_match` metric.

- After configuring a custom test, click Save and run.

- The test appears on the Compliance tests tab with a Running... status.

Cancel a running test

If you need to cancel a test with the Running... status, click Delete test results.

Manage compliance test runs¶

From a running or completed test on the Compliance tests tab:

- To delete a completed test run or cancel and delete a running test, click Delete test results.

- To view the chat calculating the metric, click the chat name in the Corresponding chat column.

- To view the evaluation dataset used to calculate the metric, click the dataset name in the Evaluation dataset column.

Manage custom compliance tests¶

To manage custom compliance tests, on the Run test panel, from the All tests list, select a custom test, then click Delete test or Edit test. You can't edit or delete pre-configured DataRobot tests.

If you select Edit test, update the settings you configured during compliance test creation.

Compare compliance test results¶

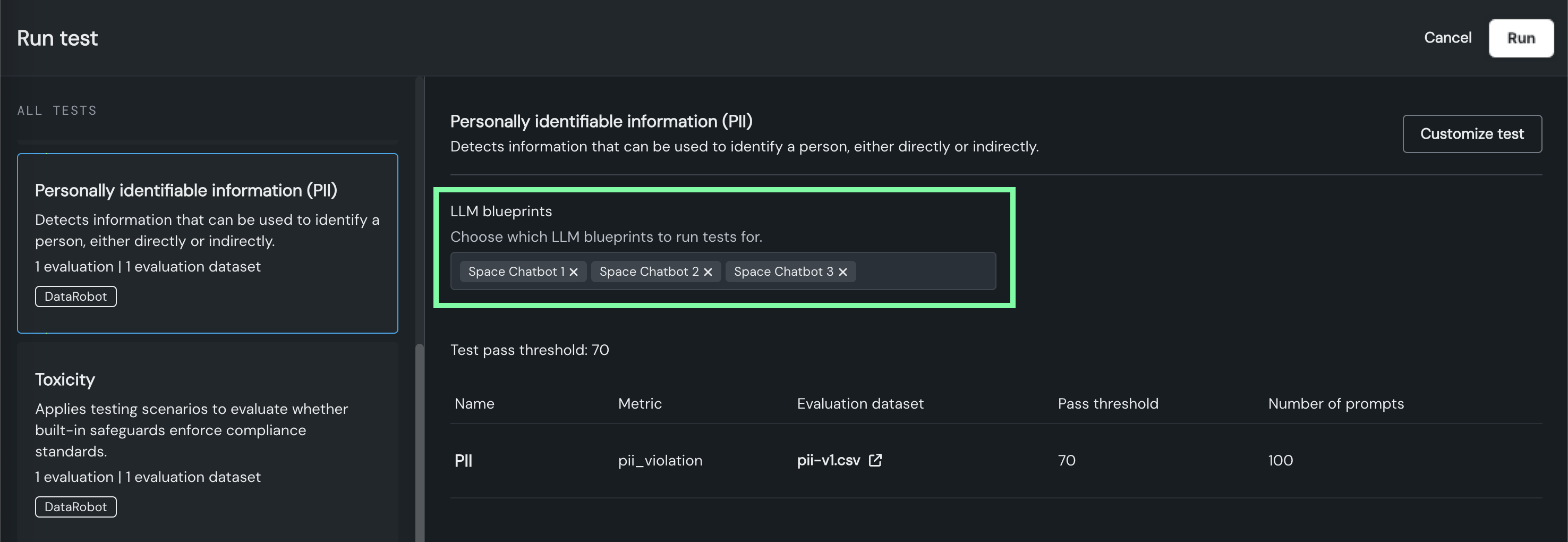

To compare compliance test results, you can run compliance tests for up to three LLM blueprints at a time. On the Playground tile, in the LLM blueprints list, select up to three LLM blueprints to test, click the Compliance tests tab, and then click Run test.

This opens the Run test panel, where you can select and run a test as you would for a single blueprint; however, you can also define the LLM blueprints to run it for. By default, the blueprints selected on the comparison tab are listed here:

After the compliance tests run, you can compare them on the Blueprints comparison page. To delete a completed test run, or cancel an in-progress test run, click Delete test results.

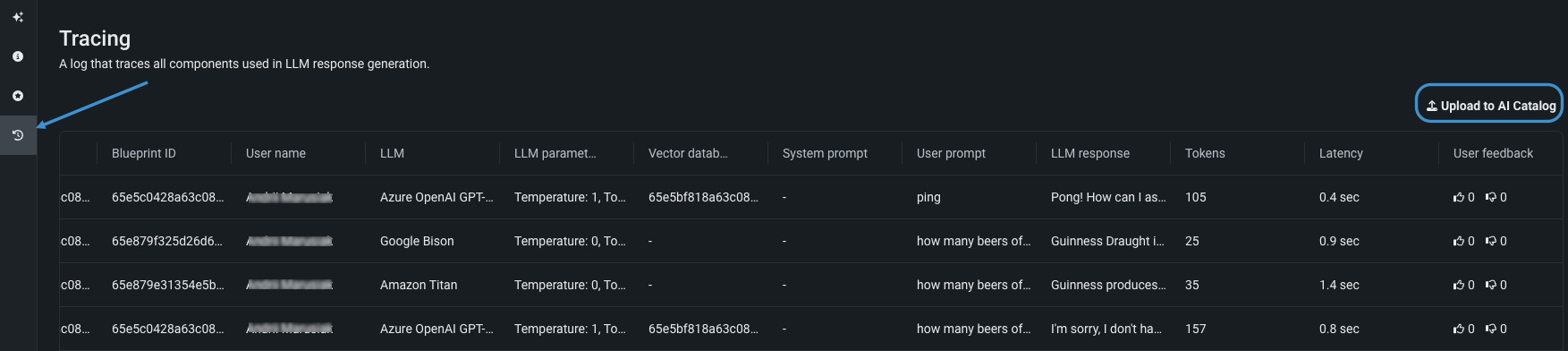

View the tracing table¶

Tracing the execution of LLM blueprints is a powerful tool for understanding how most parts of the GenAI stack work. The Tracing tab provides a log of all components and prompting activity used in generating LLM responses in the playground. Insights from tracing provide full context of everything the LLM evaluated, including prompts, vector database chunks, and past interactions within the context window. For example:

- DataRobot metadata: Reports the timestamp, Use Case, playground, vector database, and blueprint IDs, as well as creator name and base LLM. These help pinpoint the sources of trace records if you need to surface additional information from DataRobot objects interacting with the LLM blueprint.

- LLM parameters: Shows the parameters used when calling out to an LLM, which is useful for potentially debugging settings like temperature and the system prompts.

- Prompts and responses: Provide a history of chats; token count and user feedback provide additional detail.

- Latency: Highlights issues orchestrating the parts of the LLM Blueprint.

- Token usage: Displays the breakdown of token usage to accurately calculate LLM cost.

- Evaluations and moderations (if configured): Illustrates how evaluation and moderation metrics are scoring prompts or responses.
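As an illustration of how a token breakdown like the one in the tracing table relates to cost, the sketch below combines token counts with input and output cost-per-token values. It is not the platform's formula: treating retrieved document (citation) tokens as additional input tokens is an assumption, and the function name and example prices are hypothetical.

```python
def llm_cost(prompt_tokens, response_tokens, document_tokens,
             input_cost_per_token, output_cost_per_token):
    """Estimate the cost of one LLM call from its token breakdown.

    Assumption: document tokens retrieved from the vector database are billed
    at the input rate, alongside the prompt tokens.
    """
    input_tokens = prompt_tokens + document_tokens
    return (input_tokens * input_cost_per_token
            + response_tokens * output_cost_per_token)


if __name__ == "__main__":
    # Hypothetical prices, expressed per single token.
    cost = llm_cost(
        prompt_tokens=1000,
        response_tokens=200,
        document_tokens=500,
        input_cost_per_token=0.000001,
        output_cost_per_token=0.000002,
    )
    print(f"{cost:.6f}")
```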

To locate specific information in the Tracing table, click Filters and filter by User name, LLM, Vector database, LLM Blueprint name, Chat name, Evaluation dataset, and Evaluation status.

Send tracing data to the Data Registry

Click Upload to Data Registry to export data from the tracing table to the Data Registry. If the tracing table includes results from running the toxicity test, a warning appears on the table, and the toxicity test results are excluded from the Data Registry upload.

Send a metric and compliance test configuration to the workshop¶

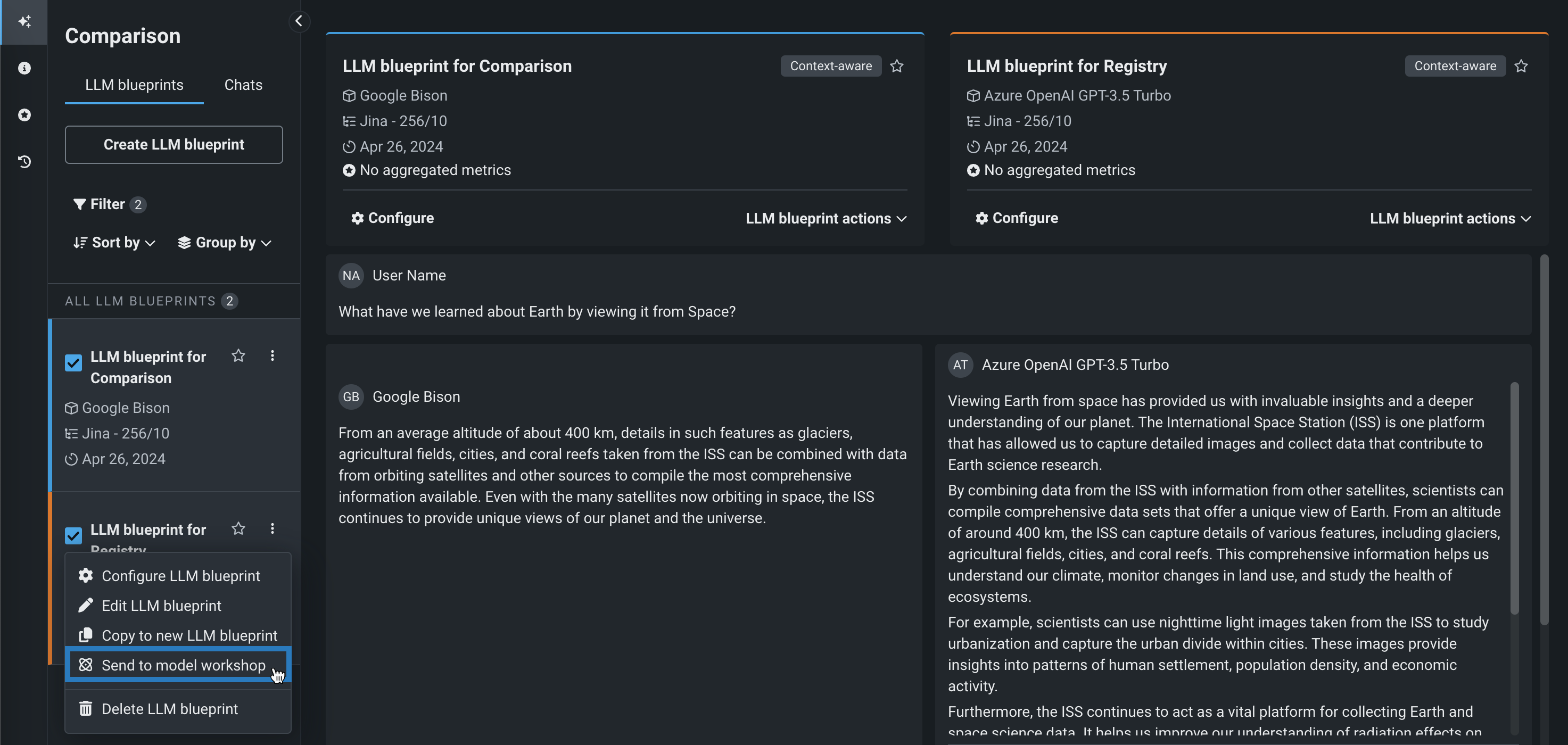

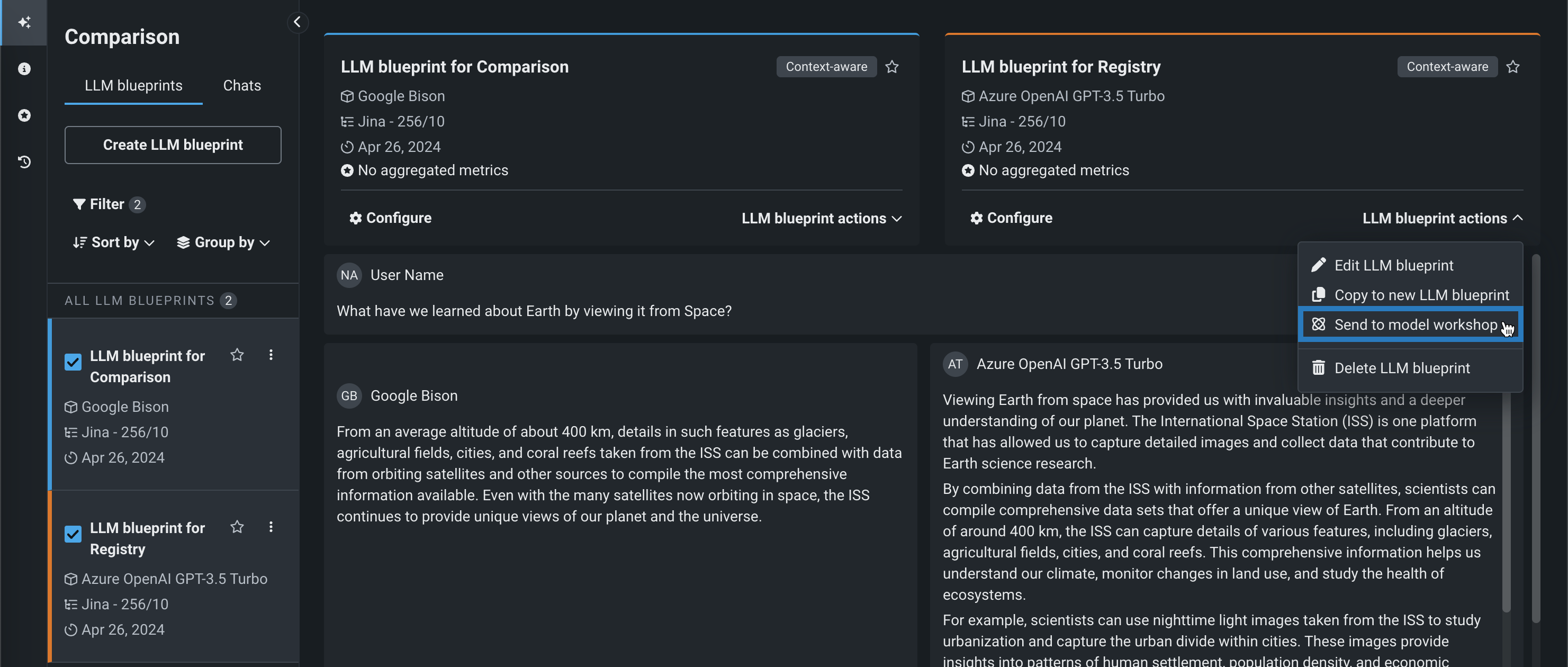

After creating an LLM blueprint, setting the blueprint configuration (including evaluation metrics and moderations), and testing and tuning the responses, send the LLM blueprint to the workshop:

- In a Use Case, from the Playground tile, click the playground containing the LLM you want to register as a blueprint.

- In the playground, compare LLMs to determine which LLM blueprint to send to the workshop, then do either of the following:

- In the Send to the workshop modal, select up to twelve evaluation metrics (and any configured moderations).

Why can't I send all metrics to the workshop?

Several metrics are supported by default after you register and deploy an LLM sent to the workshop from the playground; others are configurable using custom metrics. The following table lists the evaluation metrics you cannot select during this process and provides the alternative metric in Console:

| Metric | Console equivalent |
|---|---|
| Citations | Citations are provided on the Data exploration > Tracing tab. If configured in the playground, citations are included in the transfer by default, without the need to select the option in the Send to the workshop modal. The resulting custom model has the `ENABLE_CITATION_COLUMNS` runtime parameter configured. After deploying that custom model, if the Data exploration tab is enabled and association IDs are provided, citations are available for a model sent to the workshop. |
| Cost | Cost can be calculated on the Monitoring > Custom metrics tab of a deployment. |
| Correctness | Correctness is not available for deployed models. |
| Latency | Latency is calculated on the Monitoring > Service health tab and Monitoring > Custom metrics tab. |
| All Tokens | All tokens can be calculated on the Custom metrics tab, or you can add the prompt tokens and response tokens metrics separately. |
| Document Tokens | Document tokens are not available for deployed models. |

- Next, select any Compliance tests to send. Then, click Send to the workshop:

Compliance tests sent to the workshop are included when you register the custom model and generate compliance documentation.

Compliance tests in the workshop

The selected compliance tests are linked to the custom model in the workshop by the `LLM_TEST_SUITE_ID` runtime parameter. If you modify the custom model code significantly in the workshop, set the `LLM_TEST_SUITE_ID` runtime parameter to `None` to avoid running compliance documentation intended for the original model on the modified model.

- To complete the transfer of evaluation metrics, configure the custom model in the workshop.
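The selection rules for the Send to the workshop modal (at most twelve metrics, and none of the metrics listed as not selectable) can be expressed as a small pre-check. This is a hypothetical helper for illustration, not part of any DataRobot client; the metric names are compared case-insensitively as an assumption.

```python
MAX_WORKSHOP_METRICS = 12  # limit stated in the steps above

# Metrics the documentation lists as not selectable during this process.
NOT_SELECTABLE = {
    "citations",
    "cost",
    "correctness",
    "latency",
    "all tokens",
    "document tokens",
}


def validate_workshop_selection(selected_metrics):
    """Return a list of problems with a metric selection; empty means valid."""
    problems = []
    if len(selected_metrics) > MAX_WORKSHOP_METRICS:
        problems.append(
            f"{len(selected_metrics)} metrics selected "
            f"(limit {MAX_WORKSHOP_METRICS})."
        )
    for name in selected_metrics:
        if name.strip().lower() in NOT_SELECTABLE:
            problems.append(
                f"{name} cannot be selected in the Send to the workshop modal."
            )
    return problems


if __name__ == "__main__":
    print(validate_workshop_selection(["Toxicity", "Faithfulness", "Latency"]))
```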