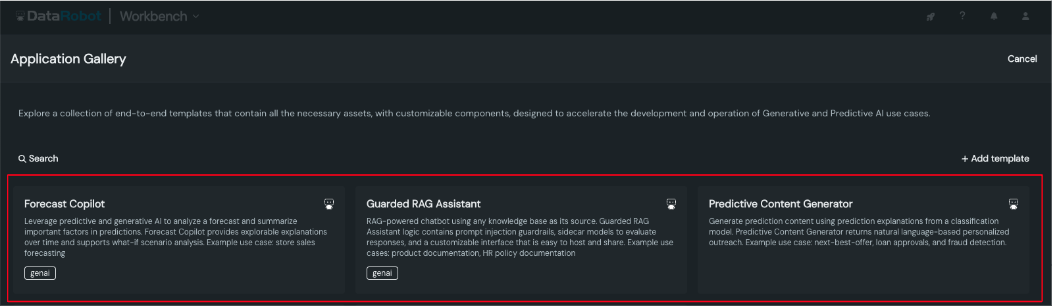

What are Application Templates and why should I add them?

Application Templates are comprehensive, end-to-end "recipes" that accelerate the development and operation of Generative and Predictive AI use cases. Each template includes all the necessary assets as customizable components, so teams can quickly implement tailored AI solutions. Templates cover three core elements (AI logic, app logic, and infrastructure as code for managing resources) and provide a ready-to-use foundation for all three. Most Application Templates are freely available to all customers, but some require access to specific features, such as GenAI or Time Series, for full functionality. Certain templates are only available with premium SKUs, such as the SAP integration SKU.

The officially supported templates available in DataRobot are:

* Guarded RAG Assistant
* Forecast Assistant
* Predictive Content Generator
* Predictive AI Starter
* Talk to My Data Agent
* Talk to My Docs Agent

Who is this install guide for?

This guide explains how to make DataRobot-built Application Templates available to self-managed customers running DataRobot in air-gapped environments (no internet access). It is intended for organizations that host an internal, air-gapped GitHub or GitLab instance.

Where do Templates come from?

The officially supported DataRobot templates are installed at the same time DataRobot is installed, so there is no need to run migrations or other scripts to get started with them.

How do I add my organization's own Templates to the Gallery in the product?

Download each template repository from the URLs below and package it as a zip or tar archive. Verify that each URL points to the right release branch for your installation, for example `release/11.1`.

* Guarded RAG Assistant

* Forecast Assistant

* Predictive Content Generator

* Predictive AI Starter

* Talk to My Data Agent

* Talk to My Docs Agent

Each template's required dependencies are listed in its `requirements.txt` file.
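The download-and-package step can be scripted. Below is a minimal bash sketch, assuming `git` and `tar` are installed on a machine with internet access. The function name `package_template` and the `<TEMPLATE-REPO-URL>` placeholder are hypothetical; substitute each repository URL listed above. Keeping the `.git` directory in the archive (rather than exporting a plain snapshot) is a design choice so that the branch can be pushed to your internal GitHub/GitLab server later, and `requirements.txt` is copied out separately so it can go through review before the archive is opened.

```shell
#!/usr/bin/env bash
# Hypothetical helper: clone one template repository at a release branch,
# archive it for transfer into the air-gapped network, and copy its
# requirements.txt next to the archive for dependency review.
# Arguments: repository URL, branch name (e.g. release/11.1), output directory.
package_template() {
  local repo_url="$1" ref="$2" out_dir="$3"
  local name work status=0
  # Derive the archive name from the last path segment of the URL.
  name="$(basename "${repo_url%.git}")"
  work="$(mktemp -d)" || return 1

  # Clone only the release branch; .git is kept so the history can later be
  # pushed to the internal GitHub/GitLab server.
  git clone --quiet --single-branch --branch "$ref" "$repo_url" "$work/$name" &&
    mkdir -p "$out_dir" &&
    tar -czf "$out_dir/$name.tar.gz" -C "$work" "$name" || status=1

  # Copy the dependency pins out for security/compatibility approval.
  if [ "$status" -eq 0 ] && [ -f "$work/$name/requirements.txt" ]; then
    cp "$work/$name/requirements.txt" "$out_dir/$name-requirements.txt" || status=1
  fi

  # Remove the temporary clone whether or not packaging succeeded.
  rm -rf "$work"
  return "$status"
}

# Usage (placeholder URL):
# package_template '<TEMPLATE-REPO-URL>' release/11.1 ./template-bundles
```

On the air-gapped side, you might extract the archive and push the branch to your internal server, for example with `git -C <name> remote set-url origin <INTERNAL-REPO-URL>` followed by `git -C <name> push origin release/11.1`, where `<name>` and `<INTERNAL-REPO-URL>` are placeholders.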

How do I add the Templates to the Gallery in the product?

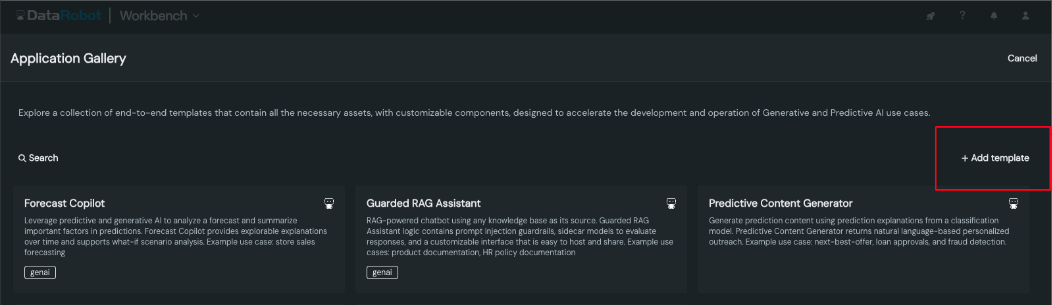

There are two ways to add templates to the Gallery so that users can easily discover them in the product: via the terminal or via the UI.

Via terminal

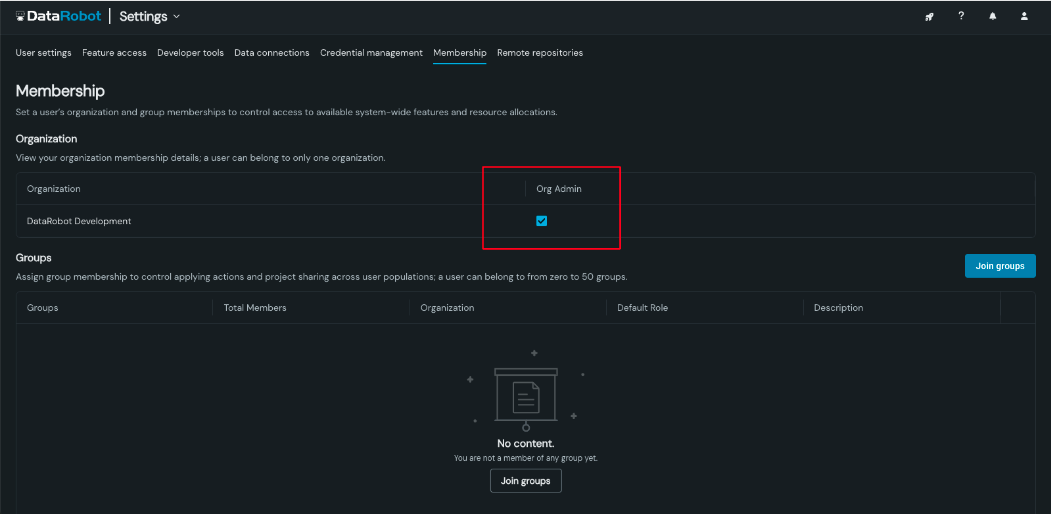

To add an application template, you need an organization admin API token and the template details. Then upload the template with a cURL request like this:

```shell
curl --location '<YOUR-HOST>/api/v2/applicationTemplates/' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer <TOKEN>' \
  --data '{"name": "SAP Integration Template", "description": "A template for enabling users to rapidly integrate with SAP", "tags": ["genai", "sap"], "repository": {"url": "https://github.com/datarobot/SapIntegration", "tag": "11.1", "isPublic": true}}' \
  --form 'readme=@"path/to/readme/file"' \
  --form 'media=@"path/to/media.gif"'
```

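The `media` field is optional; you can add media later. Note that curl rejects a single request that combines `--data` with `--form` (it reports that you can only select one HTTP request method), so confirm the request format against the API reference for your DataRobot version before running this command.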

All templates come with some media, usually an animated GIF. This media matters because it is part of the "hook" that gets users curious and excited about a template. To add media to a template (if you did not include it at creation), run:

```shell
curl --location '<YOUR-HOST>/api/v2/applicationTemplates/<TEMPLATE-ID>/media/' \
  --header 'Authorization: Bearer <TOKEN>' \
  --form 'file=@"<PATH-TO-YOUR-FILE>"'
```
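If you attach media to several templates, a small wrapper around the media request above can help. This is a hypothetical bash sketch: the function name and argument order are illustrative, not part of the DataRobot API. It adds curl's `--fail` flag so that HTTP errors produce a non-zero exit status, and it checks that the media file exists before sending anything.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the media upload request shown above.
# Arguments: DataRobot host, org admin API token, template ID, media file path.
upload_template_media() {
  local host="$1" token="$2" template_id="$3" file="$4"
  # Fail early instead of sending a request with a missing file.
  if [ ! -f "$file" ]; then
    echo "media file not found: $file" >&2
    return 1
  fi
  # --fail: non-zero exit status on HTTP 4xx/5xx responses.
  # ${host%/} strips one trailing slash so the path is not doubled.
  curl --fail --silent --show-error --location \
    "${host%/}/api/v2/applicationTemplates/${template_id}/media/" \
    --header "Authorization: Bearer ${token}" \
    --form "file=@${file}"
}
```

For several templates, you could loop over a list, for example `while read -r id file; do upload_template_media "$DR_HOST" "$DR_TOKEN" "$id" "$file"; done < media-list.txt`, where `media-list.txt` (one template ID and media path per line) and the `DR_HOST`/`DR_TOKEN` variables are hypothetical.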

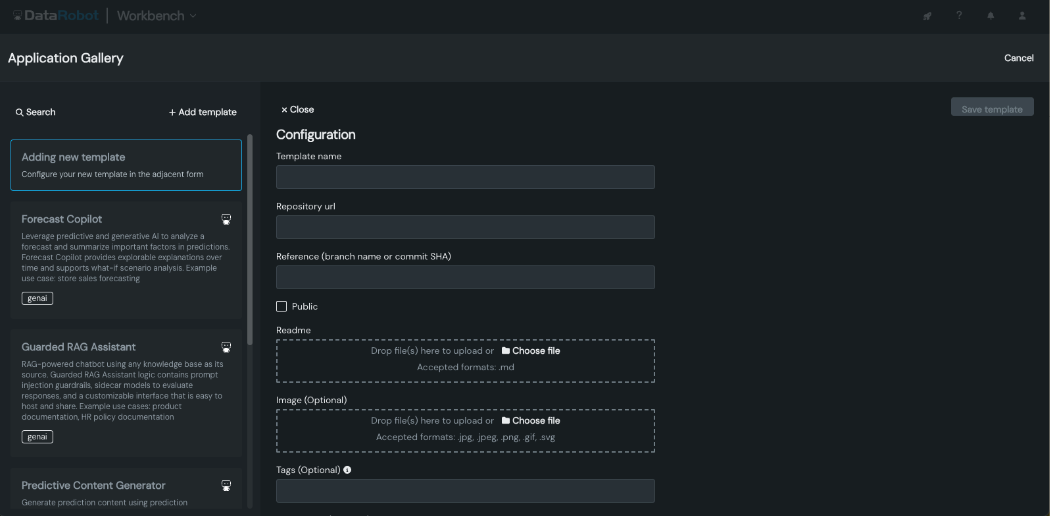

Via UI

Recommended reference metadata for the officially supported DataRobot templates

| Field | Recommended value |
|---|---|
| Template name | Talk to My Data Agent |
| Repository URL | URL of the internal air-gapped GitHub/GitLab repository |
| Reference (branch name or commit SHA) | For example, `release/11.1` |
| Public | Leave unchecked if the repository is private. |
| Readme | Upload the `README.md` file from the provided template repository directory. |
| Image (optional) | Already included in the README files, so you can leave this blank. |
| Tags (optional) | |
| Description (optional) | Transform thousands to billions of rows of data into actionable insights. Prompt for agent-recommended analyses supported by charts, tables, and code to uncover hidden answers in your data. For example, leverage fast and efficient insights for sales, marketing, financial, and supply chain analysis. |
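The Reference field should name a branch (or commit SHA) that actually exists in your internal repository. As a hedged sketch, you could check a branch or tag name with `git ls-remote` before filling in the form. The helper name is hypothetical, and it does not validate commit SHAs, because `ls-remote` matches ref names only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit status 0 if REF exists as a branch or tag in the
# repository at REPO_URL, non-zero otherwise. Commit SHAs are not checked.
template_ref_exists() {
  local repo_url="$1" ref="$2"
  # --exit-code makes ls-remote exit with status 2 when no ref matches.
  git ls-remote --exit-code "$repo_url" "refs/heads/$ref" "refs/tags/$ref" >/dev/null
}

# Usage (placeholder URL):
# template_ref_exists '<INTERNAL-REPO-URL>' release/11.1 && echo "reference found"
```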

How do I remove some or all officially supported DataRobot templates in an environment or install?

Some templates require GenAI or Time Series features, which may not be part of an on-premise customer's purchase. To remove an officially supported DataRobot template from the list of available templates, add:

```shell
export DISABLED_APP_TEMPLATES='["<id-of-template-here>", "<id-of-other-template>"]'
```

To remove all global templates, define:

```shell
export DISABLED_APP_TEMPLATES='["*"]'
```
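Hand-typing the JSON array is error-prone. This hypothetical bash helper only formats template IDs into the array shape used in the examples above; it assumes the IDs contain no double quotes or backslashes, and it does not look IDs up for you. The resulting variable must still be set wherever your installation reads `DISABLED_APP_TEMPLATES`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: format template IDs as a JSON array of strings,
# e.g. ["id1", "id2"]. Assumes IDs contain no double quotes or backslashes.
disabled_templates_json() {
  local json="" id
  for id in "$@"; do
    # ${json:+...} inserts the ", " separator only after the first element.
    json="${json:+$json, }\"$id\""
  done
  printf '[%s]\n' "$json"
}

# Same value as the first example above (placeholder IDs).
DISABLED_APP_TEMPLATES="$(disabled_templates_json '<id-of-template-here>' '<id-of-other-template>')"
export DISABLED_APP_TEMPLATES
```

Passing a single `'*'` argument (quoted, so the shell does not expand it) produces `["*"]`, the value that removes all global templates.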

Additional Information

- Repository Structure: Each template repository contains a `requirements.txt` file that lists the pinned dependencies. These pins are included to facilitate security and compatibility approvals, ensuring that all required components are correctly identified for your environment.

- Repository Packaging: All repositories are provided as compressed (tarred or zipped) files and brought into your environment during the DataRobot installation process. This ensures that the entire package is ready for deployment in environments with restricted internet access.

- Template Gallery: The template gallery is configured to prevent links to public GitHub repositories. This ensures there are no broken links or inaccessible content in air-gapped environments, providing a seamless experience.

Work is ongoing to bundle each repository with CVE-scanned Docker images that include all necessary dependencies and the full template source code; this is expected in the coming months. The images will be preconfigured for immediate use after installation, which simplifies and secures the setup process.

Performance Considerations

- Application Resource Bundle: For processing large datasets, consider a larger Application Resource Bundle with more CPU and memory. The default bundle size is XL. For datasets larger than 200 MB in the Talk to My Data Agent application, consider using the Remote Registry option with a Spark SQL query.

- Application Replicas: Consider increasing the number of application replicas to support more concurrent users. With multiple replicas, consider enabling session affinity so that all requests from a given user are routed to the same replica.

- Custom Model Scaling: LLM performance varies from model to model; with GPT models, inference typically takes around 20 to 25 seconds. If users experience high latency waiting for LLM responses, consider tuning the custom model's autoscaling settings.