Models in production

DataRobot MLOps provides a central hub to deploy, monitor, manage, and govern all your models in production, regardless of how they were created or when and where they were deployed. MLOps helps improve and maintain the quality of your models through health monitoring that adapts to changing conditions via continuous, automated model competitions (challenger models). It also ensures that all centralized production machine learning processes operate under a robust governance framework across your organization, so that the burden of production model management is shared.

With MLOps, you can deploy any model to your production environment of choice. By using the MLOps agent, you can monitor any model that is already deployed in production and receive live updates on its behavior and performance from a single, centralized machine learning operations system. MLOps makes it easy to deploy models written in any open-source language or library and to expose a production-quality REST API that supports real-time or batch predictions. MLOps also offers built-in write-back integrations to systems such as Snowflake and Tableau.
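As an illustration of calling a deployed model through a REST API, the following minimal sketch builds, but does not send, a JSON scoring request using only the Python standard library. The URL, the bearer-token `Authorization` header, and the list-of-records payload shape are hypothetical placeholders, not DataRobot's documented prediction API; check the actual endpoint, headers, and input format for your deployment before adapting it.

```python
import json
import urllib.request
from typing import Dict, List


def build_prediction_request(
    url: str, api_token: str, rows: List[Dict[str, object]]
) -> urllib.request.Request:
    """Build (but do not send) a JSON scoring request.

    Hypothetical: the URL, auth scheme, and payload shape are placeholders,
    not a documented DataRobot endpoint.
    """
    body = json.dumps(rows).encode("utf-8")  # one JSON object per row to score
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_token}",  # placeholder auth scheme
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would actually send the request; keeping construction separate makes the payload easy to inspect before any network call.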

MLOps provides constant monitoring and production diagnostics to improve the performance of your existing models. Automated best practices enable you to track service health, accuracy, and data drift to explain why your model is degrading. You can build your own challenger models or use Automated Machine Learning to build them for you and test them against your current champion model. This process of continuous learning and evaluation enables you to avoid surprise changes in model performance.
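The champion/challenger idea can be sketched as scoring every candidate against the same actuals and comparing a shared error metric. This minimal sketch assumes a regression target and uses root mean squared error (RMSE) as the comparison metric; the text does not say which metric DataRobot uses, and the function names are hypothetical.

```python
import math
from typing import Dict, Sequence


def rmse(actuals: Sequence[float], predictions: Sequence[float]) -> float:
    """Root mean squared error between aligned actuals and predictions."""
    if not actuals or len(actuals) != len(predictions):
        raise ValueError("need equal-length, non-empty sequences")
    squared = sum((a - p) ** 2 for a, p in zip(actuals, predictions))
    return math.sqrt(squared / len(actuals))


def pick_best(actuals: Sequence[float], candidates: Dict[str, Sequence[float]]) -> str:
    """Return the candidate (champion or challenger) with the lowest RMSE."""
    scores = {name: rmse(actuals, preds) for name, preds in candidates.items()}
    return min(scores, key=scores.__getitem__)


actuals = [3.0, 5.0, 2.0]
candidates = {
    "champion": [2.5, 5.5, 2.0],    # RMSE ~ 0.408
    "challenger": [3.0, 4.8, 2.1],  # RMSE ~ 0.129
}
print(pick_best(actuals, candidates))  # prints "challenger"
```

A stricter rule might also require the challenger to win by some margin before you promote it; that policy is deliberately left out of this sketch.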

The tools and capabilities of every deployment are determined by the data available to it: training data, prediction data, and outcome data (also referred to as actuals).
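To make this dependency concrete, here is a simplified sketch of which monitoring capabilities each kind of data enables. The mapping is an assumption for illustration (service health from prediction traffic, data drift from comparing prediction data with training data, accuracy from comparing predictions with actuals), not an exhaustive or authoritative list of DataRobot features.

```python
from typing import Set


def available_capabilities(
    has_training_data: bool, has_prediction_data: bool, has_actuals: bool
) -> Set[str]:
    """Hypothetical, simplified mapping from available data to capabilities."""
    capabilities: Set[str] = set()
    if has_prediction_data:
        # Incoming prediction requests are what service health is measured on.
        capabilities.add("service health")
        if has_training_data:
            # Drift compares prediction data against the training baseline.
            capabilities.add("data drift")
        if has_actuals:
            # Accuracy compares predictions against the outcomes (actuals).
            capabilities.add("accuracy")
    return capabilities


print(sorted(available_capabilities(True, True, False)))
# prints ['data drift', 'service health']
```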

Example deployment workflow

The primary deployment workflow is deploying a DataRobot model to a DataRobot prediction environment. You can complete the standard DataRobot deployment process in the five steps described below.

For alternate workflow examples, see the MLOps deployment workflows documentation.

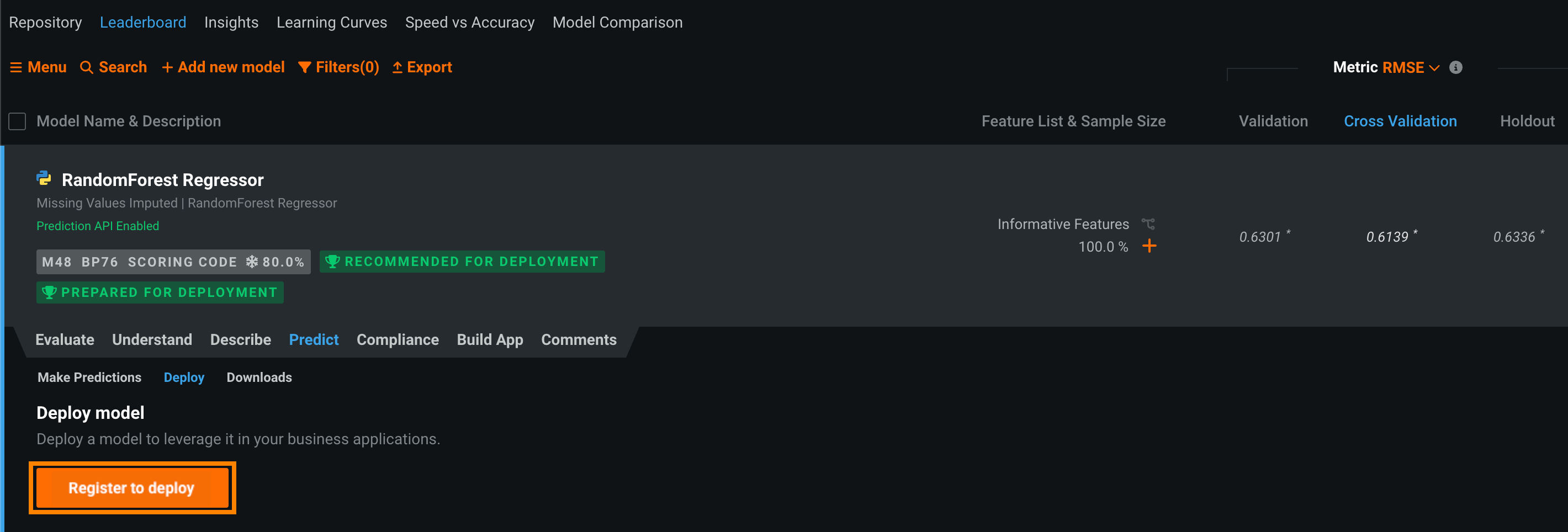

1: Register a model

DataRobot AutoML automatically generates models and displays them on the Leaderboard. The model recommended for deployment appears at the top of the page. You can register this (or any other) model from the Leaderboard. Once the model is registered, you can create a deployment to start making and monitoring predictions.
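The register step can be sketched with a toy in-memory leaderboard and registry. Everything here is hypothetical: the sketch ranks entries by a single validation error (lower is better), which may not match how DataRobot chooses its recommended model, and the class names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class LeaderboardEntry:
    model_id: str
    validation_error: float  # hypothetical single metric; lower is better


@dataclass
class ModelRegistry:
    """Toy stand-in for a model registry, keyed by model ID."""

    entries: Dict[str, LeaderboardEntry] = field(default_factory=dict)

    def register(self, entry: LeaderboardEntry) -> str:
        self.entries[entry.model_id] = entry
        return entry.model_id


def rank(leaderboard: List[LeaderboardEntry]) -> List[LeaderboardEntry]:
    """Order entries best-first, so the top entry plays the 'recommended' role."""
    return sorted(leaderboard, key=lambda e: e.validation_error)


leaderboard = [
    LeaderboardEntry("random_forest", 0.31),
    LeaderboardEntry("gradient_boosting", 0.27),
    LeaderboardEntry("linear_model", 0.40),
]
registry = ModelRegistry()
top = rank(leaderboard)[0]
registry.register(top)  # any other entry could be registered the same way
print(top.model_id)  # prints "gradient_boosting"
```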

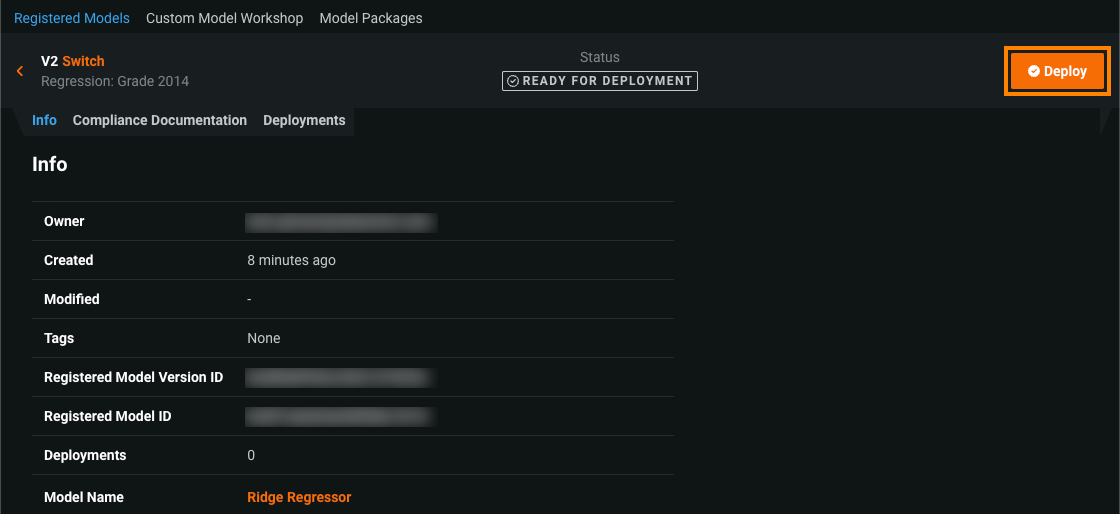

2: Deploy a model

After you've added a model to the Model Registry, you can deploy it at any time to start making and monitoring predictions.

3: Configure a deployment

The deployment information page outlines the capabilities of your current deployment based on the data provided (for example, training data, prediction data, or actuals). It also contains fields where you provide details about the training data, inference data, model, and outcome data.

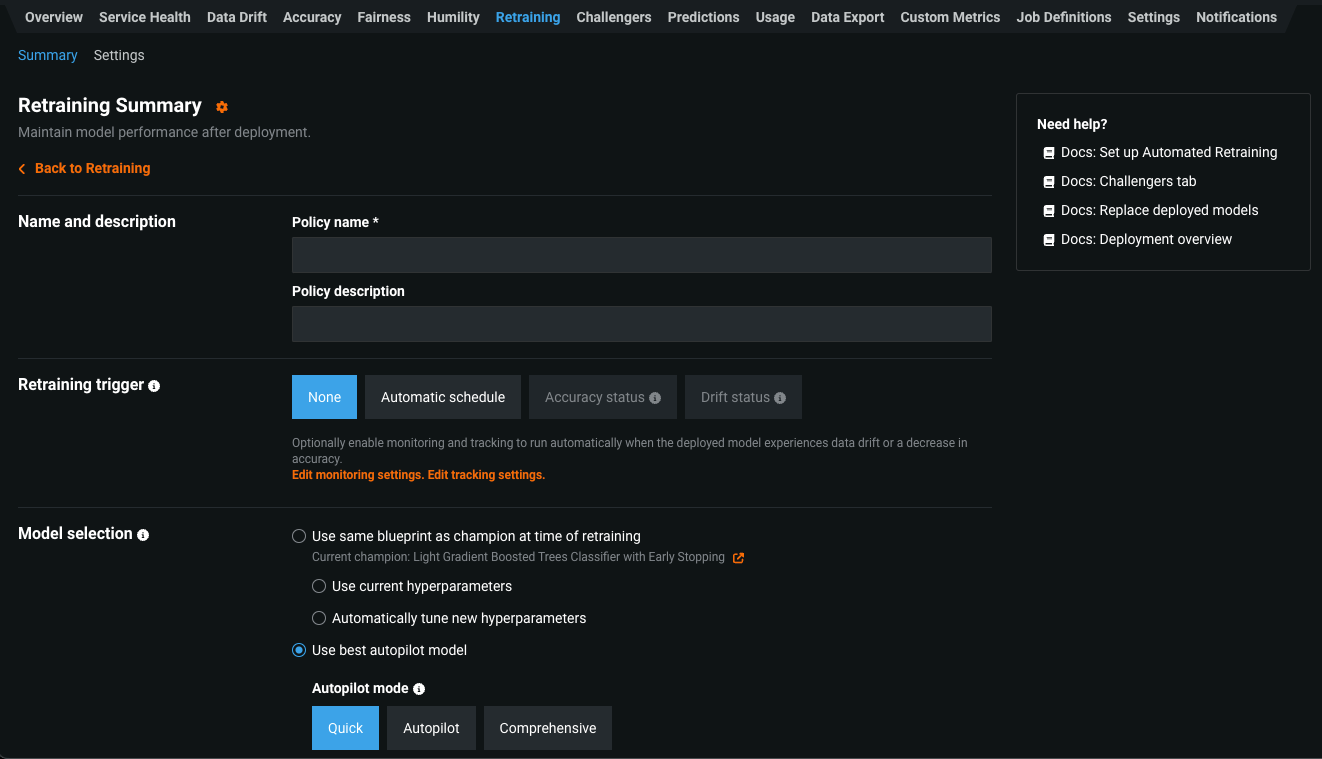

4: Set up model retraining and replacement

To maintain model performance after deployment without extensive manual work, DataRobot provides an automatic retraining capability for deployments. After you provide a retraining dataset registered in the AI Catalog, you can define up to five retraining policies on each deployment. Each policy consists of a trigger, a modeling strategy, modeling settings, and a replacement action. When a policy is triggered, retraining produces a new model based on these settings and notifies you so that you can consider promoting it. If necessary, you can also replace a deployed model manually.
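The policy rules described above (a retraining dataset first, then up to five policies per deployment, each with a trigger, a modeling strategy, modeling settings, and a replacement action) can be sketched as plain data classes. This is a hypothetical model of the rules stated in the text, not the DataRobot API; the trigger, strategy, and action strings are invented placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

MAX_POLICIES = 5  # from the text: up to five retraining policies per deployment


@dataclass
class RetrainingPolicy:
    name: str
    trigger: str  # hypothetical, e.g. "schedule" or "accuracy_decline"
    modeling_strategy: str  # hypothetical, e.g. "same_blueprint"
    modeling_settings: Dict[str, object] = field(default_factory=dict)
    replacement_action: str = "notify"  # hypothetical: only notify, do not replace


@dataclass
class Deployment:
    # Hypothetical stand-in for a retraining dataset registered in the AI Catalog.
    retraining_dataset_id: Optional[str] = None
    policies: List[RetrainingPolicy] = field(default_factory=list)

    def add_policy(self, policy: RetrainingPolicy) -> None:
        if self.retraining_dataset_id is None:
            raise ValueError("provide a retraining dataset before adding policies")
        if len(self.policies) >= MAX_POLICIES:
            raise ValueError(f"a deployment allows at most {MAX_POLICIES} policies")
        self.policies.append(policy)


deployment = Deployment(retraining_dataset_id="catalog-dataset-123")
for i in range(MAX_POLICIES):
    deployment.add_policy(RetrainingPolicy(f"policy-{i}", "schedule", "same_blueprint"))
try:
    deployment.add_policy(RetrainingPolicy("one-too-many", "schedule", "same_blueprint"))
except ValueError as err:
    print(err)  # prints "a deployment allows at most 5 policies"
```

Defaulting the replacement action to a notify-only value mirrors the text's description of retraining producing a model that you then consider promoting.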


5: Monitor model performance

To trust a model for mission-critical operations, users must have confidence in all aspects of model deployment. Model monitoring is the close tracking of ML model performance in production to identify potential issues before they affect the business. Monitoring ranges from checking that the service delivers predictions reliably, promptly, and without errors to ensuring that the predictions themselves are reliable. DataRobot automatically monitors model deployments and offers a central hub for detecting errors and model accuracy decay as early as possible. For each deployment, DataRobot provides a status banner; model-specific information is also available on the Deployments inventory page.
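As one concrete example of a monitoring check feeding a status, the sketch below computes the Population Stability Index (PSI), a common drift metric, between a training histogram and a prediction-data histogram over the same bins, using PSI = Σ (a_i − e_i) ln(a_i / e_i) over bin proportions. It then combines the result with a service error rate into a coarse status. The 0.1 and 0.25 PSI cut-offs are widely used rules of thumb; the 1% error-rate limit and the status labels are hypothetical, and none of these values are presented as DataRobot's defaults.

```python
import math
from typing import Sequence


def psi(expected_counts: Sequence[int], actual_counts: Sequence[int], eps: float = 1e-6) -> float:
    """Population Stability Index: sum of (a - e) * ln(a / e) over bin proportions."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both histograms must use the same bins")
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    value = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # clamp empty bins to avoid log(0)
        a_pct = max(a / a_total, eps)
        value += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return value


def status(error_rate: float, drift_psi: float,
           max_error_rate: float = 0.01, psi_warn: float = 0.1, psi_fail: float = 0.25) -> str:
    """Hypothetical coarse status combining service health and drift."""
    if error_rate > max_error_rate or drift_psi >= psi_fail:
        return "failing"
    if drift_psi >= psi_warn:
        return "at risk"
    return "passing"


training = [50, 50]  # baseline histogram from training data
scoring = [90, 10]   # same bins, recent prediction data
drift = psi(training, scoring)
print(round(drift, 3), status(error_rate=0.002, drift_psi=drift))  # prints 0.879 failing
```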