Purchase card fraud detection¶

In this use case you will build a model that can review 100% of purchase card transactions and identify the riskiest for further investigation via manual inspection. In addition to automating many of the resource-intensive tasks of reviewing transactions, this solution can also provide high-level insights, such as aggregating predictions at the organization level to identify problematic departments and agencies to target for audit or additional interventions.

Sample training data used in this use case: synth_training_fe.csv.zip

To skip ahead to the hands-on sections, go directly to the Data section. Otherwise, the next several paragraphs describe the business justification and problem framing for this use case.

Background¶

Many auditors’ offices and similar fraud shops rely on business rules and manual processes to manage the thousands of purchase card transactions they handle each week. For example, an office might review transactions manually in an Excel spreadsheet, which takes many hours and still misses instances of fraud. These offices need a way to simplify the process drastically while still ensuring that instances of fraud are detected. They also need a way to fold each transaction’s risk score into a front-end decision application that serves as the primary tool for a broad range of users to work through the review backlog.

Key use case takeaways:

Strategy/challenge: Organizations that employ purchase cards for procurement have difficulty monitoring for fraud and misuse, which can comprise 3% or more of all purchases. Examiners spend much of their time manually sifting through mostly safe transactions in search of clear instances of fraud, or applying rules-based approaches that miss risky activity.

Model solution: ML models can review 100% of transactions and identify the riskiest for further investigation. Risky transactions can be aggregated at the organization level to identify problematic departments and agencies to target for audit or additional interventions.

Use case applicability¶

The following table summarizes aspects of this use case:

| Topic | Description |
|---|---|
| Use case type | Public Sector / Banking & Finance / Purchase Card Fraud Detection |
| Target audience | Auditor’s office or fraud investigation unit leaders, fraud investigators or examiners, data scientists |
| Desired outcomes | |
| Metrics/KPIs | |
| Sample dataset | synth_training_fe.csv.zip |

The solution proposed requires the following high-level technical components:

- Extract, Transform, Load (ETL): Cleaning of purchase card data (feed established with bank or processing company, e.g., TSYS) and additional feature engineering.

- Data science: Modeling of fraud risk using AutoML, selection/downweighting of features, tuning of prediction threshold, deployment of model and monitoring via MLOps.

- Front-end app development: Embedding of data ingest and predictions into a front-end application (e.g., Streamlit).

Solution value¶

The primary issues and corresponding opportunities that this use case addresses include:

| Issue | Opportunity |
|---|---|
| Government accountability / trust | Reviewing 100% of procurement transactions to increase public trust in government spending. |
| Undetected fraudulent activity | Identifying 40%+ more risky transactions ($1M+ value, depending on organization size). |
| Staff productivity | Increasing personnel efficiency by manually reviewing only the riskiest transactions. |
| Organizational visibility | Providing high-level insight into areas of risk within the organization. |

Sample ROI calculation¶

Calculating ROI for this use case can be broken down into two main components:

- Time saved by pre-screening transactions

- Detecting additional risky transactions

Note

As with any ROI or valuation exercise, the calculations are "ballpark" figures or ranges to help provide an understanding of the magnitude of the impact, rather than an exact number for financial accounting purposes. It is important to consider the calculation methodology and any uncertainty in the assumptions used as it applies to your use case.

Time savings from pre-screening transactions¶

Consider how much time can be saved by a model automatically detecting True Negatives (correctly identified as "safe"), in contrast to an examiner manually reviewing transactions.

Input Variables¶

| Variable | Value |
|---|---|
| Model's True Negative + False Negative rate. This is the share of transactions that will now be reviewed automatically (False Positives and True Positives still require manual review, and so do not have a time savings component). | 95% |
| Number of transactions per year | 1M |
| Percent (%) of transactions manually reviewed | 25% (assumes the other 75% are not reviewed) |
| Average time spent on manual review (per transaction) | 2 minutes |
| Hourly wage (fully loaded FTE) | $30 |

Calculations¶

| Variable | Formula | Value |
|---|---|---|
| Transactions reviewed manually by examiner today | 1M * 25% | 250,000 |
| Transactions pre-screened by model as not needing review | 1M * 95% | 950,000 |
| Transactions identified by model as needing manual review | 1M - 950,000 | 50,000 |
| Net transactions no longer needing manual review | 250,000 - 50,000 | 200,000 |
| Hours of manual review saved per year | 200,000 * (2 minutes / 60 minutes) | 6,667 hours |
| Cost savings per year | 6,667 * $30 | $200,000 |
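The arithmetic in the table above can be reproduced with a short script, which makes it easier to substitute your own assumptions. This is a minimal sketch; the function and parameter names are illustrative and not part of any product API.

```python
def time_savings(n_tx, manual_rate, auto_cleared_rate, minutes_per_review, hourly_wage):
    """Return (hours_saved, cost_savings) per year for the pre-screening scenario."""
    reviewed_today = n_tx * manual_rate                  # 250,000 in the table
    flagged_by_model = n_tx * (1 - auto_cleared_rate)    # 50,000 still need an examiner
    reviews_avoided = reviewed_today - flagged_by_model  # 200,000
    hours_saved = reviews_avoided * minutes_per_review / 60
    return hours_saved, hours_saved * hourly_wage


hours_saved, cost_savings = time_savings(
    n_tx=1_000_000,
    manual_rate=0.25,
    auto_cleared_rate=0.95,  # model's True Negative + False Negative rate
    minutes_per_review=2,
    hourly_wage=30,
)
print(f"Hours saved per year: {hours_saved:,.0f}")
print(f"Cost savings per year: ${cost_savings:,.0f}")
```

Note that the savings turn negative if the model flags more transactions than examiners review today, which is worth checking before quoting a figure.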

Calculating additional fraud detected annually¶

Input Variables¶

| Variable | Value |
|---|---|
| Number of transactions per year | 1M |
| Percent (%) of transactions manually reviewed | 25% (assumes the other 75% are not reviewed) |
| Average transaction amount | $300 |
| Model True Positive rate | 2% (assume the model detects “risky” not necessarily fraud) |
| Model False Negative rate | 0.5% |
| Percent (%) of risky transactions that are actually fraud | 20% |

Calculations¶

| Variable | Formula | Value |
|---|---|---|
| Number of transactions that are now reviewed by model that were not previously | 1M * (100%-25%) | 750,000 |
| Number of transactions that are accurately identified as risky | 750k * 2% | 15,000 |
| Number of risky transactions that are actually fraud | 15,000 * 20% | 3,000 |
| Value ($) of newly identified fraud | 3,000 * $300 | $900,000 |
| Number of transactions that are False Negatives (for risk of fraud) | 0.5% * 1M | 5,000 |
| Number of False Negatives that would have been manually reviewed | 5,000 * 25% | 1,250 |
| Number of False Negative transactions that are actually fraud | 1,250 * 20% | 250 |
| Value ($) of missed fraud | 250 * $300 | $75,000 |
| Net Value ($) | $900,000 - $75,000 | $825,000 |
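The same calculation can be expressed as a small function so that each assumption is an explicit input. This is a sketch under the table's assumptions; the names are illustrative, and the time savings figure is carried over as a constant from the earlier calculation.

```python
def fraud_value(n_tx, manual_rate, avg_amount, tp_rate, fn_rate, fraud_share):
    """Return (new_fraud_value, missed_fraud_value, net_value) in dollars per year."""
    newly_screened = n_tx * (1 - manual_rate)   # transactions nobody reviewed before
    risky_found = newly_screened * tp_rate      # correctly flagged as risky
    new_fraud_value = risky_found * fraud_share * avg_amount

    false_negatives = n_tx * fn_rate            # risky transactions the model clears
    # Only the share that an examiner would have reviewed counts as a loss.
    missed_fraud_value = false_negatives * manual_rate * fraud_share * avg_amount
    return new_fraud_value, missed_fraud_value, new_fraud_value - missed_fraud_value


found, missed, net = fraud_value(
    n_tx=1_000_000, manual_rate=0.25, avg_amount=300,
    tp_rate=0.02, fn_rate=0.005, fraud_share=0.20,
)
time_savings_value = 200_000  # cost savings per year from the time savings calculation
print(f"Net value of additional fraud detected: ${net:,.0f}")
print(f"Total annual savings estimate: ${net + time_savings_value:,.0f}")
```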

Total annual savings estimate: $1.025M¶

Tip

Communicate the model’s value in a range to convey the degree of uncertainty based on assumptions taken. For the above example, you might convey an estimated range of $0.8M - $1.1M.

Considerations¶

There may be other areas of value or even potential costs to implementing this model.

- The model may find cases of fraud that were missed in the manual review by an examiner.

- There may be additional cost to reviewing False Positives and True Positives that would not otherwise have been reviewed before. That said, this value is typically dwarfed by the time savings from the number of transactions that no longer need review.

- To reduce the value lost from False Negatives, where the model misses fraud that an examiner would have found, a common strategy is to optimize your prediction threshold to reduce False Negatives so that these situations are less likely to occur. Prediction thresholding should closely follow the estimated cost of a False Negative versus a False Positive (in this case, the former is much more costly).
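One way to make the cost-based thresholding idea concrete is to sweep candidate thresholds over a labeled validation set and keep the one with the lowest total error cost. The sketch below is illustrative only: the scores and labels are made up, and the per-error costs are assumptions derived from this example's inputs (a False Positive costs one 2-minute review at $30 per hour, or $1; a False Negative is priced at the $300 average transaction amount).

```python
def total_cost(scores, labels, threshold, cost_fn, cost_fp):
    """Dollar cost of the errors made when flagging every score >= threshold."""
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return fn * cost_fn + fp * cost_fp


def best_threshold(scores, labels, cost_fn, cost_fp):
    """Lowest-cost threshold on a 0.01 grid; ties go to the lowest threshold."""
    candidates = [i / 100 for i in range(1, 100)]
    return min(candidates, key=lambda t: total_cost(scores, labels, t, cost_fn, cost_fp))


# Made-up validation scores (risk probabilities) and labels (1 = risky).
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.60, 0.80, 0.90]
labels = [0, 0, 0, 1, 0, 1, 1, 1]

COST_FN = 300  # assumed: average transaction amount
COST_FP = 1    # assumed: 2 minutes of review at $30 per hour

threshold = best_threshold(scores, labels, COST_FN, COST_FP)
print(threshold, total_cost(scores, labels, threshold, COST_FN, COST_FP))
```

Because a missed risky transaction is priced far above an unnecessary review, the sweep settles on a low threshold that accepts an extra False Positive rather than a single False Negative.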

Data¶

The linked synthetic dataset illustrates a purchase card fraud detection program. Specifically, the model is detecting fraudulent transactions (purchase card holders making non-approved/non-business related purchases).

The unit of analysis in this dataset is one row per transaction. The dataset must contain transaction-level details, with itemization where available:

- If no child items present, one row per transaction.

- If child items present, one row for parent transaction and one row for each underlying item purchased with associated parent transaction features.

Data preparation¶

Consider the following when working with the data:

Define the scope of analysis: For initial model training, the amount of data needed depends on several factors, such as the rate at which transactions occur or the seasonal variability in purchasing and fraud trends. This example case uses 6 months of labeled transaction data (or approximately 300,000 transactions) to build the initial model.

Define the target: There are several options for setting the target, for example:

- risky/not risky (as labeled by an examiner in an audit function).

- fraud/not fraud (as recorded by actual case outcomes).

- The target can also be multiclass/multilabel, with transactions marked as fraud, waste, and/or abuse.

Other data sources: In some cases, other data sources can be joined in to allow for the creation of additional features. This example pulls in data from an employee resource management system as well as timecard data. Each data source must have a way to join back to the transaction level detail (e.g., Employee ID, Cardholder ID).
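As a rough illustration of joining other sources back to transaction-level detail, the sketch below attaches employee tenure and a PTO flag to each transaction by Employee ID. All field names and records are hypothetical; real HR and timecard extracts will differ.

```python
from datetime import date

# Hypothetical extracts; real systems will use different field names.
employees = {
    "E100": {"tenure_years": 6.0},
    "E200": {"tenure_years": 0.5},
}
pto_days = {("E200", date(2023, 3, 7))}  # (employee_id, day off) pairs from timecards

transactions = [
    {"transaction_id": "T1", "employee_id": "E100", "transaction_date": date(2023, 3, 7)},
    {"transaction_id": "T2", "employee_id": "E200", "transaction_date": date(2023, 3, 7)},
    {"transaction_id": "T3", "employee_id": "E999", "transaction_date": date(2023, 3, 8)},
]


def enrich(tx):
    """Left-join employee attributes onto one transaction row."""
    employee = employees.get(tx["employee_id"], {})
    return {
        **tx,
        "tenure_years": employee.get("tenure_years"),  # None when there is no match
        "on_pto": (tx["employee_id"], tx["transaction_date"]) in pto_days,
    }


enriched = [enrich(tx) for tx in transactions]
for row in enriched:
    print(row["transaction_id"], row["tenure_years"], row["on_pto"])
```

The join is deliberately a left join: a transaction with no matching employee record is kept with missing values rather than dropped, so that every transaction still receives a score.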

Features and sample data¶

Most of the features listed below are transaction or item-level fields derived from an industry-standard TSYS (DEF) file format. These fields may also be accessible via bank reporting sources.

To apply this use case in your organization, your dataset should contain, minimally, the following features:

Target:

risky/not risky (or an option as described above)

Required features:

- Transaction ID

- Account ID

- Transaction Date

- Posting Date

- Entity Name (akin to organization, department, or agency)

- Merchant Name

- Merchant Category Code (MCC)

- Credit Limit

- Single Transaction Limit

- Date Account Opened

- Transaction Amount

- Line Item Details

- Acquirer Reference Number

- Approval Code

Suggested engineered features:

- Is_split_transaction

- Account-Merchant Pair

- Entity-MCC pair

- Is_gift_card

- Is_holiday

- Is_high_risk_MCC

- Num_days_to_post

- Item Value Percent of Transaction

- Suspicious Transaction Amount (multiple of $5)

- Less than $2

- Near $2500 Limit

- Suspicious Transaction Amount (whole number)

- Suspicious Transaction Amount (ends in 595)

- Item Value Percent of Single Transaction Limit

- Item Value Percent of Account Limit

- Transaction Value Percent of Account Limit

- Average Transaction Value over last 180 days

- Item Value Percentage of Average Transaction Value
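Several of the row-level features in this list can be derived with simple arithmetic on a single transaction. The following is a sketch, not the feature definitions used to build the sample dataset: the field names are hypothetical, the 5% "near limit" margin is an assumed parameter, and "ends in 595" is interpreted here as an amount ending in 5.95.

```python
from datetime import date


def engineer_features(tx, near_limit_margin=0.05):
    """Derive row-level features from one transaction dict (hypothetical fields)."""
    amount = tx["transaction_amount"]
    cents = round(amount * 100)  # work in integer cents to avoid float remainders
    limit = tx["single_transaction_limit"]
    return {
        "account_merchant_pair": f'{tx["account_id"]}|{tx["merchant_name"]}',
        "num_days_to_post": (tx["posting_date"] - tx["transaction_date"]).days,
        "is_whole_number": int(cents % 100 == 0),
        "is_multiple_of_5": int(cents % 500 == 0),
        "is_less_than_2": int(amount < 2),
        "is_near_limit": int(limit * (1 - near_limit_margin) <= amount <= limit),
        "ends_in_595": int(cents % 1000 == 595),
        "pct_of_single_limit": amount / limit,
        "pct_of_account_limit": amount / tx["credit_limit"],
    }


example = {
    "account_id": "A1",
    "merchant_name": "ACME SUPPLY",
    "transaction_date": date(2023, 3, 6),
    "posting_date": date(2023, 3, 10),
    "transaction_amount": 2495.95,
    "single_transaction_limit": 2500.0,
    "credit_limit": 10000.0,
}
features = engineer_features(example)
print(features)
```

Binary checks are emitted as 0 or 1 so they can be treated consistently as binary features downstream.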

Other helpful features to include are:

- Merchant City

- Merchant ZIP

- Cardholder City

- Cardholder ZIP

- Employee ID

- Sales Tax

- Transaction Timestamp

- Employee PTO or Timecard Data

- Employee Tenure (in current role)

- Employee Tenure (in total)

- Hotel Folio Data

- Other common features

- Suspicious Transaction timing (Employee on PTO)
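History-based features from the lists above, such as Average Transaction Value over last 180 days and Item Value Percentage of Average Transaction Value, need a per-account look-back rather than single-row arithmetic. A minimal sketch follows; the data layout is hypothetical, and excluding the current day from the window is a design assumption made here to avoid leakage.

```python
from datetime import date, timedelta


def trailing_average(history, as_of, window_days=180):
    """Mean amount of an account's transactions in the window before as_of.

    history is a list of (transaction_date, amount) pairs for one account.
    The as_of date itself is excluded so the current transaction does not
    leak into its own baseline.
    """
    start = as_of - timedelta(days=window_days)
    amounts = [amount for day, amount in history if start <= day < as_of]
    return sum(amounts) / len(amounts) if amounts else None


history = [
    (date(2022, 6, 1), 900.0),   # older than 180 days, ignored
    (date(2023, 1, 10), 100.0),
    (date(2023, 2, 20), 200.0),
]
as_of = date(2023, 3, 15)
baseline = trailing_average(history, as_of)

current_amount = 600.0
ratio = current_amount / baseline if baseline else None
print(baseline, ratio)
```

Accounts with no history in the window return None, which leaves the decision about imputation to the modeling step.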

Exploratory Data Analysis (EDA)¶

- Smart downsampling: For large datasets with few labeled samples of fraud, use Smart Downsampling to reduce total dataset size by reducing the size of the majority class. (From the Data page, choose Show advanced options > Smart Downsampling and toggle on Downsample Data.)

- Time aware: For longer time spans, time-aware modeling could be necessary and/or beneficial.

  Check for time dependence in your dataset

  You can create a year+month feature from transaction time stamps and perform modeling to try to predict this. If the top model performs well, it is worthwhile to leverage time-aware modeling.

- Data types: Your data may have transaction features encoded as numerics but they must be transformed to categoricals. For example, while Merchant Category Code (MCC) is a four-digit number used by credit card companies to classify businesses, there is not necessarily an ordered relationship to the codes (e.g., 1024 is not similar to 1025).

  Binary features must have either a categorical variable type or, if numeric, have values of 0 or 1. In the sample data, several binary checks may result from feature engineering, such as is_holiday, is_gift_card, is_whole_num, etc.
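The data preparation behind these checks can be sketched before upload. The example below builds the year+month feature used to test for time dependence, recodes MCC so it cannot be read as a number, and forces a binary check to 0 or 1. The field names are hypothetical, and prefixing the code with "MCC_" is one possible workaround assumed here, not a documented requirement.

```python
from datetime import datetime


def prepare_for_eda(row):
    """Add a year_month column and recode columns whose numeric type is misleading."""
    ts = row["transaction_timestamp"]
    return {
        **row,
        # Candidate target for the time-dependence check described above.
        "year_month": f"{ts.year}-{ts.month:02d}",
        # A text prefix keeps the four-digit code from being parsed as numeric.
        "mcc": f"MCC_{int(row['mcc']):04d}",
        # Binary checks are stored as 0 or 1.
        "is_holiday": int(bool(row["is_holiday"])),
    }


row = {
    "transaction_timestamp": datetime(2023, 3, 7, 14, 30),
    "mcc": 5411,
    "is_holiday": False,
}
prepared = prepare_for_eda(row)
print(prepared["year_month"], prepared["mcc"], prepared["is_holiday"])
```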

Modeling and insights¶

After cleaning the data, performing feature engineering, uploading the dataset to DataRobot (AI Catalog or direct upload), and performing the EDA checks above, modeling can begin. For rapid results/insights, Quick Autopilot mode presents the best ratio of modeling approaches explored and time to results. Alternatively, use full Autopilot or Comprehensive modes to perform thorough model exploration tailored to the specific dataset and project type. Once the appropriate modeling mode has been selected from the dropdown, start modeling.

The following sections describe the insights available after a model is built.

Model blueprint¶

The model blueprint, shown on the Leaderboard and sorted by a “survival of the fittest” scheme ranking by accuracy, shows the overall approach to model pipeline processing. The example below uses smart processing of raw data (e.g., text encoding, missing value imputation) and a robust algorithm based on a decision tree process to predict transaction riskiness. The resulting prediction is a fraud probability (0-100).

Feature Impact¶

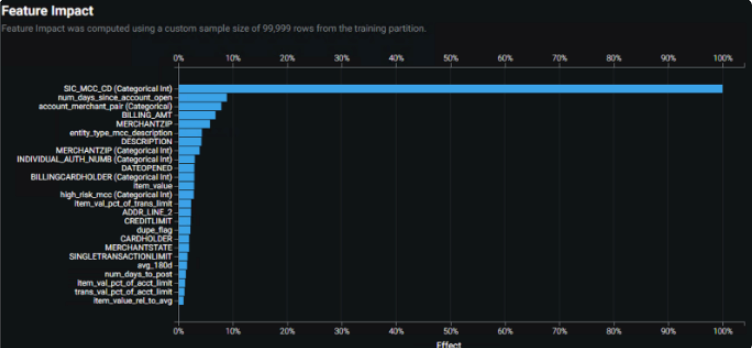

Feature Impact shows, at a high level, which features are driving model decisions.

The Feature Impact chart above indicates:

- Merchant information (e.g., MCC and its textual description) tends to be impactful and drives model predictions.

- Categorical and textual information tend to have more impact than numerical features.

The chart provides a clear indication of over-dependence on at least one feature: Merchant Category Code (MCC). To effectively downweight the dependence, consider creating a feature list with this feature excluded and/or blending top models. These steps can balance feature dependence with comparable model performance. For example, this use case creates an additional feature list that excludes the MCC, as well as an additional engineered feature based on MCCs recognized as high risk by SMEs.
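The data side of this approach can be sketched as follows: derive a high-risk flag from the MCC, then define a feature list that leaves out the raw code. The MCC values below are placeholders for illustration only (the real list should come from SMEs), and the feature names are hypothetical; creating the feature list in the modeling platform itself is not shown.

```python
# Placeholder codes for illustration only; the real list should come from SMEs.
HIGH_RISK_MCCS = {"5813", "5921", "5944", "7995"}


def add_high_risk_flag(row):
    """Add a 0/1 flag indicating whether the row's MCC is on the high-risk list."""
    return {**row, "is_high_risk_mcc": int(str(row["mcc"]) in HIGH_RISK_MCCS)}


all_features = [
    "mcc",
    "merchant_name",
    "transaction_amount",
    "is_gift_card",
    "is_high_risk_mcc",
]
# Feature list that drops the raw MCC but keeps the SME-informed flag.
features_without_mcc = [name for name in all_features if name != "mcc"]

print(add_high_risk_flag({"mcc": 7995}))
print(features_without_mcc)
```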

Also, starting with a large number of engineered features may result in a Feature Impact plot that shows minimal amounts of reliance on many of the features. Retraining with reduced features may result in increased accuracy and will also reduce the computational demand of the model.

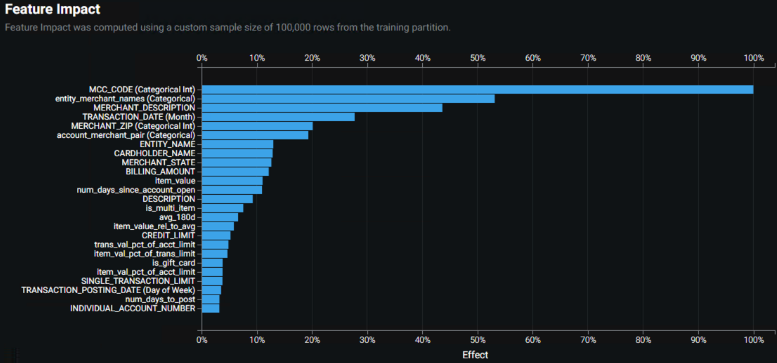

The final solution used a blended model created from combining the top model from each of these two modified feature lists. It achieved comparable accuracy to the MCC-dependent model but with a more balanced Feature Impact plot. Compare the plot below to the one above:

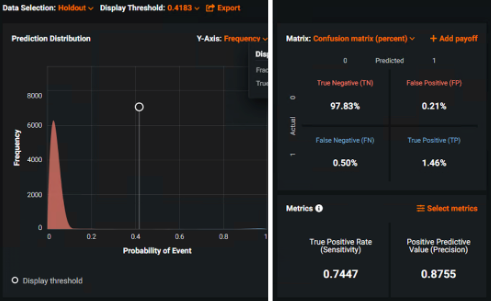

Confusion Matrix¶

Leverage the ROC Curve to tune the prediction threshold based on, for example, the auditor’s office’s desired risk tolerance and capacity for review. The Confusion Matrix and Prediction Distribution graph provide excellent tools for experimenting with threshold values and seeing the effects on False Positive and False Negative counts/percentages. Because the model marks transactions as risky and in need of further review, the preferred threshold prioritizes minimizing False Negatives.

You can also use the ROC Curve tools to explain the tradeoff between optimization strategies. In this example, the solution mostly minimizes False Negatives (e.g., missed fraud) while slightly increasing the number of transactions needing review.

You can see in the example above that the model outputs a probability of risk.

- Anything above the set probability threshold marks the transaction as risky (or needs review) and vice versa.

- Most predictions have low probability of being risky (left, Prediction Distribution graph).

- The best performance evaluators are Sensitivity and Precision (right, Confusion Matrix chart).

- The default Prediction Distribution display threshold of 0.41 balances the False Positive and False Negative amounts (adjustable depending on risk tolerance).
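To see how the threshold drives the Confusion Matrix, the counts and the two evaluators named above can be computed directly from scored, labeled data. This sketch uses made-up scores and labels, and it treats a score equal to the threshold as risky, which is an assumption about the boundary case.

```python
def confusion_counts(scores, labels, threshold):
    """Count outcomes when every score at or above threshold is flagged as risky."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for score, label in zip(scores, labels):
        if score >= threshold:
            counts["tp" if label == 1 else "fp"] += 1
        else:
            counts["fn" if label == 1 else "tn"] += 1
    return counts


def sensitivity(c):
    """Share of truly risky transactions that were flagged."""
    positives = c["tp"] + c["fn"]
    return c["tp"] / positives if positives else 0.0


def precision(c):
    """Share of flagged transactions that were truly risky."""
    flagged = c["tp"] + c["fp"]
    return c["tp"] / flagged if flagged else 0.0


# Made-up risk probabilities and examiner labels (1 = risky).
scores = [0.02, 0.10, 0.30, 0.45, 0.50, 0.70, 0.95]
labels = [0, 0, 1, 0, 1, 1, 1]

for threshold in (0.41, 0.25):
    c = confusion_counts(scores, labels, threshold)
    print(threshold, c, sensitivity(c), precision(c))
```

In this toy data, lowering the threshold from 0.41 to 0.25 removes the one False Negative at the price of one more transaction to review, which mirrors the tradeoff described in this section.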

Prediction Explanations¶

With each transaction risk score, DataRobot provides two associated Risk Codes generated by Prediction Explanations. These Risk Codes inform users which two features had the highest effect on that particular risk score and their relative magnitude. Inclusion of Prediction Explanations helps build trust by communicating the "why" of a prediction, which aids in confidence-checking model output and also identifying trends.
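Conceptually, a pair of Risk Codes is the two explanations with the largest magnitude for a given prediction. The sketch below illustrates that selection on a hypothetical record layout (a list of feature and strength pairs); it does not reproduce the actual response format of the prediction API.

```python
def top_risk_codes(explanations, n=2):
    """Return the n (feature, strength) pairs with the largest absolute strength."""
    ranked = sorted(explanations, key=lambda e: abs(e["strength"]), reverse=True)
    return [(e["feature"], e["strength"]) for e in ranked[:n]]


# Hypothetical explanations for one scored transaction.
explanations = [
    {"feature": "mcc", "strength": 0.42},
    {"feature": "num_days_to_post", "strength": -0.05},
    {"feature": "is_gift_card", "strength": 0.31},
    {"feature": "transaction_amount", "strength": -0.35},
]

print(top_risk_codes(explanations))
```

Keeping the sign alongside the feature name lets a reviewer see whether a feature pushed the score up or down.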

Predict and deploy¶

Use the tools above (blueprints on the Leaderboard, Feature Impact results, Confusion/Payoff matrices) to determine the best blueprint for the data/use case.

Deploy the model that serves risk score predictions (and accompanying prediction explanations) for each transaction on a batch schedule to a database (e.g., Mongo) that your end application reads from.

Confirm the ETL and prediction scoring frequency with your stakeholders. Often the TSYS DEF file is provided on a daily basis and contains transactions from several days prior to posting date. Daily scoring of the DEF is generally acceptable, because the post-transaction review of purchases does not need to be executed in real time. As a point of reference, though, some organizations take up to 30 days post-purchase to review transactions.

A no-code or Streamlit app can be useful for showing aggregate results of the model (e.g., risky transactions at an entity level). Consider building a custom application where stakeholders can interact with the predictions and record the outcomes of the investigation. A useful app will allow for intuitive and/or automated data ingestion and the review of individual transactions marked as risky, as well as organization- and entity-level aggregation.
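The entity-level aggregation such an app would display can be sketched as a simple roll-up of scored transactions. The field names, entities, and scores below are hypothetical, and the 0.41 threshold is borrowed from the example earlier in this use case.

```python
from collections import defaultdict


def summarize_by_entity(scored, threshold):
    """Roll scored transactions up to one summary row per entity, riskiest first."""
    totals = defaultdict(lambda: {"transactions": 0, "risky": 0, "risky_amount": 0.0})
    for tx in scored:
        entry = totals[tx["entity_name"]]
        entry["transactions"] += 1
        if tx["risk_score"] >= threshold:
            entry["risky"] += 1
            entry["risky_amount"] += tx["transaction_amount"]
    summary = [
        {"entity_name": name, **entry, "risky_share": entry["risky"] / entry["transactions"]}
        for name, entry in totals.items()
    ]
    return sorted(summary, key=lambda r: r["risky_share"], reverse=True)


# Hypothetical scored transactions read back from the prediction store.
scored = [
    {"entity_name": "Parks", "risk_score": 0.90, "transaction_amount": 500.0},
    {"entity_name": "Parks", "risk_score": 0.10, "transaction_amount": 20.0},
    {"entity_name": "Transit", "risk_score": 0.20, "transaction_amount": 40.0},
    {"entity_name": "Transit", "risk_score": 0.30, "transaction_amount": 60.0},
    {"entity_name": "Transit", "risk_score": 0.60, "transaction_amount": 100.0},
]

summary = summarize_by_entity(scored, threshold=0.41)
for row in summary:
    print(row)
```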

Monitoring and management¶

Fraudulent behavior is dynamic as new schemes replace ones that have been mitigated. It is crucial to capture ground truth from SMEs/auditors to track model accuracy and verify the effectiveness of the model. Data drift, as well as concept drift, can pose significant risks.
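As an illustration of what a data drift check measures, the sketch below computes the Population Stability Index (PSI), one commonly used drift statistic, between binned counts of a feature at training time and in recent scoring data. PSI is used here as a stand-in; the drift metric reported by your monitoring tooling may be defined differently, and the bins and counts are made up.

```python
import math


def population_stability_index(baseline_counts, recent_counts, floor=1e-6):
    """PSI between two binned distributions that share the same bin edges."""
    base_total = sum(baseline_counts)
    recent_total = sum(recent_counts)
    psi = 0.0
    for base, recent in zip(baseline_counts, recent_counts):
        # Floor the proportions so empty bins do not produce log(0).
        p = max(base / base_total, floor)
        q = max(recent / recent_total, floor)
        psi += (q - p) * math.log(q / p)
    return psi


# Made-up counts of transaction amounts per bin (e.g., <$50, $50-500, $500-2000, >$2000).
training_counts = [700, 200, 80, 20]
recent_counts = [500, 250, 150, 100]

print(population_stability_index(training_counts, training_counts))
print(population_stability_index(training_counts, recent_counts))
```

An unchanged distribution yields zero, and the value grows as recent data moves away from the training baseline, which makes it a convenient number to track per feature over time.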

For fraud detection, the process of retraining a model may require additional batches of data manually annotated by auditors. Communicate this process clearly and early in the project setup phase. Champion/challenger analysis suits this use case well and should be enabled.

For models trained with target data labeled as risky (as opposed to confirmed fraud), it could be useful in the future to explore modeling confirmed fraud as the amount of training data grows. The model threshold serves as a confidence knob that may increase across model iterations while maintaining low false negative rates. Moving to a model that predicts the actual outcome as opposed to risk of the outcome also addresses the potential difficulty when retraining with data primarily labeled as the actual outcome (collected from the end-user app).