DataRobot Classic in 5

Building models in DataRobot—regardless of the data handling, modeling options, prediction methods, and deployment actions—comes down to the same five basic actions. Review the steps below to get a quick understanding of how to be successful in DataRobot Classic.

Datasets for testing

You can use the following hospital readmissions demo datasets to try out DataRobot functionality:

Download training data Download scoring data

These sample datasets are provided by BioMed Research International to study readmissions across 70,000 inpatients with diabetes. The researchers of the study collected this data from the Health Facts database provided by Cerner Corporation, which is a collection of clinical records across providers in the United States. Health Facts allows organizations that use Cerner’s electronic health system to voluntarily make their data available for research purposes. All the data was cleansed of PII in compliance with HIPAA.

1: Set up a new DataRobot project

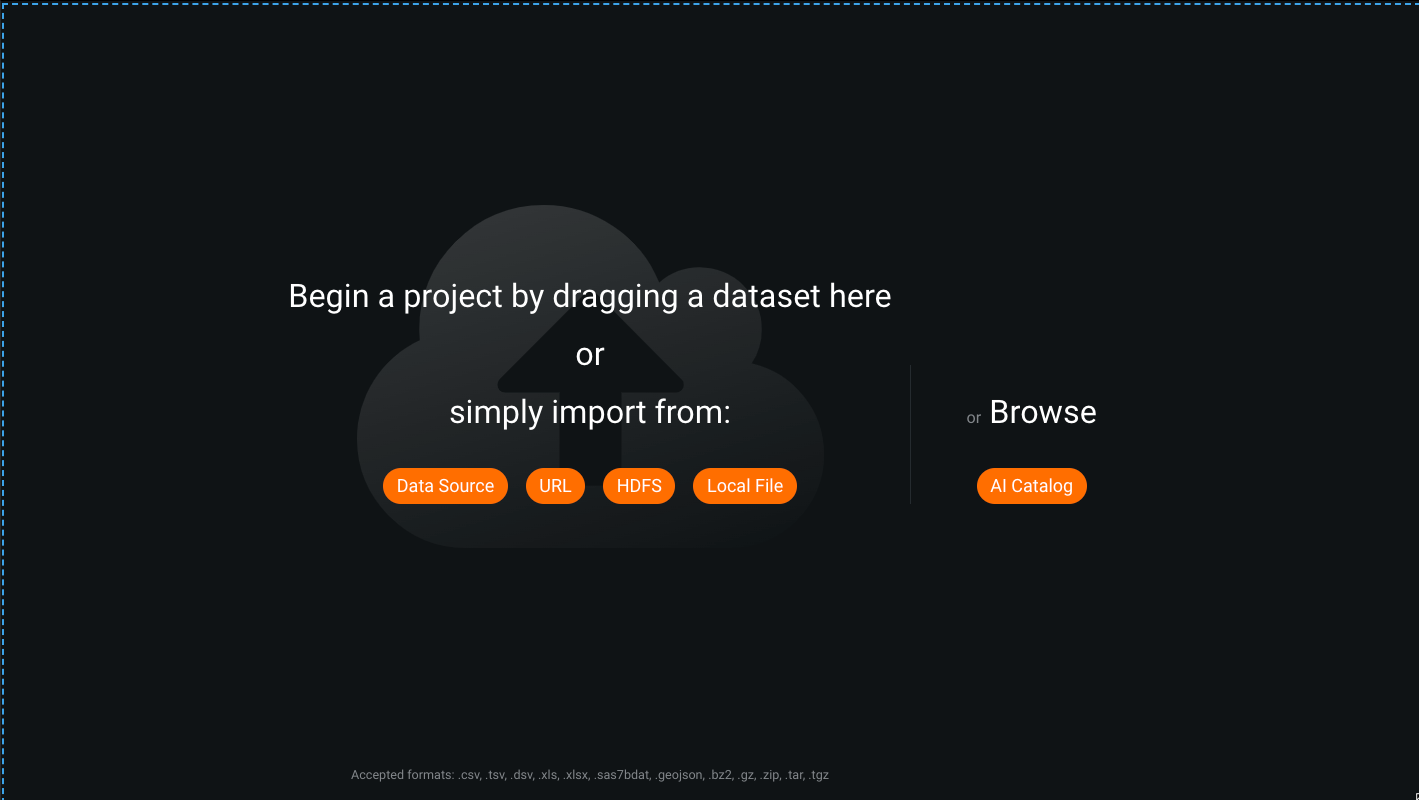

To create a new DataRobot project, add a dataset using any of the methods on the new project page: from the AI Catalog, directly from a connected data source, or as a local file.

To begin modeling, type the name of the target and configure the optional settings described below:

| | Element | Description |
|---|---|---|
| 1 | What would you like to predict? | Type the name of the target feature (the column in the dataset you would like to predict) or click Use as target next to the name in the feature list below. |
| 2 | No target? | Click to build an unsupervised model. |
| 3 | Secondary datasets | (Optional) Add a secondary dataset by clicking + Add datasets. DataRobot performs Feature Discovery and creates relationships to the datasets. |
| 4 | Feature list | Displays the feature list to be used for training models. |
| 5 | Optimization Metric | (Optional) Select an optimization metric to score models. DataRobot automatically selects a metric based on the target feature you select and the type of modeling project (for example, regression, classification, multiclass, or unsupervised). |
| 6 | Show advanced options | Specify modeling options such as partitioning, bias and fairness, and optimization metric (click Additional). |
| 7 | Time-Aware Modeling | Build time-aware models based on time features. |

Scroll down to see the list of available features. (Optional) Select a Feature List to be used for model training. Click View info in the Data Quality Assessment area on the right to investigate the quality of features.

After specifying the target feature, you can select a Modeling Mode to instruct DataRobot to build more or fewer models, then click Start to begin modeling.

Tip

For large datasets, see the section on early target selection.

Alternatively, before starting, you can set a variety of advanced options to fine-tune your project's model-building process.

DataRobot prepares the project (EDA2) and starts running models. A progress indicator for running models is displayed in the Worker Queue on the right of the screen. Depending on the size of the dataset, it may take several minutes to complete the modeling process. The results of the modeling process are displayed in the model Leaderboard, with the best-performing models (based on the chosen optimization metric) at the top of the list.
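The Leaderboard ordering described above can be pictured with a small sketch. This is not DataRobot code or its API: the `ModelScore` class, the `rank_models` helper, the metric-direction table, and the sample scores are all hypothetical, chosen only to show that "best-performing" depends on whether the chosen metric is minimized or maximized.

```python
from dataclasses import dataclass

# Hypothetical direction table for illustration; not an official or complete list.
LOWER_IS_BETTER = {"LogLoss": True, "RMSE": True, "AUC": False}


@dataclass
class ModelScore:
    name: str
    score: float  # validation score on the chosen optimization metric


def rank_models(models, metric):
    """Return models ordered best-first for the given optimization metric."""
    if metric not in LOWER_IS_BETTER:
        raise ValueError(f"Unknown metric direction for {metric!r}")
    # Ascending sort when lower is better, descending otherwise.
    return sorted(models, key=lambda m: m.score, reverse=not LOWER_IS_BETTER[metric])


# Made-up scores, used only to exercise the sort.
candidates = [
    ModelScore("Gradient boosted trees", 0.612),
    ModelScore("Regularized logistic regression", 0.634),
    ModelScore("Random forest", 0.621),
]

leaderboard = rank_models(candidates, "LogLoss")
for position, model in enumerate(leaderboard, start=1):
    print(position, model.name, model.score)
```

With an error-style metric such as LogLoss, the lowest score lands at the top. With a metric such as AUC, the same helper would reverse the order.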

2: Review model details

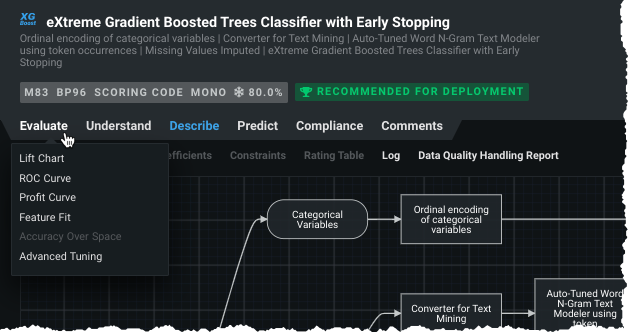

On the Leaderboard, click a model to display the model blueprint and access the many tabs available for investigating model information and insights.

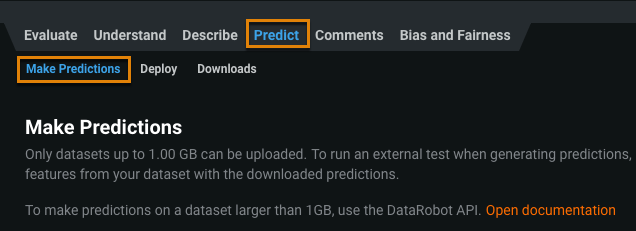

You can test and generate predictions from any model manually, without deploying it to production, via Predict > Make Predictions. Provide a dataset by dragging and dropping a file onto the screen, or use a method from the dropdown. Once the data upload completes, click Compute Predictions to generate predictions for the new dataset. When the computation finishes, click Download to view the results in a CSV file.

3: Deploy a model

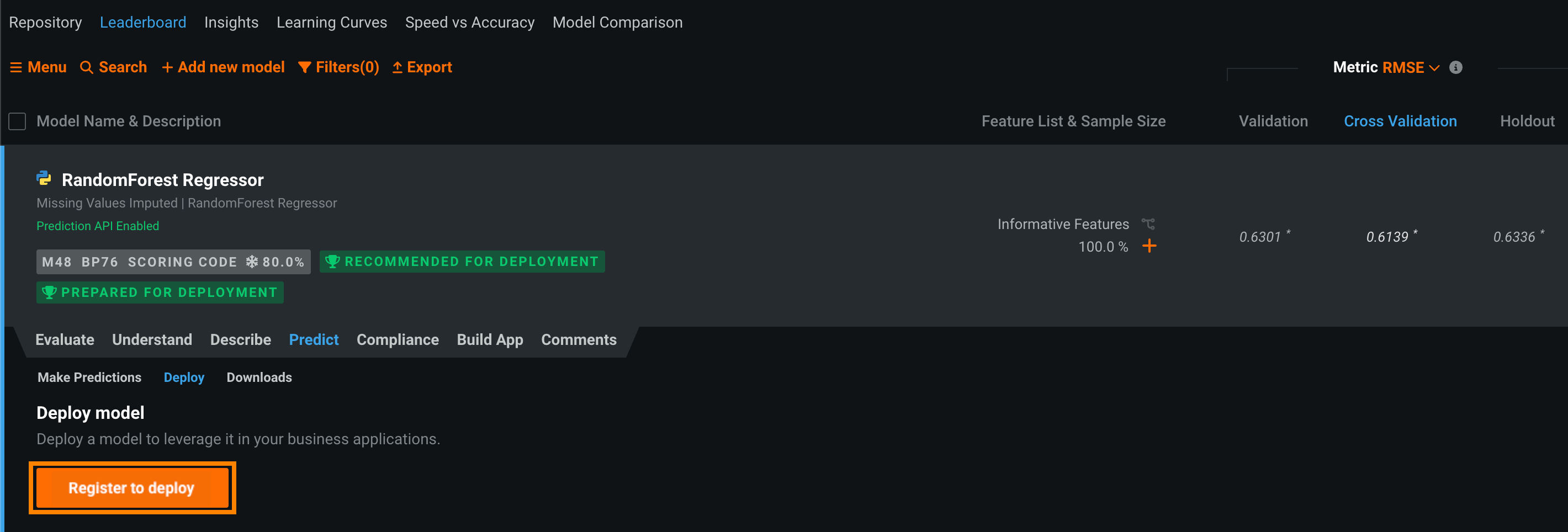

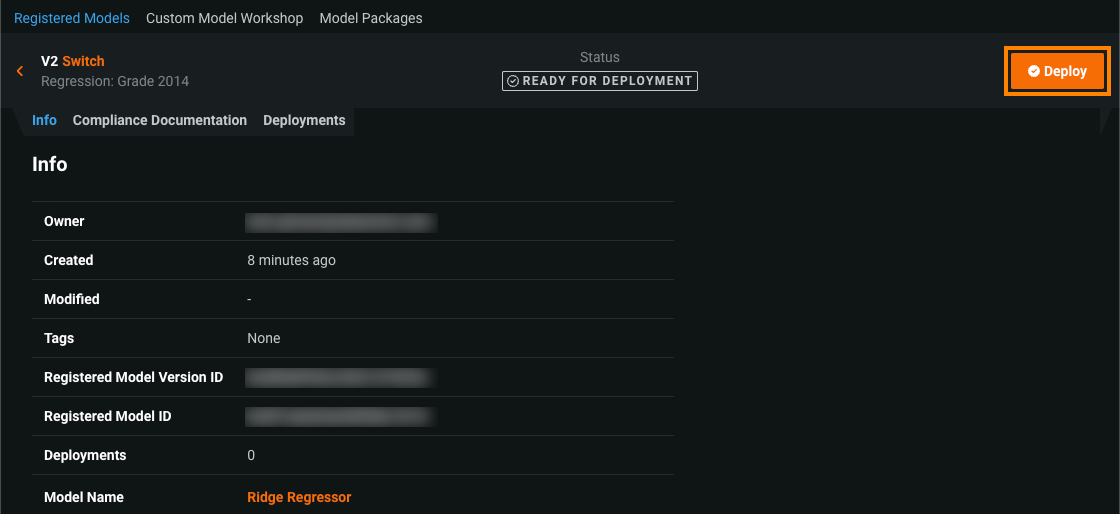

DataRobot AutoML automatically generates models and displays them on the Leaderboard. The model recommended for deployment appears at the top of the page. You can register this (or any other) model from the Leaderboard. Once the model is registered, you can create a deployment to start making and monitoring predictions.

Learn more

Learn how to register a model.

After you've added a model to the Model Registry, you can deploy it at any time to start making and monitoring predictions.

Learn more

Learn how to deploy a model.

The deployment information page outlines the capabilities of your current deployment based on the data provided, for example, training data, prediction data, or actuals. It populates fields for you to provide details about the training data, inference data, model, and your outcome data.

Learn more

Learn how to configure a deployment.

4: Set up model retraining and replacement

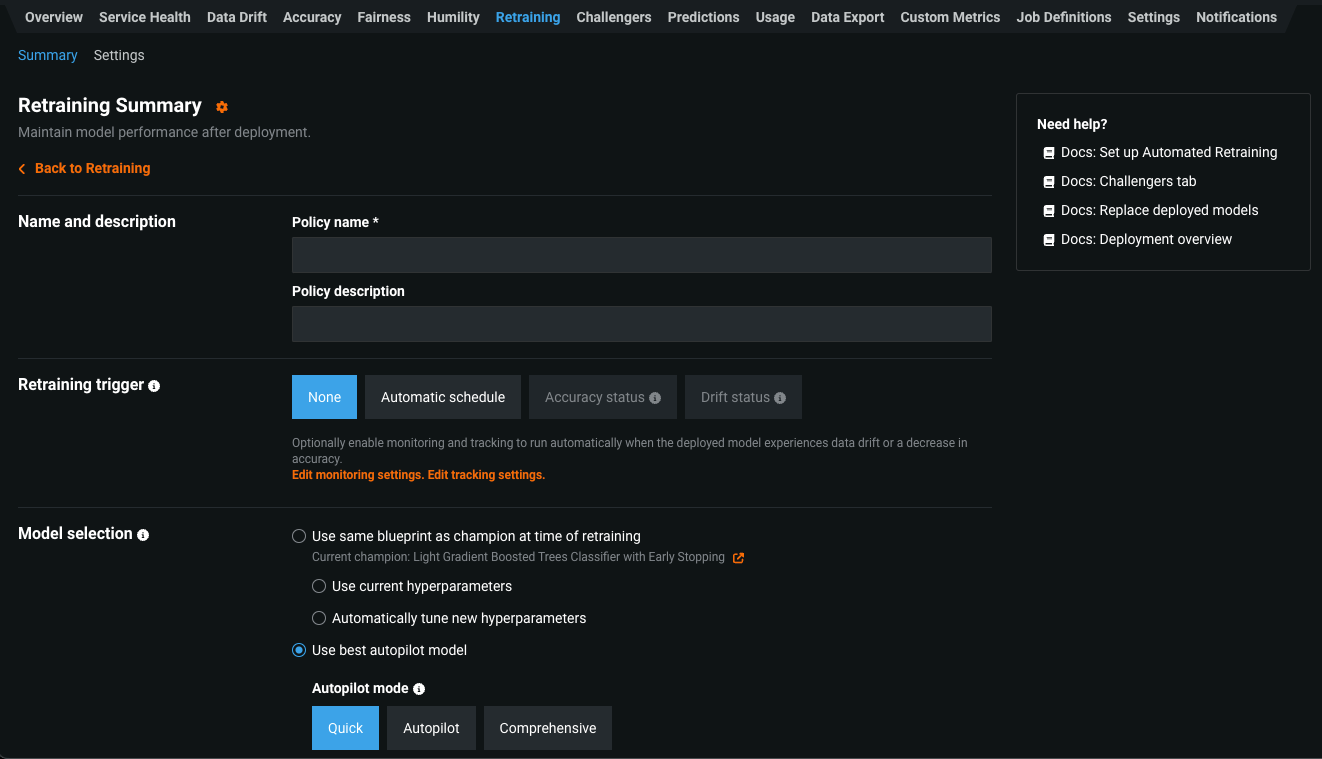

To maintain model performance after deployment without extensive manual work, DataRobot provides an automatic retraining capability for deployments. Upon providing a retraining dataset registered in the AI Catalog, you can define up to five retraining policies on each deployment, each consisting of a trigger, a modeling strategy, modeling settings, and a replacement action. When triggered, retraining will produce a new model based on these settings and notify you to consider promoting it. If necessary, you can manually replace a deployed model.
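The structure of a retraining policy described above can be sketched as a plain data model. This is an illustration only, not the DataRobot API: the class names, field types, and example values are assumptions. Only the four components and the five-policy limit come from the text.

```python
from dataclasses import dataclass, field

MAX_POLICIES_PER_DEPLOYMENT = 5  # limit stated in the paragraph above


@dataclass
class RetrainingPolicy:
    # The four components named in the text; the types are assumptions.
    trigger: str
    modeling_strategy: str
    modeling_settings: dict
    replacement_action: str


@dataclass
class DeploymentPolicies:
    """Hypothetical container that enforces the per-deployment limit."""
    policies: list = field(default_factory=list)

    def add(self, policy):
        if len(self.policies) >= MAX_POLICIES_PER_DEPLOYMENT:
            raise ValueError("A deployment can hold at most five retraining policies")
        self.policies.append(policy)


# Example values are placeholders, not real DataRobot option names.
deployment_policies = DeploymentPolicies()
deployment_policies.add(
    RetrainingPolicy(
        trigger="monthly schedule",
        modeling_strategy="rerun modeling on the retraining dataset",
        modeling_settings={"retraining_dataset": "AI Catalog entry"},
        replacement_action="notify and wait for manual promotion",
    )
)
print(len(deployment_policies.policies))
```

Keeping the limit check inside `add` means a sixth policy is rejected at the point where it is defined, rather than later when a trigger fires.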

Learn more

Learn how to set up retraining and replacement.

5: Monitor model performance

To trust a model for mission-critical operations, users must have confidence in all aspects of model deployment. Model monitoring is the close tracking of the performance of ML models in production used to identify potential issues before they impact the business. Monitoring ranges from whether the service is reliably providing predictions in a timely manner and without errors to ensuring the predictions themselves are reliable. DataRobot automatically monitors model deployments and offers a central hub for detecting errors and model accuracy decay as soon as possible. For each deployment, DataRobot provides a status banner—model-specific information is also available on the Deployments inventory page.
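The two sides of monitoring mentioned above, service reliability and prediction reliability, can be sketched as a simple check. This does not reflect how DataRobot computes deployment status. The `DeploymentStats` fields, the `find_issues` helper, and every threshold are hypothetical values picked for the example.

```python
from dataclasses import dataclass


@dataclass
class DeploymentStats:
    requests: int
    errors: int
    median_latency_ms: float
    baseline_accuracy: float  # accuracy measured at training time
    current_accuracy: float   # accuracy measured against recent actuals


def find_issues(stats, max_error_rate=0.01, max_latency_ms=500.0, max_accuracy_drop=0.05):
    """Return a list of human-readable issues; an empty list means healthy."""
    issues = []
    # Service reliability: are predictions returned without errors and on time?
    error_rate = stats.errors / stats.requests if stats.requests else 0.0
    if error_rate > max_error_rate:
        issues.append(f"service: error rate {error_rate:.2%} exceeds limit")
    if stats.median_latency_ms > max_latency_ms:
        issues.append(f"service: median latency {stats.median_latency_ms:.0f} ms exceeds limit")
    # Prediction reliability: has accuracy decayed since training?
    drop = stats.baseline_accuracy - stats.current_accuracy
    if drop > max_accuracy_drop:
        issues.append(f"accuracy: dropped {drop:.2f} from baseline")
    return issues


healthy = DeploymentStats(10_000, 20, 120.0, 0.80, 0.79)
degraded = DeploymentStats(10_000, 400, 900.0, 0.80, 0.70)
print(find_issues(healthy))
print(find_issues(degraded))
```

Returning the individual issues rather than a single pass or fail flag makes it possible to tell a service problem apart from accuracy decay.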

Learn more

Learn how to monitor model performance.