Path-based routing to PPSs on AWS

Using DataRobot MLOps, you can deploy DataRobot models into your own Kubernetes clusters (managed or Self-Managed AI Platform) using Portable Prediction Servers (PPSs). A PPS is a Docker container that packages a DataRobot model with a monitoring agent and can be deployed using container orchestration tools such as Kubernetes. Once a model is deployed this way, you can still use the monitoring and governance capabilities of MLOps.

When deploying multiple PPSs in the same Kubernetes cluster, you often want a single IP address as the entry point to all of the PPSs. A typical approach is path-based routing, which you can implement with a Kubernetes Ingress controller. Available options include Traefik, HAProxy, and NGINX.

The following sections describe how to use the NGINX Ingress controller for path-based routing to PPSs deployed on Amazon EKS.

Before you start

There are some prerequisites to interacting with AWS and the underlying services. If any (or all) of these tools are already installed and configured for you, you can skip the corresponding steps. See Getting started with Amazon EKS – eksctl for detailed instructions.

Install necessary tools

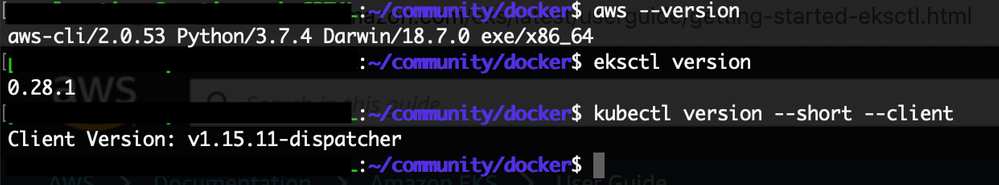

- Install the AWS CLI, version 2.

- Configure your AWS CLI credentials.

- Install eksctl.

- Install and configure kubectl (CLI for Kubernetes clusters).

- Check that you successfully installed the tools.
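One way to carry out the final check is a short script. The sketch below is not part of the original instructions. It assumes the tools are exposed under the command names `aws`, `eksctl`, and `kubectl`, and it only checks that they can be found on `PATH`; it does not verify versions or credentials. The function name `find_missing_tools` is illustrative.

```python
import shutil

# Command names assumed to be on PATH after installation (an assumption of this sketch).
REQUIRED_TOOLS = ("aws", "eksctl", "kubectl")


def find_missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = find_missing_tools()
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All required tools were found on PATH.")
```

The `which` parameter exists only so the lookup can be replaced in tests; in normal use, call `find_missing_tools()` with no arguments.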

Set up PPS containers

This procedure assumes that you have created and locally tested PPS containers for DataRobot AutoML models and pushed them to Amazon Elastic Container Registry (ECR). See Deploy models on AWS EKS for instructions.

This walkthrough is based on two PPSs built from models for a regression use case and an image classification use case, trained on a Kaggle housing prices dataset and the Food 101 dataset, respectively.

The first PPS (housing prices) contains an eXtreme Gradient Boosted Trees Regressor (Gamma Loss) model. The second PPS (binary image classification: hot dog or not hot dog) contains a SqueezeNet Image Pretrained Featurizer + Keras Slim Residual Neural Network Classifier using Training Schedule model.

The latter model was trained using DataRobot Visual AI functionality.

Create an Amazon EKS cluster

With the Docker images stored in ECR, you can spin up an Amazon EKS cluster. The EKS cluster needs a VPC with either of the following:

- Two public subnets and two private subnets

- Three public subnets

Amazon EKS requires subnets in at least two Availability Zones. A VPC with public and private subnets is recommended so that Kubernetes can create public load balancers in the public subnets to control traffic to the pods that run on nodes in private subnets.

- (Optional) Create or choose two public and two private subnets in your VPC. Make sure that “Auto-assign public IPv4 address” is enabled for the public subnets.

Note

The eksctl tool creates all necessary subnets behind the scenes if you don’t provide the corresponding `--vpc-private-subnets` and `--vpc-public-subnets` parameters.

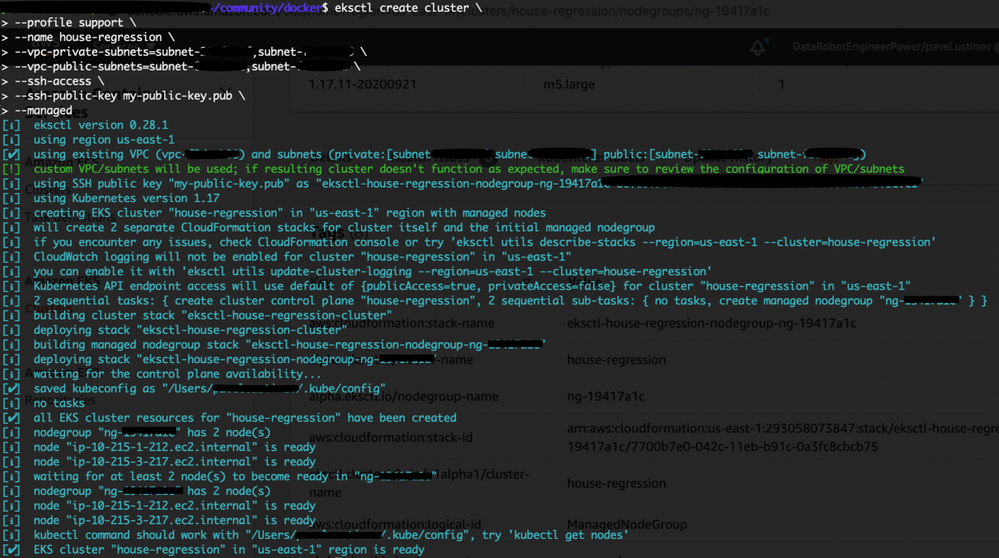

- Create the cluster.

      eksctl create cluster \
        --name multi-app \
        --vpc-private-subnets=subnet-XXXXXXX,subnet-XXXXXXX \
        --vpc-public-subnets=subnet-XXXXXXX,subnet-XXXXXXX \
        --nodegroup-name standard-workers \
        --node-type t3.medium \
        --nodes 2 \
        --nodes-min 1 \
        --nodes-max 3 \
        --ssh-access \
        --ssh-public-key my-public-key.pub \
        --managed

Notes

* The `--managed` parameter enables [Amazon EKS-managed nodegroups](https://docs.aws.amazon.com/eks/latest/userguide/managed-node-groups.html). This feature automates the provisioning and lifecycle management of nodes (EC2 instances) for Amazon EKS Kubernetes clusters. You can provision optimized groups of nodes for your clusters, and EKS keeps those nodes up to date with the latest Kubernetes and host OS versions. The **eksctl** tool lets you choose the specific size and instance type family via command line flags or config files.

* Although `--ssh-public-key` is optional, it is highly recommended that you specify it when you create your node group with a cluster. This option enables SSH access to the nodes in your managed node group. Enabling SSH access allows you to connect to your instances and gather diagnostic information if there are issues. You cannot enable remote access after the node group is created.

Cluster provisioning usually takes between 10 and 15 minutes and results in the following:

-

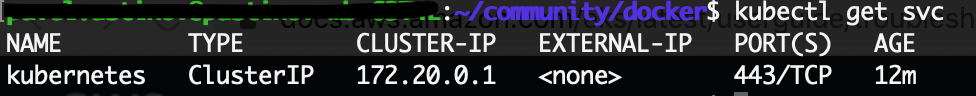

When your cluster is ready, test that your kubectl configuration is correct:

kubectl get svc

Deploy the NGINX Ingress controller

AWS Elastic Load Balancing supports three types of load balancers: Application Load Balancers (ALB), Network Load Balancers (NLB), and Classic Load Balancers (CLB). See Elastic Load Balancing features for details.

The NGINX Ingress controller uses NLB on AWS. NLB is best suited for load balancing of TCP, UDP, and TLS traffic when extreme performance is required. Operating at the connection level (Layer 4 of the OSI model), NLB routes traffic to targets within Amazon VPC and is capable of handling millions of requests per second while maintaining ultra-low latencies. NLB is also optimized to handle sudden and volatile traffic patterns.

Deploy the NGINX Ingress controller (this manifest file also launches the NLB):

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/aws/deploy.yaml

Create and deploy services to Kubernetes

-

Create a Kubernetes namespace:

kubectl create namespace aws-tlb-namespace -

Save the following contents to a

yamlfile on your local machine (in this case,house-regression-deployment.yaml), replacing the values for your project, for example:apiVersion: apps/v1 kind: Deployment metadata: name: house-regression-deployment namespace: aws-tlb-namespace labels: app: house-regression-app spec: replicas: 3 selector: matchLabels: app: house-regression-app template: metadata: labels: app: house-regression-app spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/arch operator: In values: - amd64 containers: - name: house-regression-model image: <your_image_in_ECR> ports: - containerPort: 80 -

Save the following contents to a

yamlfile on your local machine (in this case,house-regression-service.yaml), replacing the values for your project, for example:apiVersion: v1 kind: Service metadata: name: house-regression-service namespace: aws-tlb-namespace labels: app: house-regression-app spec: selector: app: house-regression-app ports: - protocol: TCP port: 8080 targetPort: 8080 type: NodePort -

Create a Kubernetes service and deployment:

kubectl apply -f house-regression-deployment.yaml kubectl apply -f house-regression-service.yaml -

Save the following contents to a

yamlfile on your local machine (in this case,hot-dog-deployment.yaml), replacing the values for your project, for example:apiVersion: apps/v1 kind: Deployment metadata: name: hot-dog-deployment namespace: aws-tlb-namespace labels: app: hot-dog-app spec: replicas: 3 selector: matchLabels: app: hot-dog-app template: metadata: labels: app: hot-dog-app spec: affinity: nodeAffinity: requiredDuringSchedulingIgnoredDuringExecution: nodeSelectorTerms: - matchExpressions: - key: beta.kubernetes.io/arch operator: In values: - amd64 containers: - name: hot-dog-model image: <your_image_in_ECR> ports: - containerPort: 80 -

Save the following contents to a

yamlfile on your local machine (in this case,hot-dog-service.yaml), replacing the values for your project, for example:apiVersion: v1 kind: Service metadata: name: hot-dog-service namespace: aws-tlb-namespace labels: app: hot-dog-app spec: selector: app: hot-dog-app ports: - protocol: TCP port: 8080 targetPort: 8080 type: NodePort -

Create a Kubernetes service and deployment:

kubectl apply -f hot-dog-deployment.yaml kubectl apply -f hot-dog-service.yaml -

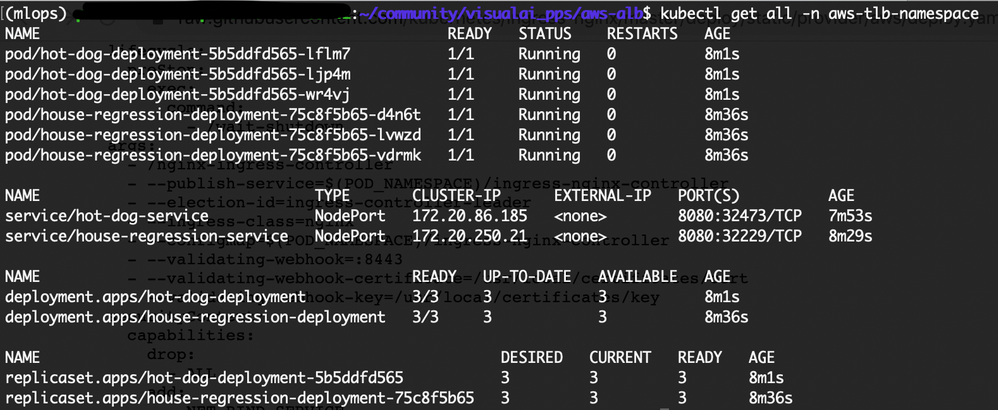

View all resources that exist in the namespace:

kubectl get all -n aws-tlb-namespace
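The two Deployment and Service pairs above differ only in the application name and the image. As a hedged sketch that is not part of the original walkthrough, the following Python builds equivalent manifests from a single template. The function name `build_manifests` and its parameters are illustrative assumptions; the field values are copied from the manifests above.

```python
import json

NAMESPACE = "aws-tlb-namespace"  # namespace used throughout this walkthrough


def build_manifests(app, image, port=8080):
    """Return (deployment, service) dicts that mirror the YAML files above."""
    labels = {"app": f"{app}-app"}
    # Schedule pods only on amd64 nodes, as in the original manifests.
    affinity = {
        "nodeAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {
                        "matchExpressions": [
                            {
                                "key": "beta.kubernetes.io/arch",
                                "operator": "In",
                                "values": ["amd64"],
                            }
                        ]
                    }
                ]
            }
        }
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {
            "name": f"{app}-deployment",
            "namespace": NAMESPACE,
            "labels": labels,
        },
        "spec": {
            "replicas": 3,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "affinity": affinity,
                    "containers": [
                        {
                            "name": f"{app}-model",
                            "image": image,
                            # containerPort 80 is copied from the manifests above.
                            "ports": [{"containerPort": 80}],
                        }
                    ],
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": f"{app}-service",
            "namespace": NAMESPACE,
            "labels": labels,
        },
        "spec": {
            "selector": labels,
            "ports": [{"protocol": "TCP", "port": port, "targetPort": port}],
            "type": "NodePort",
        },
    }
    return deployment, service


if __name__ == "__main__":
    for app in ("house-regression", "hot-dog"):
        for manifest in build_manifests(app, "<your_image_in_ECR>"):
            print(json.dumps(manifest, indent=2))
```

If you save each dictionary to its own `.json` file with `json.dump`, you can pass that file to `kubectl apply -f`, which accepts JSON as well as YAML.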

Create and deploy Ingress resource for path-based routing

-

Save the following contents to a

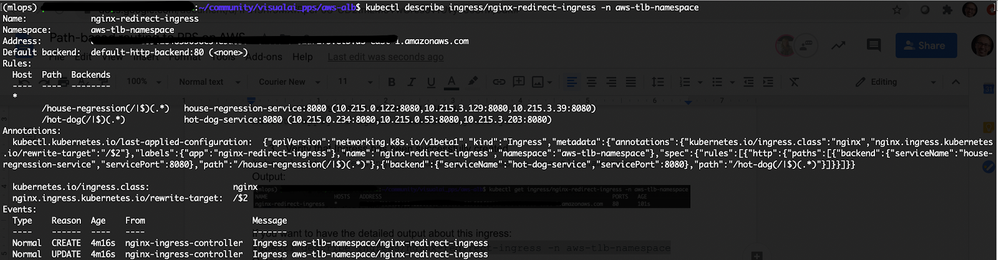

yamlfile on your local machine (in this case,nginx-redirect-ingress.yaml), replacing the values for your project, for example:apiVersion: networking.k8s.io/v1beta1 kind: Ingress metadata: name: nginx-redirect-ingress namespace: aws-tlb-namespace annotations: kubernetes.io/ingress.class: nginx nginx.ingress.kubernetes.io/rewrite-target: /$2 labels: app: nginx-redirect-ingress spec: rules: - http: paths: - path: /house-regression(/|$)(.*) backend: serviceName: house-regression-service servicePort: 8080 - path: /hot-dog(/|$)(.*) backend: serviceName: hot-dog-service servicePort: 8080Note

The

nginx.ingress.kubernetes.io/rewrite-targetannotation rewrites the URL before forwarding the request to the backend pods. As a result, the paths /house-regression/some-house-path and /hot-dog/some-dog-path transform to /some-house-path and /some-dog-path, respectively. -

Create Ingress for path-based routing:

kubectl apply -f nginx-redirect-ingress.yaml -

Verify that Ingress has been successfully created:

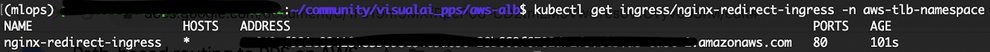

kubectl get ingress/nginx-redirect-ingress -n aws-tlb-namespace

-

(Optional) Use the following if you want to access the detailed output about this ingress:

kubectl describe ingress/nginx-redirect-ingress -n aws-tlb-namespace

Note the value of

Addressin the output for the next two scoring requests. -

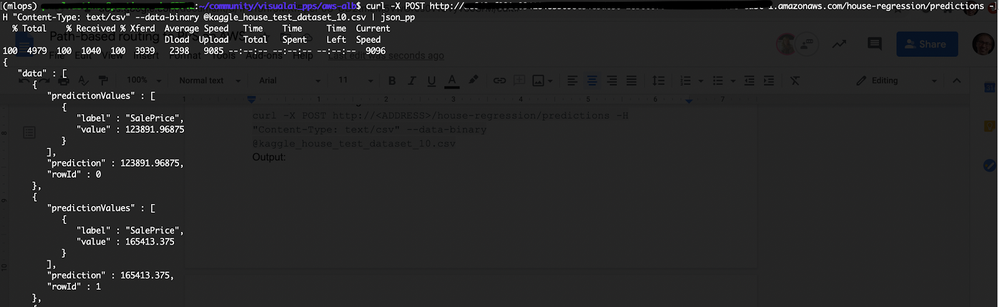

Score the house-regression model:

curl -X POST http://<ADDRESS>/house-regression/predictions -H "Content-Type: text/csv" --data-binary @kaggle_house_test_dataset_10.csv

-

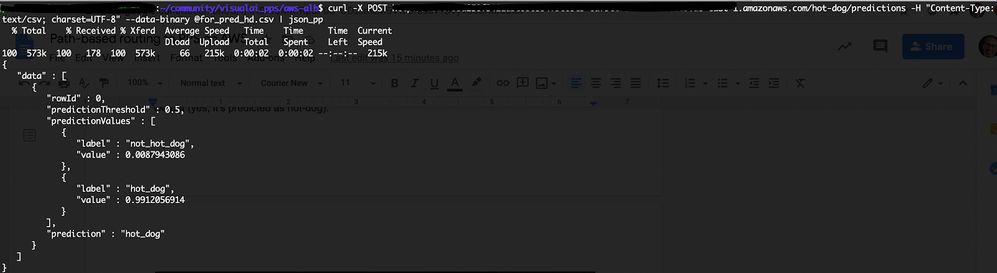

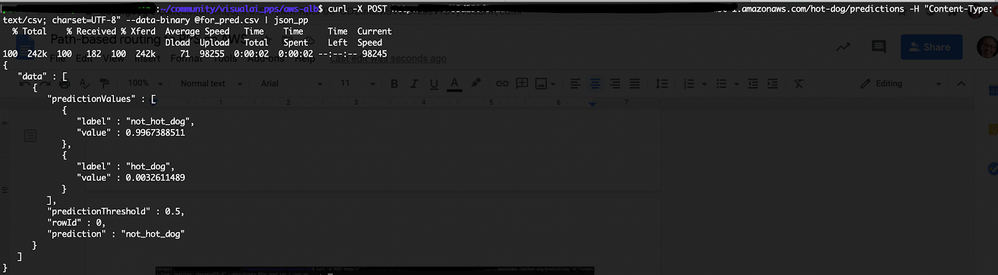

Score the hot-dog model:

curl -X POST http://<ADDRESS>/hot-dog/predictions -H "Content-Type: text/csv; charset=UTF-8" --data-binary @for_pred.csvNote

for_pred.csvis a CSV file containing one column with the header. The content for that column is a Base64 encoded image.Original photo for prediction (downloaded from here):

The image is predicted to be a hot dog:

Another photo for prediction (downloaded from here):

The image is predicted not to be a hot dog:
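The note on `for_pred.csv` describes a single-column CSV file whose value is a Base64-encoded image. A minimal Python sketch of producing such a file follows. The column header is not given in this text, so `header` is a required parameter that must match the image feature name your model expects. The function names and file names here are hypothetical.

```python
import base64
import csv
import io


def build_prediction_csv(image_bytes, header):
    """Return CSV text with one header row and one Base64-encoded image value."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([header])
    writer.writerow([encoded])
    return buffer.getvalue()


def write_prediction_csv(image_path, csv_path, header):
    """Read an image file and write it out as a single-column CSV file."""
    with open(image_path, "rb") as image_file:
        text = build_prediction_csv(image_file.read(), header)
    with open(csv_path, "w", newline="", encoding="utf-8") as csv_file:
        csv_file.write(text)
```

For example, `write_prediction_csv("photo.jpg", "for_pred.csv", header="<your_image_column>")` would create a file that can be sent with the `curl` command shown for the hot-dog model, assuming the header matches the feature name used in training.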
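To make the effect of the `rewrite-target` annotation concrete, the following Python sketch applies the same capture-group patterns used in the Ingress paths. It only mimics the substitution with Python's `re` module and does not reflect how NGINX is implemented internally. The function name `rewrite_path` is illustrative.

```python
import re

# Path patterns from the Ingress resource, mapped to their backend services.
INGRESS_PATHS = {
    r"/house-regression(/|$)(.*)": "house-regression-service",
    r"/hot-dog(/|$)(.*)": "hot-dog-service",
}


def rewrite_path(request_path):
    """Return (service, rewritten_path) for a request path, or None if no rule matches."""
    for pattern, service in INGRESS_PATHS.items():
        match = re.match(pattern, request_path)
        if match:
            # Equivalent of rewrite-target "/$2": a slash plus the second capture group.
            return service, "/" + match.group(2)
    return None
```

With these rules, a request to `/house-regression/predictions` reaches `house-regression-service` as `/predictions`, which is why both scoring requests can target the same address with different path prefixes.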

Clean up

-

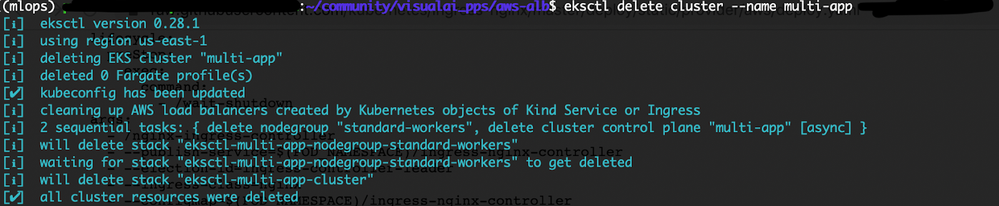

Remove the sample services, deployments, pods, and namespaces:

kubectl delete namespace aws-tlb-namespace kubectl delete namespace ingress-nginx -

Delete the cluster:

eksctl delete cluster --name multi-app

Wrap-up

Deploying several Kubernetes services behind the same IP address minimizes the number of load balancers you need and simplifies maintenance of the applications. Kubernetes Ingress controllers make this possible.

This walkthrough described how to set up path-based routing to multiple Portable Prediction Servers (PPSs) deployed on Amazon EKS, using the NGINX Ingress controller.