Observability subchart¶

This section shows how to configure the DataRobot chart to export platform telemetry to an observability backend with OpenTelemetry:

- The structure of the observability subchart, each of the instances that compose it, and the responsibilities of each

- How to install and configure the observability subchart, including how to configure exporters for the supported backends and how to provision those backends on AWS, Azure, and GCP

- How to optionally extend OTEL pipelines

- How to run helm tests to ensure that receivers are ready to accept telemetry data

Platform telemetry encompasses the logs, metrics, and traces used to monitor the overall performance, availability, and resource utilization of the system, and to troubleshoot it. User-level workload telemetry, such as user workload logs or agentic traces, isn't included.

Subchart structure¶

The observability subchart is named datarobot-observability-core, which is in turn composed of several other charts:

- kube-state-metrics: service that generates metrics about the state of Kubernetes objects (chart)

- prometheus-node-exporter (daemonset): Prometheus exporter for hardware and OS metrics exposed by *NIX kernels (chart)

And several OpenTelemetry collector instances (chart), broken down below by the telemetry they export:

| Collector subchart name | Mode | Logs | Metrics | Traces |
|---|---|---|---|---|
| opentelemetry-collector-daemonset | daemonset | Events, pod logs | cAdvisor, kubelet stats | N/A |
| opentelemetry-collector-deployment | deployment | N/A | Application SDK instrumented | Application SDK instrumented |
| opentelemetry-collector-scraper | deployment | N/A | k8s service endpoints, control plane | N/A |
| opentelemetry-collector-scraper-static | deployment | N/A | kube-state-metrics | N/A |
| opentelemetry-collector-statsd | deployment | N/A | Application SDK instrumented | N/A |

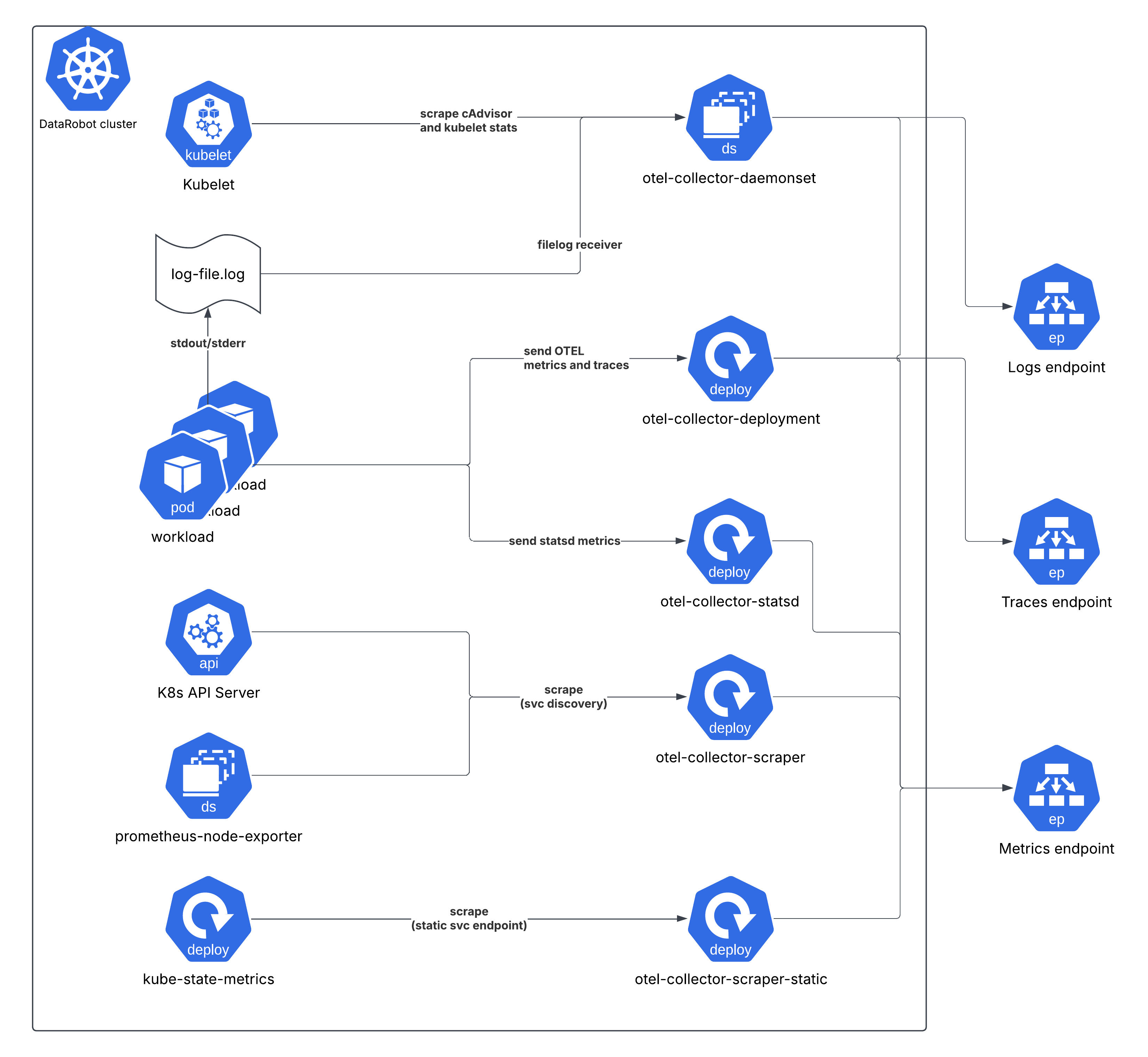

High level architecture¶

The following diagram shows a high level view of the whole setup for a DataRobot cluster.

Telemetry generated by the workloads (that is, emitted explicitly by the application code) reaches the collectors in one of three ways: OTEL traces and metrics are sent to the opentelemetry-collector-deployment service endpoint, statsd metrics are sent to opentelemetry-collector-statsd, and log entries written to stdout/stderr are collected indirectly by opentelemetry-collector-daemonset, which tails the log files.

Cluster-level metrics (cAdvisor, kube-state-metrics, node exporter, kubelet stats) are scraped at a regular interval by the other collector instances.

Each collector must then be configured with the endpoint to export the telemetry to. This is explained in detail in the corresponding section for the environment or observability backend.
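As a rough illustration of what configuring an export endpoint can look like, the fragment below sketches a Helm values override that points one collector at an OTLP backend. It assumes the collector subcharts accept the upstream opentelemetry-collector chart's `config` key and are nested under `datarobot-observability-core`. The nesting, the choice of the `otlp` exporter, and the endpoint `otel-backend.example.com:4317` are placeholders for illustration only; the example values file for each backend remains the reference.

```yaml
# Hypothetical values override. The key nesting is an assumption and
# is not taken from the DataRobot chart itself.
datarobot-observability-core:
  opentelemetry-collector-deployment:
    config:
      exporters:
        otlp:
          # Placeholder: replace with the backend's OTLP gRPC address.
          endpoint: otel-backend.example.com:4317
      service:
        pipelines:
          # Route application traces and metrics to the exporter above.
          traces:
            exporters: [otlp]
          metrics:
            exporters: [otlp]
```

Each of the other collector instances would need an equivalent exporter entry for the telemetry types it handles.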

Priority classes¶

The two daemonsets (opentelemetry-collector-daemonset and prometheus-node-exporter) are assigned priorityClassName: "system-cluster-critical". This gives their Pods priority over all standard workloads in the cluster (those with no class assigned or with a lower custom value), both for scheduling (the scheduler places them first) and for eviction (they resist being evicted when the node is under resource pressure).

This high priority is necessary because these agents are the definitive source of cluster health and status data. If the monitoring is compromised, the ability to diagnose any potential cluster issue is lost.

See also the Kubernetes documentation on PriorityClass.
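For orientation, this is where the priority class appears in a Pod template. The fragment is a trimmed, illustrative DaemonSet manifest and not the chart's actual rendered output; the metadata name is used here only as an example, and required fields such as the selector and containers are omitted.

```yaml
# Illustrative fragment only: shows the field that carries the priority class.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: opentelemetry-collector-daemonset  # example name, may differ in a real release
spec:
  template:
    spec:
      # Built-in Kubernetes priority class referenced in this section.
      priorityClassName: system-cluster-critical
```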

Cluster roles¶

Observing the cluster requires cluster roles that are bound to the collectors and to the metrics-exposing services (kube-state-metrics and node exporter). This section breaks down the roles for each instance.

opentelemetry-collector-daemonset¶

ClusterRole Name: otel-collector

| API Group(s) | Resources | Verbs (Permissions) |
|---|---|---|
| "" (Core) | nodes, nodes/proxy, nodes/metrics, nodes/spec, services, endpoints, pods, pods/status, replicationcontrollers, replicationcontrollers/status, resourcequotas, events, namespaces, namespaces/status | get, list, watch |
| apps | daemonsets, deployments, replicasets, statefulsets | get, list, watch |
| extensions | daemonsets, deployments, replicasets | get, list, watch |
| batch | jobs, cronjobs | get, list, watch |
| autoscaling | horizontalpodautoscalers | get, list, watch |

opentelemetry-collector-scraper¶

ClusterRole Name: otel-collector-scraper

| API Group(s) | Resources | Verbs (Permissions) |
|---|---|---|
| "" (Core) | nodes, nodes/proxy, nodes/metrics, nodes/spec, services, endpoints, pods, pods/status, replicationcontrollers, replicationcontrollers/status, resourcequotas, events, namespaces, namespaces/status | get, list, watch |
| apps | daemonsets, deployments, replicasets, statefulsets | get, list, watch |
| extensions | daemonsets, deployments, replicasets, ingresses | get, list, watch |
| Non-Resource URLs | /metrics | get |
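Expressed as a Kubernetes manifest, the permissions listed above correspond roughly to the following ClusterRole. This is a reconstruction from the listed permissions for illustration; the chart's own templates are authoritative and may group or order the rules differently.

```yaml
# Reconstructed from the permissions listed above; not copied from the chart.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: otel-collector-scraper
rules:
  # Core API group: read-only access for endpoint discovery.
  - apiGroups: [""]
    resources:
      - nodes
      - nodes/proxy
      - nodes/metrics
      - nodes/spec
      - services
      - endpoints
      - pods
      - pods/status
      - replicationcontrollers
      - replicationcontrollers/status
      - resourcequotas
      - events
      - namespaces
      - namespaces/status
    verbs: ["get", "list", "watch"]
  - apiGroups: ["apps"]
    resources: ["daemonsets", "deployments", "replicasets", "statefulsets"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["extensions"]
    resources: ["daemonsets", "deployments", "replicasets", "ingresses"]
    verbs: ["get", "list", "watch"]
  # Non-resource URL rule: allows scraping /metrics endpoints.
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
```

All rules are read-only: none of the listed verbs allow creating, updating, or deleting objects.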

opentelemetry-collector-scraper-static¶

ClusterRole Name: otel-collector-scraper-static

| API Group(s) | Resources | Verbs (Permissions) |
|---|---|---|
| "" (Core) | nodes, nodes/proxy, nodes/metrics, nodes/spec, services, endpoints, pods, pods/status, replicationcontrollers, replicationcontrollers/status, resourcequotas, events, namespaces, namespaces/status | get, list, watch |
| apps | daemonsets, deployments, replicasets, statefulsets | get, list, watch |
| extensions | daemonsets, deployments, replicasets, ingresses | get, list, watch |
| Non-Resource URLs | /metrics | get |

kube-state-metrics¶

| Resources (and API Group) | Non-Resource URLs | Resource Names | Verbs (Permissions) |
|---|---|---|---|
| namespaces | [] | [] | list, watch |
| nodes | [] | [] | list, watch |
| persistentvolumeclaims | [] | [] | list, watch |
| persistentvolumes | [] | [] | list, watch |
| pods | [] | [] | list, watch |
| secrets | [] | [] | list, watch |
| services | [] | [] | list, watch |
| daemonsets.apps | [] | [] | list, watch |
| deployments.apps | [] | [] | list, watch |
| statefulsets.apps | [] | [] | list, watch |
| horizontalpodautoscalers.autoscaling | [] | [] | list, watch |
| jobs.batch | [] | [] | list, watch |
| daemonsets.extensions | [] | [] | list, watch |
| deployments.extensions | [] | [] | list, watch |
| ingresses.extensions | [] | [] | list, watch |
| ingresses.networking.k8s.io | [] | [] | list, watch |

Environment specific configuration¶

The configuration varies depending on the chosen observability backend. There is an example values file for each supported backend, with placeholders for a minimal installation (that is, without extending the pipelines with custom OTEL processors).

Refer to each of the specific sections depending on the cloud or observability provider. Each of these includes the complete minimal sample YAML files with the placeholders to update to export the telemetry.

Note

The datarobot-observability-core subchart and its subcharts are all disabled by default in the datarobot-prime parent chart. Each of the example overrides referenced below enables all of them.
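To make the note concrete, an override that turns the subcharts on might look like the sketch below. The `enabled` keys follow a common Helm convention and are an assumption here, as is the nesting under `datarobot-observability-core`; the example values files for each backend show the actual key names.

```yaml
# Hypothetical override: the `enabled` keys are an assumed convention,
# not confirmed against the datarobot-prime chart.
datarobot-observability-core:
  enabled: true
  kube-state-metrics:
    enabled: true
  prometheus-node-exporter:
    enabled: true
  opentelemetry-collector-daemonset:
    enabled: true
  opentelemetry-collector-deployment:
    enabled: true
  opentelemetry-collector-scraper:
    enabled: true
  opentelemetry-collector-scraper-static:
    enabled: true
  opentelemetry-collector-statsd:
    enabled: true
```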

Hyperscaler Managed services¶

The following sections describe the infrastructure requirements, in addition to the exporter configuration, for the cloud that DataRobot is deployed on:

- AWS CloudWatch/X-Ray/Prometheus/Grafana

- Azure Monitor/Managed Prometheus and Grafana

- Google Cloud Monitoring

Observability vendors¶

For other observability vendors:

General configuration¶

Configuration that's not environment specific (OTEL pipeline extension, helm tests, etc.) can be found here.