Hugging FaceハブからのLLMのデプロイ¶

プレミアム機能

Hugging FaceハブからLLMをデプロイするには、GenAIエクスペリメントとGPU推論のためのプレミアム機能へのアクセスが必要です。 これらの機能を有効にするには、DataRobotの担当者または管理者にお問い合わせください。

Hugging Face Hubで一般的なオープンソースLLMを作成およびデプロイする際、ワークショップを利用できます。これにより、DataRobotでは、GenAIに対して提供されるエンタープライズグレードの可観測性とガバナンスによってAIアプリが保護されます。 新しい [GenAI] vLLM Inference Server実行環境および vLLM Inference Server Text Generation Templateは、DataRobotが提供するGenAIモニタリング機能およびボルトオンガバナンスAPIとすぐに統合できます。

このインフラストラクチャは、LLMの推論と提供のためのオープンソースフレームワークであるvLLMライブラリを使用して、Hugging Faceライブラリと連携し、一般的なオープンソースLLMをHugging Face Hubからシームレスにダウンロードして読み込みます。 まず、テキスト生成モデルのテンプレートをカスタマイズしてください。 デフォルトでは Llama-3.1-8bLLMを使用しますが、engine_config.jsonファイルを変更して使用するOSSモデル名を指定することで、選択するモデルを変更することができます。

前提条件¶

DataRobotでHugging Face LLMの組み立てを開始する前に、次のリソースを取得します。

-

ゲート付きモデルにアクセスするには、 Hugging Faceアカウントと

READ権限を備えた Hugging Faceアクセストークンが必要です。 Hugging Faceアクセストークンに加えて、モデルの作成者に モデルアクセス権限をリクエストします。

テキスト生成カスタムモデルの作成¶

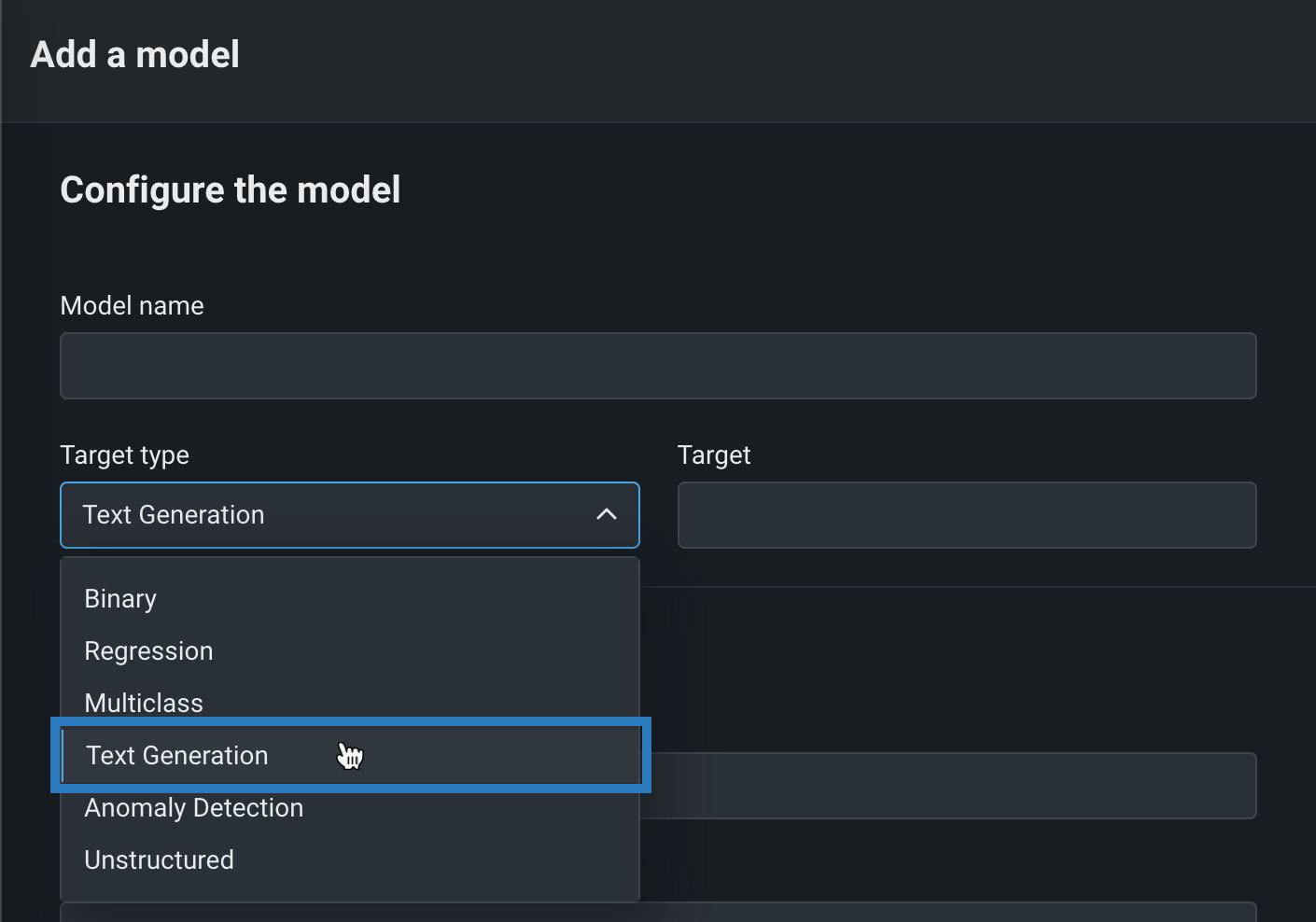

カスタムLLMを作成するには、ワークショップで新しいカスタムテキスト生成モデルを作成します。 ターゲットタイプをテキスト生成に設定し、ターゲットを定義します。

カスタムLLMの必要なファイルをアップロードする前に、GenAI vLLM推論サーバーを基本環境リストから選択します。 モデル環境は、カスタムモデルの テストと 登録済みカスタムモデルの デプロイに使用されます。

必要な環境が利用できない場合はどうなりますか?

GenAI vLLM推論サーバー環境が基本環境リストにない場合、DRUMリポジトリの public_dropin_gpu_environments/vllmディレクトリにあるリソースを使用して カスタム環境を追加することができます。

カスタムLLMのアセンブル¶

カスタムLLMをアセンブルするには、DRUMリポジトリの model_templates/gpu_vllm_textgenディレクトリにあるカスタムモデルファイルを使用します。 モデルファイルを手動で追加するか、 DRUMリポジトリからプルできます。

モデルファイルを追加した後、読み込むHugging faceモデルを選択できます。 デフォルトでは、このテキスト生成の例は Llama-3.1-8bモデルを使用します。 選択したモデルを変更するには、engine_config.jsonファイルの横にある edit をクリックして--model引数を変更します。

{

"args": [

"--model", "meta-llama/Llama-3.1-8B-Instruct"

]

}

必要なランタイムパラメーターの設定¶

カスタムLLMのファイルがアセンブルされた後、カスタムモデルのmodel-metadata.yamlファイルで定義されたランタイムパラメーターを設定します。 次のランタイムパラメーターは 未設定なので、設定が必要です。

| ランタイムパラメーター | 説明 |

|---|---|

HuggingFaceToken |

少なくともREAD権限を備えた Hugging Faceアクセストークンを含む、 資格情報管理ページで作成されたDataRobot APIトークン資格情報 |

system_prompt |

カスタムLLMのすべての個別プロンプトの前に付加される「ユニバーサル」プロンプト LLMのレスポンスを指示およびフォーマットします。 システムプロンプトは、レスポンス生成中に作成される構造、トーン、形式、コンテンツに影響を与えることがあります。 |

さらに、次のランタイムパラメーターのデフォルト値を更新できます。

| ランタイムパラメーター | 説明 |

|---|---|

max_tokens |

チャット補完で生成できるトークンの最大数 この値を使用して、APIを介して生成されたテキストのコストを制御できます。 |

max_model_len |

モデルのコンテキスト長 指定しない場合、このパラメーターはモデル設定から自動的に派生します。 |

prompt_column_name |

LLMプロンプトを含む入力列の名前 |

gpu_memory_utilization |

モデルの実行に使用するGPUメモリーの割合。0から1までの範囲で指定できます。たとえば、0.5という値は、GPUメモリー使用率50%を示します。 指定しない場合、デフォルト値の0.9が使用されます。 |

高度な設定

[GenAI] vLLM Inference Server実行環境で使用できるランタイムパラメーターと設定オプションの詳細については、環境 READMEを参照してください。

カスタムモデルのリソースの設定¶

カスタムモデルリソースで、適切なGPUバンドル(少なくともGPU - L / gpu.large)を選択します。

必要なリソースバンドルを推定するには、次の式を使用します。

ここで、各特徴量は以下を表します。

| 特徴量 | 説明 |

|---|---|

| \(M\) | ギガバイト (GB) 単位で必要なGPUメモリー |

| \(P\) | モデル内のパラメーターの数(たとえば、Llama-3.1-8bには80億個のパラメーターがあります)。 |

| \(Q\) | モデルのロードに使用されるビット数(4、8、または16など) |

手動計算の詳細

詳細については、 LLMに対応するためのGPUメモリーの計算を参照してください。

Hugging Faceでの自動計算

Hugging faceでGPUメモリー要件を計算することもできます。

この例のモデル Llama-3.1-8bの場合、次のように評価されます。

したがって、このモデルには19.2GBのメモリーが必要であり、GPU - Lバンドル (1 x NVIDIA A10G | 24GB VRAM | 8 CPU | 32GB RAM) を選択する必要があることを示しています。

カスタムLLMのデプロイと実行¶

カスタムLLMをアセンブルして設定した後、以下を実行します。

-

カスタムモデルをテストする(テキスト生成モデルの場合、テストプランには起動テストと予測エラーテストのみが含まれます)。

デプロイされたLLMでの追加のガバナンスAPIの使用¶

生成されるLLMデプロイではデフォルトでDRUMを使用するため、OpenAI Python APIライブラリを使用してOpenAIチャット補完API仕様を実装するボルトオンのガバナンスAPIを使用できます。 詳細については、 OpenAI Python APIライブラリのドキュメントを参照してください。

以下のコードスニペットで強調表示されている行では、{DATAROBOT_DEPLOYMENT_ID}および{DATAROBOT_API_KEY}のプレースホルダーをLLMデプロイのIDとDataRobot APIキーに置き換える必要があります。

| Call the Chat API for the deployment | |

|---|---|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 | |

Dockerを使用したローカルでのテスト¶

Dockerを使用してローカルでカスタムモデルをテストするには、次のコマンドを使用できます。

export HF_TOKEN=<INSERT HUGGINGFACE TOKEN HERE>

cd ~/datarobot-user-models/public_dropin_gpu_environments/vllm

cp ~/datarobot-user-models/model_templates/gpu_vllm_textgen/* .

docker build -t vllm .

docker run -p8080:8080 \

--gpus 'all' \

--net=host \

--shm-size=8GB \

-e DATAROBOT_ENDPOINT=https://app.datarobot.com/api/v2 \

-e DATAROBOT_API_TOKEN=${DATAROBOT_API_TOKEN} \

-e MLOPS_DEPLOYMENT_ID=${DATAROBOT_DEPLOYMENT_ID} \

-e TARGET_TYPE=textgeneration \

-e TARGET_NAME=completions \

-e MLOPS_RUNTIME_PARAM_HuggingFaceToken="{\"type\": \"credential\", \"payload\": {\"credentialType\": \"api_token\", \"apiToken\": \"${HF_TOKEN}\"}}" \

vllm

複数のGPUの使用

--shm-size引数は、複数のGPUを利用してLLMを実行しようとしている場合にのみ必要です。