GenAI overview

By leveraging DataRobot's AI platform for generative AI, you can:

- Safely extend LLMs with proprietary data.

- Build and deploy generative AI solutions using your tool of choice.

- Confidently manage and govern LLMs in production.

- Unify generative and predictive AI workflows end-to-end.

- Continuously improve GenAI applications with predictive modeling and user feedback.

DataRobot's generative AI offering builds on DataRobot's predictive AI experience to provide confidence scores, and it lets you bring your favorite libraries, choose your LLMs, and integrate third-party tools. You can embed or deploy AI wherever it will drive value for your business, and leverage built-in governance for each asset in the pipeline.

While there is a perception that "it's all about the model," in reality the value depends more on the end-to-end GenAI strategy. The quality of the vector database (if used), the prompting strategy, and ongoing monitoring, maintenance, and governance are all critical to success.

With DataRobot GenAI capabilities, you can generate text content using a variety of pre-trained large language models (LLMs). Additionally, you can tailor the content to your data by building vector databases and leveraging them in the LLM blueprints.

For trial users

If you are a DataRobot trial user, see the FAQ for information on trial-specific capabilities. To start a DataRobot trial of predictive and generative AI, click Start a free trial at the top of this page.

See the list of considerations to keep in mind when working with DataRobot GenAI.

Best practices for prompt engineering

Prompt engineering refers to the process of carefully crafting the input prompts that you give to an LLM to maximize the usefulness of the output it generates. This can be a critical step in getting the most out of these models, as the way you phrase your prompt can significantly influence the response. The following are some best practices for prompt engineering:

| Characteristic | Explanation |
|---|---|
| Specificity | Make prompts as specific as possible. Instead of asking “What’s the weather like?”, ask “What’s the current temperature in San Francisco, California?” The latter is more likely to yield the information you’re looking for. |
| Explicit instructions | If you have a specific format or type of answer in mind, make that clear in your prompt. For example, if you want a list, ask for a list. If you want a yes or no answer, ask for that. |
| Contextual information | If relevant, provide some context to guide the model. For instance, if you’re asking for advice on writing scientific content, make sure to mention that in your prompt. |
| Use of examples | When you want the model to generate in a particular style or format, giving an example can help guide the output. For instance, if you want a rhyming couplet, you could include an example of one in your prompt. |
| Prompt length | While it can be useful to provide context, remember that longer prompts may lead the model to focus more on the later parts of the prompt and disregard earlier information. Be concise and to the point. |
| Bias and ethical considerations | Be aware that the way you phrase your prompt can influence the output in terms of bias and harmful responses. Ensure your prompts are as neutral and fair as possible, and be aware that the model can reflect biases present in its training data. |
| Temperature and Top P settings | In addition to the prompt itself, you can also adjust the ‘temperature’ and ‘Top P’ settings. Higher temperature values make output more random, while lower values make it more deterministic. Top P controls the diversity of the output by limiting the model to the smallest set of most likely next tokens whose cumulative probability reaches P. |
| Token limitations | Be aware of the maximum token limitations of the model. For example, the original GPT-3.5 Turbo has a limit of 4,096 tokens, shared between the prompt and the response. If your prompt is too long, it could limit the length of the model’s response. |
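
To make the temperature and Top P settings concrete, the following is a minimal sketch of how they are commonly applied to a model's next-token scores. It is an illustration only, not DataRobot's or any provider's implementation; the vocabulary, the scores, and the function names are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability reaches top_p, then renormalize."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


# Hypothetical next-token scores for a four-word vocabulary.
vocab = ["sunny", "cloudy", "rainy", "purple"]
logits = [2.0, 1.0, 0.5, -1.0]

cool = softmax_with_temperature(logits, 0.5)  # more deterministic
warm = softmax_with_temperature(logits, 2.0)  # more random
nucleus = top_p_filter(softmax_with_temperature(logits, 1.0), 0.8)
```

With these scores, the low-temperature distribution puts more weight on the top word than the high-temperature one, and a Top P of 0.8 leaves only the two most likely words available for sampling.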

These are some of the key considerations in prompt engineering, but the exact approach depends on the specific use case, model, and kind of output you’re looking for. It’s often a process of trial and error and can require a good understanding of both your problem domain and the capabilities and limitations of the model.
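
As a rough illustration of several practices from the table (specificity, explicit instructions, context, examples, and token limits), a prompt can be assembled from labeled parts. The helper names below are hypothetical, and the four-characters-per-token estimate is only an assumed rule of thumb; a real tokenizer gives different counts.

```python
def build_prompt(task, context="", output_format="", examples=()):
    """Assemble a prompt, placing the task and format last so they are not crowded out."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    for sample in examples:
        parts.append(f"Example: {sample}")
    parts.append(f"Task: {task}")
    if output_format:
        parts.append(f"Answer format: {output_format}")
    return "\n".join(parts)


def fits_budget(prompt, max_tokens, reserved_for_answer, chars_per_token=4):
    """Approximate check that the prompt leaves room for the response (assumed heuristic)."""
    estimated_prompt_tokens = len(prompt) // chars_per_token + 1
    return estimated_prompt_tokens + reserved_for_answer <= max_tokens


prompt = build_prompt(
    task="What is the current temperature in San Francisco, California?",
    context="The reader is planning an outdoor event today.",
    output_format="One sentence with the temperature in degrees Fahrenheit.",
)
within_limit = fits_budget(prompt, max_tokens=4096, reserved_for_answer=500)
```

Keeping the parts separate makes the trial-and-error process easier, because one element (for example, the answer format) can be changed while the rest of the prompt stays fixed.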

Example use case

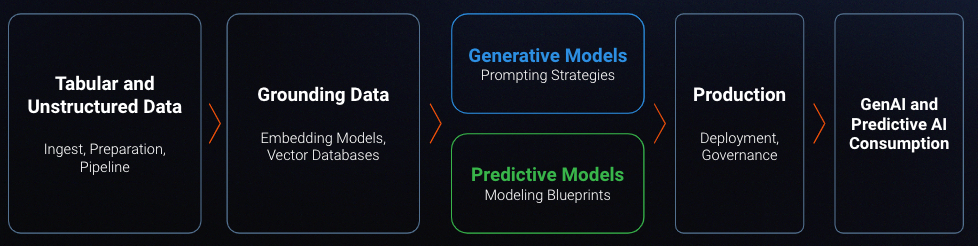

The following example illustrates how DataRobot leverages its own technology-agnostic, scalable, extensible, flexible, and repeatable framework to build an end-to-end generative AI solution at scale.

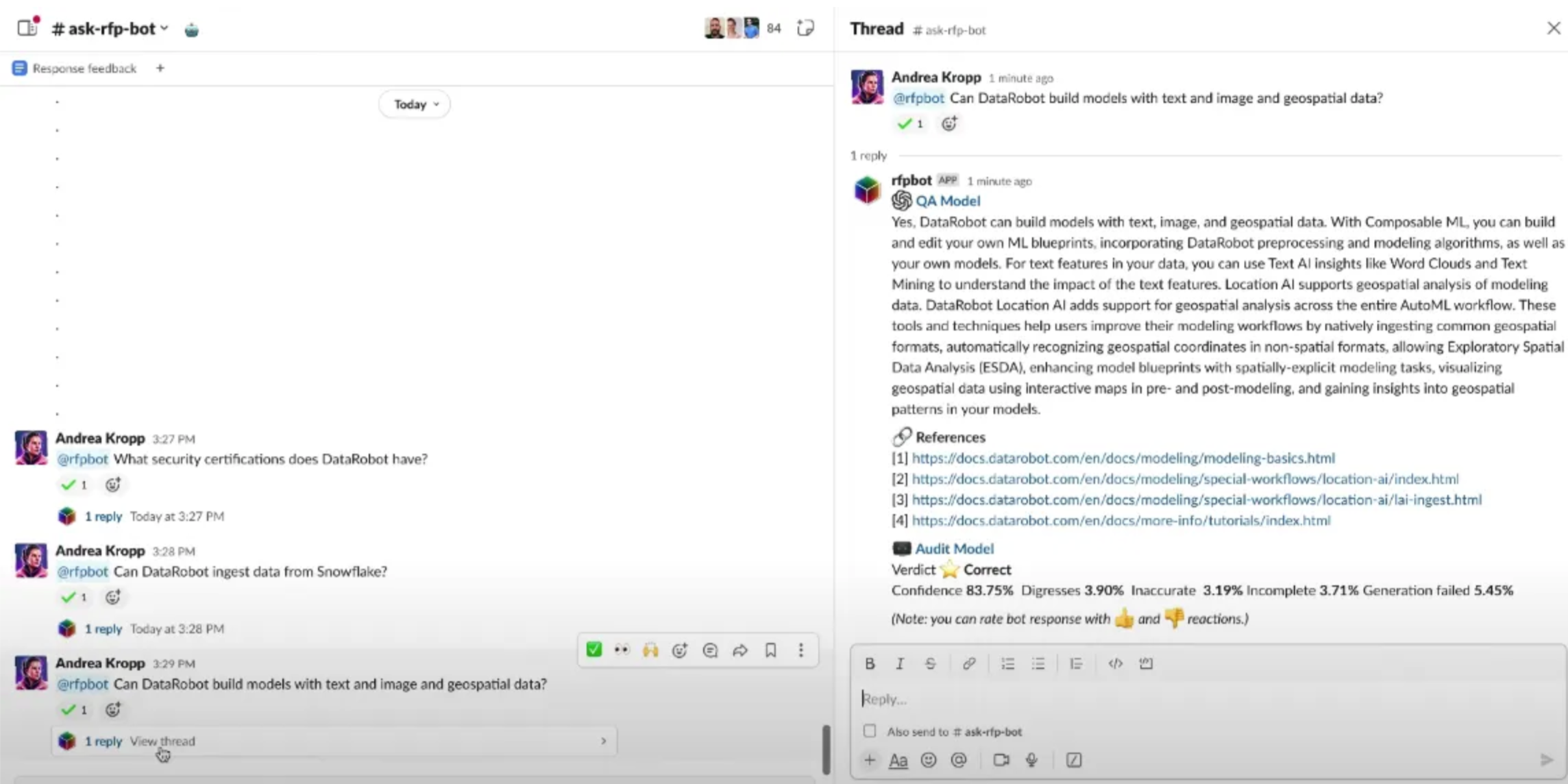

This article and the embedded video showcase a Request for Proposal Assistant named RFPBot. RFPBot has a predictive and a generative component and was built entirely within DataRobot in the course of a single afternoon.

In the image below, notice the content that follows the paragraph of generated text. There are four links to references, five subscores from the audit model, and an instruction to up-vote or down-vote the response.

RFPBot uses an organization’s internal data to help salespeople generate RFP responses in a fraction of the usual time. The speed increase is attributable to three sources:

- The custom knowledge base underpinning the solution. This stands in for the experts that would otherwise be tapped to answer the RFP.

- The use of Generative AI to write the prose.

- Integration with the organization’s preferred consumption environment (Slack, in this case).

RFPBot integrates best-of-breed components during development. After development, the entire solution is monitored in real time. RFPBot showcases both the framework itself and, more generally, the power of combining generative and predictive AI to deliver business results.

Note that the concepts and processes are transferable to any other use case that requires accurate and complete written answers to detailed questions.

Applying the framework

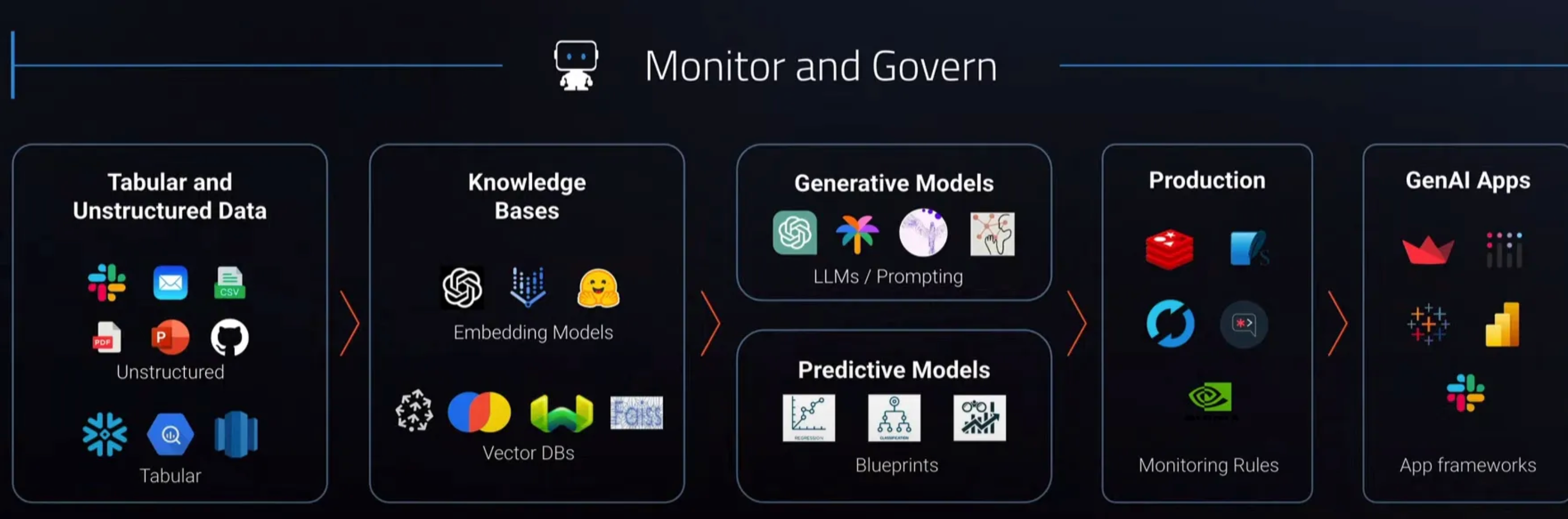

Within each major framework component, there are many choices of tools and technology. When implementing the framework, any choice is possible at each stage. Because organizations want to use best-of-breed components, and which technology is best-of-breed will change over time, what really matters is flexibility and interoperability in a rapidly changing tech landscape. The icons shown are among the current possibilities.

The choice at each stage in the framework is independent of the others. The role of the DataRobot AI Platform is to orchestrate, govern, and monitor the whole solution. RFPBot uses the following:

- Word, Excel, and Markdown files as source content

- An embedding model from Hugging Face (all-MiniLM-L6-v2)

- A Facebook AI Similarity Search (FAISS) vector database

- GPT-3.5 Turbo

- A logistic regression model

- A Streamlit application

- A Slack integration

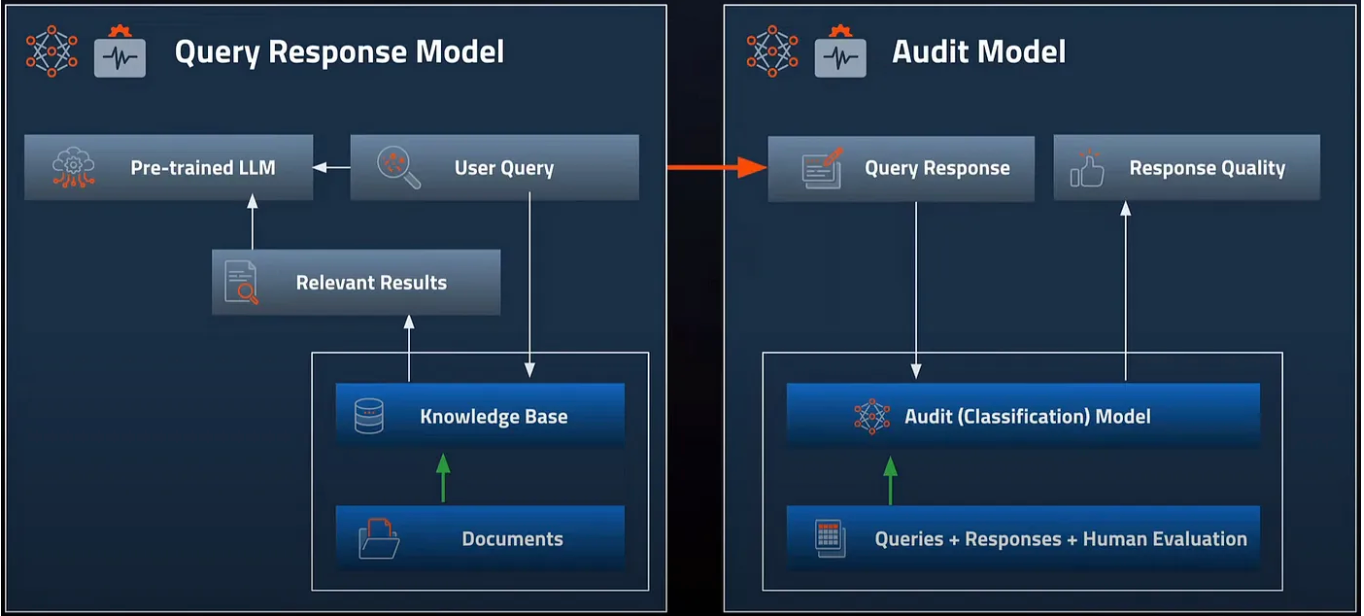

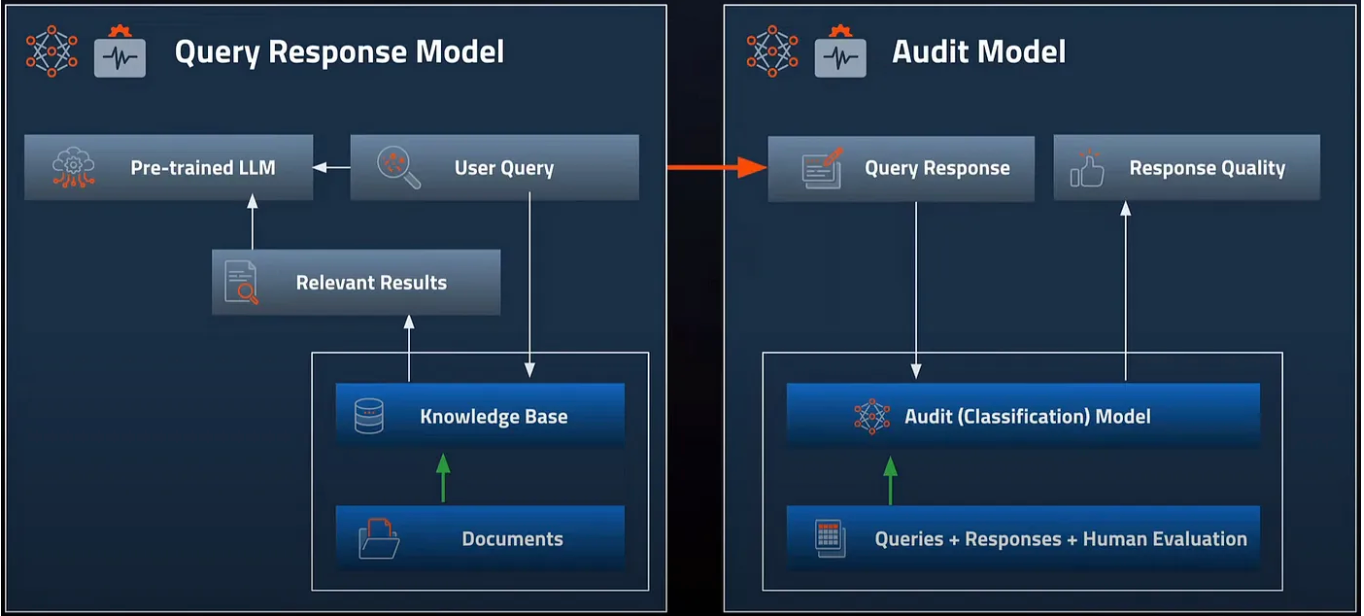

Generative and predictive models work together. Users interact with two models each time they type a question: a Query Response Model and an Audit Model.

- The Query Response Model is generative: It creates the answer to the query.

- The Audit Model is predictive: It evaluates the correctness of the answer and reports it as a predicted probability.
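
The pairing of the two models can be sketched as follows. This is a simplified illustration, not RFPBot's actual audit model: the feature names, coefficients, and threshold are hypothetical, and a real logistic regression would learn its coefficients from labeled answers.

```python
import math

# Hypothetical coefficients, chosen only for illustration.
WEIGHTS = {"retrieval_similarity": 3.0, "answer_length_ratio": -0.5, "cites_source": 1.5}
INTERCEPT = -2.0


def audit_probability(features):
    """Logistic regression score: the predicted probability that an answer is correct."""
    z = INTERCEPT + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def respond(answer, features, threshold=0.5):
    """Pair a generated answer with its audit score and a low-confidence flag."""
    score = audit_probability(features)
    return {"answer": answer, "confidence": round(score, 3), "flagged": score < threshold}


# Placeholder answers stand in for the output of the generative model.
strong = respond(
    "Answer grounded in retrieved documents.",
    {"retrieval_similarity": 0.9, "answer_length_ratio": 1.0, "cites_source": 1},
)
weak = respond(
    "Answer with little supporting context.",
    {"retrieval_similarity": 0.1, "answer_length_ratio": 0.2, "cites_source": 0},
)
```

Returning the score alongside the answer lets the consuming application decide how to present low-confidence responses, for example by flagging them for human review.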

The citations listed as resources in the RFPBot example are citations of internal documents drawn from the knowledge base. The knowledge base was created by applying an embedding model to a set of documents and files and storing the result in a vector database. This step solves the problem of LLMs being stuck in time and lacking the context from private data. When a user queries RFPBot, context-specific information drawn from the knowledge base is made available to the LLM and shown to the user as a source for the generation.
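
The retrieval step described above can be sketched in a few lines. To stay self-contained, this example uses word counts as a toy stand-in for the embedding model and an in-memory list as a stand-in for the vector database; the class, function names, and sample documents are all hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class KnowledgeBase:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self.entries = []  # each entry is (source name, text, vector)

    def add(self, source, text):
        self.entries.append((source, text, embed(text)))

    def search(self, query, k=2):
        query_vector = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vector, e[2]), reverse=True)
        return ranked[:k]


def build_grounded_prompt(kb, question, k=2):
    """Retrieve the closest passages and return the prompt plus the sources to cite."""
    hits = kb.search(question, k)
    context = "\n".join(f"[{source}] {text}" for source, text, _ in hits)
    prompt = f"Answer using only the context below.\n{context}\nQuestion: {question}"
    return prompt, [source for source, _, _ in hits]


kb = KnowledgeBase()
kb.add("security.md", "all customer data is encrypted at rest and in transit")
kb.add("pricing.xlsx", "pricing is based on the number of seats per year")
kb.add("support.docx", "support is available by email on business days")

prompt, sources = build_grounded_prompt(kb, "is customer data encrypted", k=1)
```

The list of sources returned with the prompt is what allows the application to show citations next to the generated text.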

Orchestration and monitoring

The whole end-to-end solution integrating best-of-breed components is built in a DataRobot-hosted notebook, which has enterprise security, sharing, and version control.

Once built, the solution is monitored using standard and custom-defined metrics. In the image below, notice the metrics specific to LLMOps, such as Informative Response, Truthful Response, Prompt Toxicity Score, and LLM Cost.
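
As an illustration of what a custom-defined metric might compute, the sketch below derives a cost figure and an up-vote rate from logged interactions. It does not use the DataRobot API; the per-token prices, field names, and log entries are hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; substitute your provider's actual rates.
PROMPT_PRICE_PER_1K = 0.0015
COMPLETION_PRICE_PER_1K = 0.002


@dataclass
class Interaction:
    prompt_tokens: int
    completion_tokens: int
    upvoted: bool


def llm_cost(interaction):
    """Cost of one request, priced separately for prompt and completion tokens."""
    return (
        interaction.prompt_tokens * PROMPT_PRICE_PER_1K
        + interaction.completion_tokens * COMPLETION_PRICE_PER_1K
    ) / 1000


def summarize(interactions):
    """Aggregate cost and user feedback over a batch of logged interactions."""
    if not interactions:
        return {"total_cost_usd": 0.0, "upvote_rate": None}
    total = sum(llm_cost(i) for i in interactions)
    upvote_rate = sum(i.upvoted for i in interactions) / len(interactions)
    return {"total_cost_usd": round(total, 6), "upvote_rate": upvote_rate}


log = [Interaction(1000, 500, True), Interaction(2000, 1000, False)]
report = summarize(log)
```

Tracking cost together with user feedback makes it possible to see whether a change to the prompt or the model improves answers at an acceptable price.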

Because infrastructure and environment management tasks are abstracted away, a single person can create an application such as RFPBot in hours or days, not weeks or months. By using an open, extensible platform for developing GenAI applications and following a repeatable framework, organizations avoid vendor lock-in and the accumulation of technical debt. They also vastly simplify model lifecycle management because they can upgrade and replace individual components within the framework over time.

Read more

- End-to-end Generative AI Applications with DataRobot (YouTube)

- Everything You Need to Know About LLMOps (White paper)

- 10 Key Considerations for Generative AI in Production (White paper)

- Future of Generative AI: A Frontline Practitioner’s Take on Adoption Trends (Blog, Medium)

- GenAI Accelerators GitHub repo