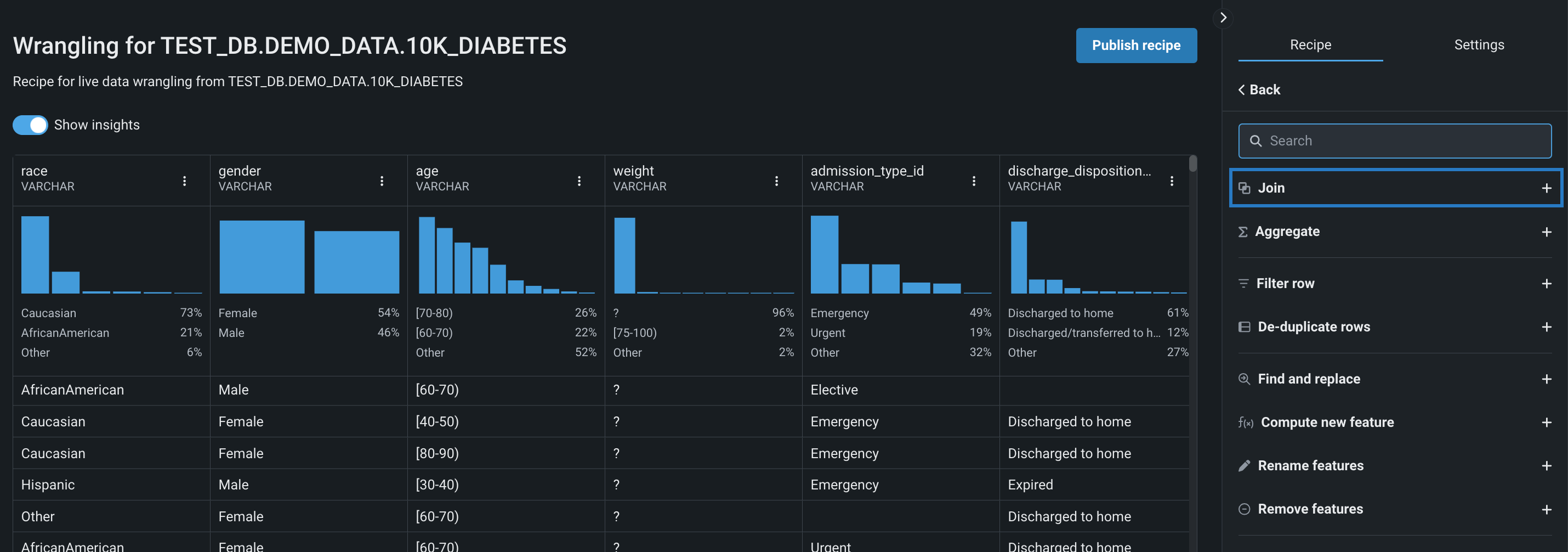

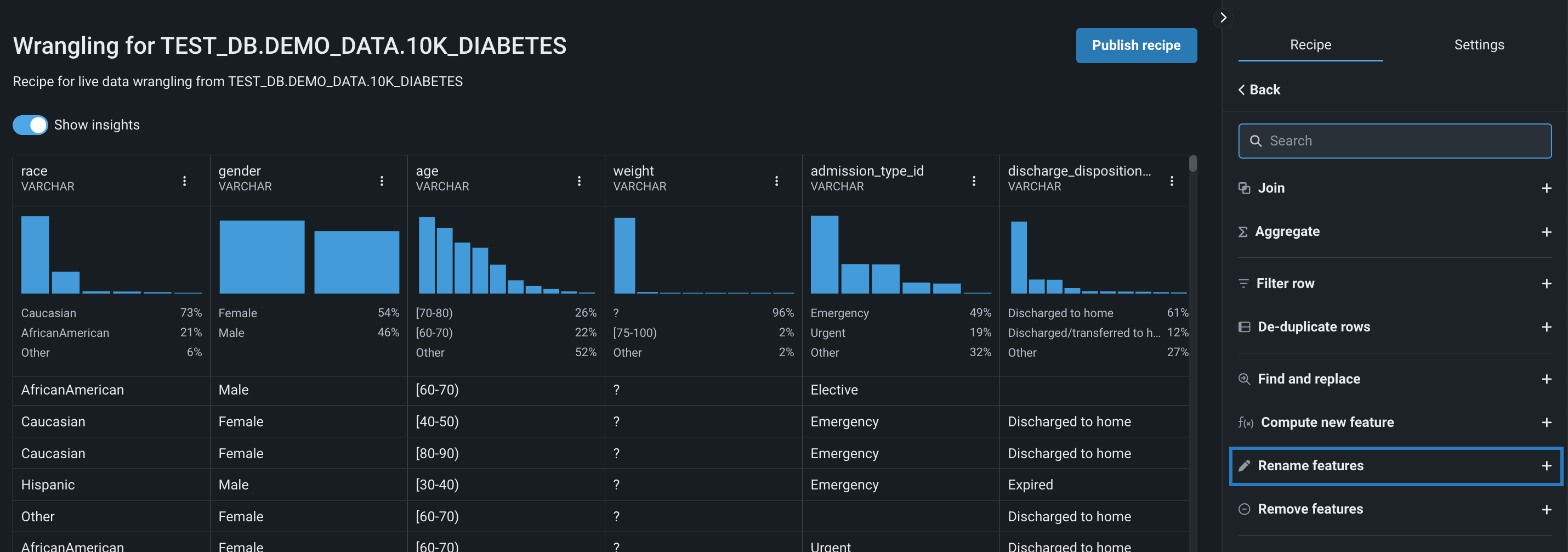

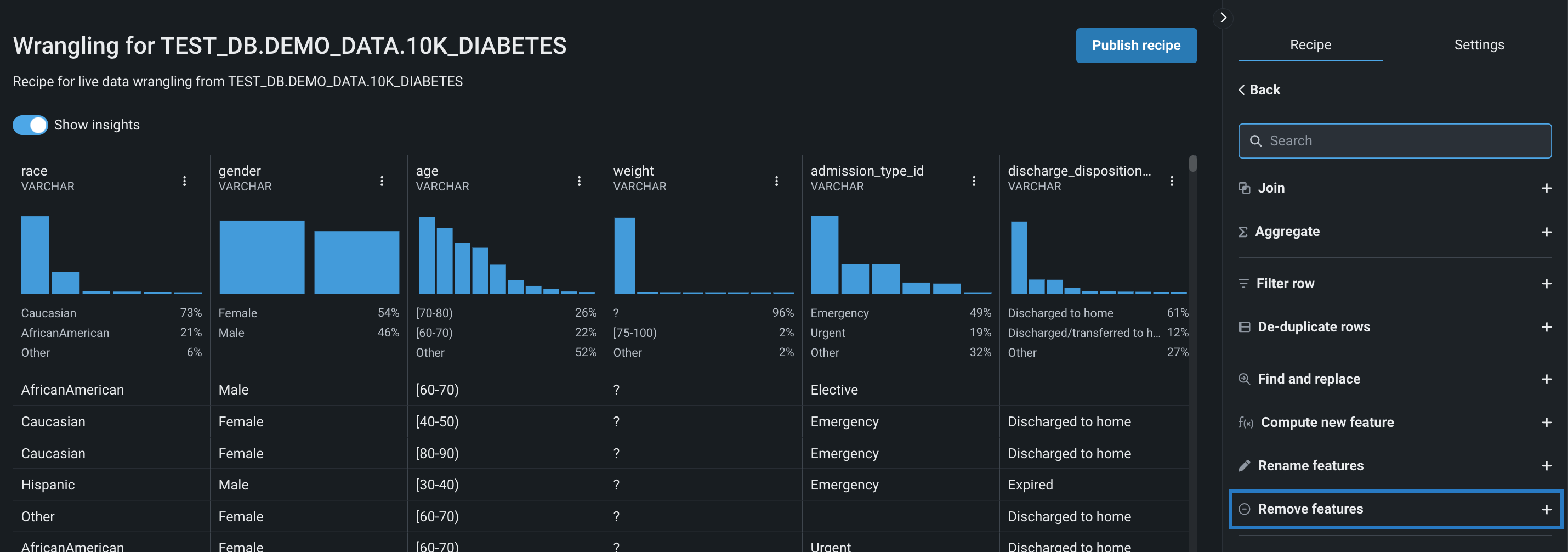

Build a recipe

Building a recipe is the first step in preparing your data. When you start a Wrangle session, DataRobot connects to your data source, pulls a live random sample, and performs exploratory data analysis on that sample. When you add operations to your recipe, the transformation is applied to the sample and the exploratory data insights are recalculated, allowing you to quickly iterate on and profile your data before publishing.

Wrangling requirement

To wrangle data, you must add a dataset using a configured data connection.

Availability information

The ability to perform wrangling and pushdown on datasets stored in the Data Registry is off by default. Contact your DataRobot representative or administrator for information on enabling the feature.

To wrangle Data Registry datasets, you must first add the dataset to your Use Case. Then, you can begin wrangling from the Actions menu next to the dataset. Note that this feature is only available for multi-tenant SaaS users and installations with AWS VPC or Google VPC environments.

Feature flag: Enable Wrangling Pushdown for Data Registry Datasets

Note that when you wrangle a dataset in your Use Case, including re-wrangling the same dataset, DataRobot creates and saves a copy of the recipe in the Data tab regardless of whether or not you add operations to it. Then, each time you modify the recipe, your changes are automatically saved. Additionally, you can open saved recipes to continue making changes.

See also:

- Associated considerations for important additional information.

- A complete list of available connections in Workbench and which features they support.

- Tips for improving the performance of wrangling large Snowflake datasets.

Modify wrangling settings

In a recipe, you can edit the metadata to make the summary information more descriptive for future use, and change the number of rows included in the live sample.

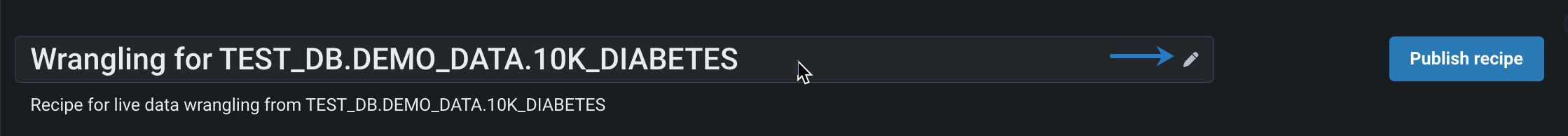

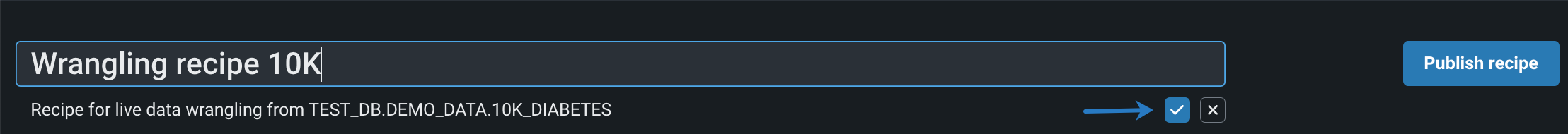

Edit the recipe metadata

By default, DataRobot assigns a name and description to each wrangling recipe based on the source data; however, you can modify this information to make it more applicable to your specific use case.

To edit the recipe metadata:

- Hover over the field you want to edit, either the title or the description. Then, click the field or the pencil icon to the right.

- Modify the name or description, and when you're done, click outside the field or the check mark on the right to save your changes.

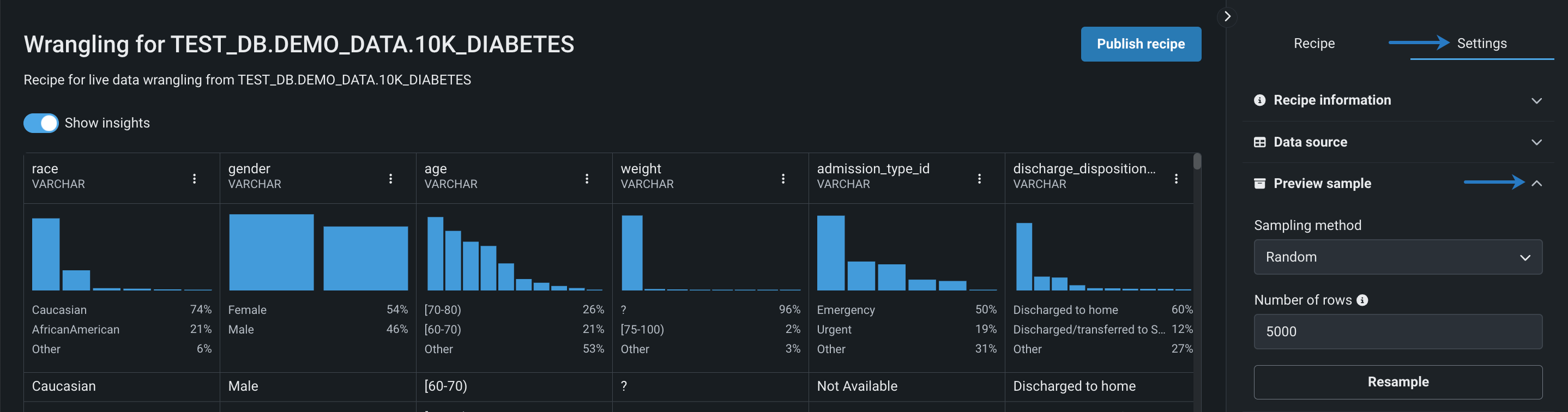

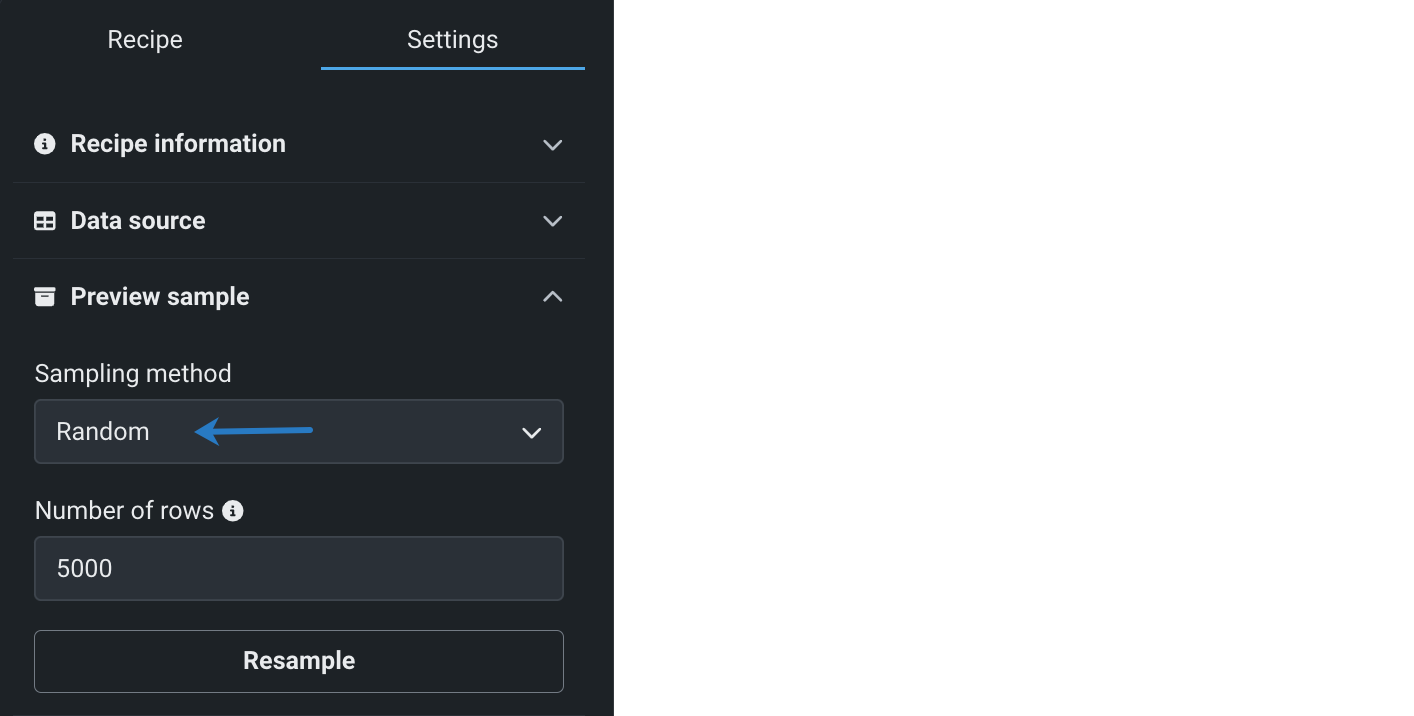

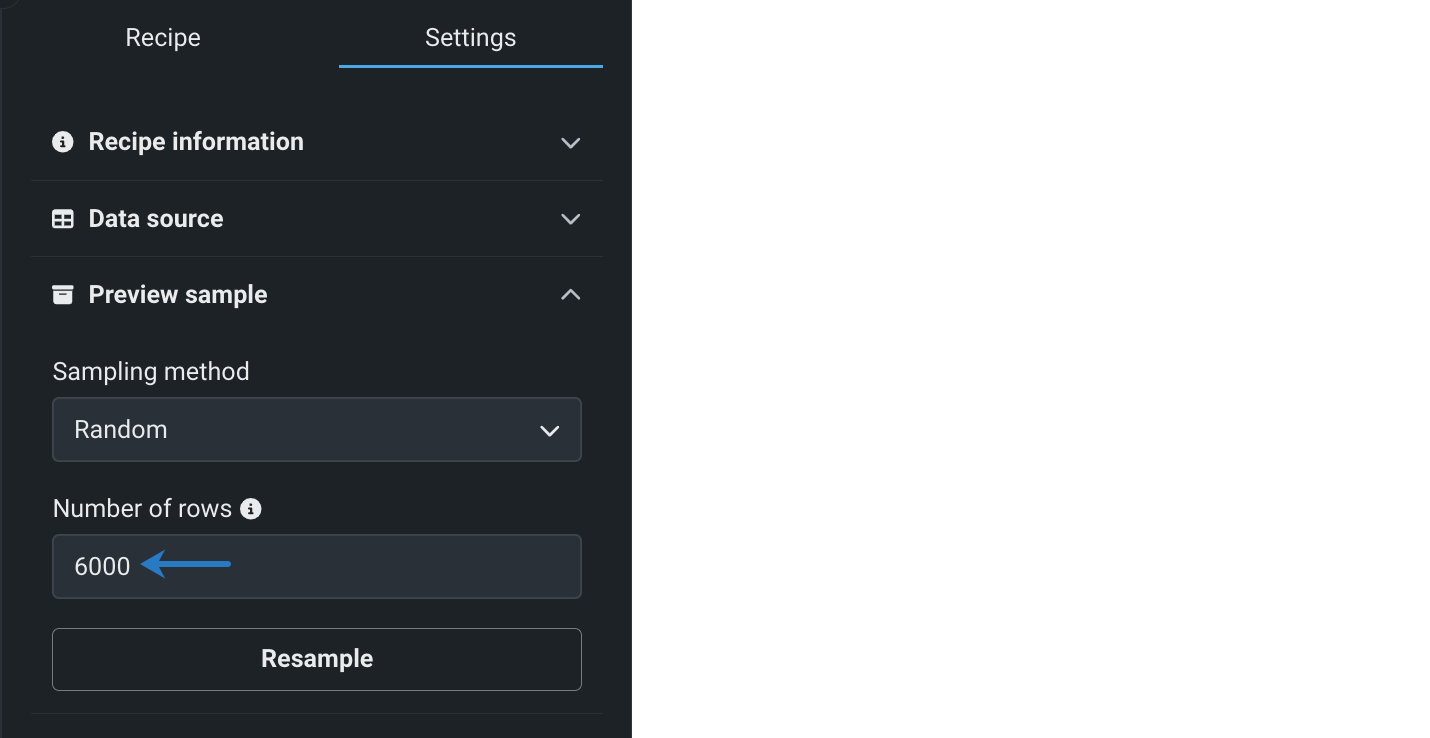

Configure the live sample

By default, DataRobot retrieves 10000 random rows for the live sample; however, you can modify this number and the sampling method in the wrangling settings. Note that the more rows you retrieve, the longer it takes to render the live sample.

To configure the live sample:

- Click Settings in the right panel and open Preview sample.

- Select a Sampling method from the dropdown: either Random or First-N Rows.

- Specify the Number of rows to be retrieved from the source data. Enter the number of rows (under 10000) you want to include in the live sample and click Resample. The live sample updates to display the specified number of rows.
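The difference between the two sampling methods can be sketched in plain Python. This is an illustration only: the function names `first_n_rows` and `random_rows` are hypothetical, and DataRobot draws its sample from the data source rather than from a list in local memory.

```python
import random


def first_n_rows(rows, n):
    """First-N Rows: take the first n rows in source order."""
    return rows[:n]


def random_rows(rows, n, seed=None):
    """Random: draw n rows without replacement (all rows if fewer than n exist)."""
    rng = random.Random(seed)
    return rng.sample(rows, min(n, len(rows)))


source = list(range(100))  # stand-in for 100 rows in the source table
head = first_n_rows(source, 5)
sampled = random_rows(source, 5, seed=0)
```

A First-N sample is cheap and repeatable but can be unrepresentative if the source is sorted, which is one reason a random sample is often the safer choice for profiling.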

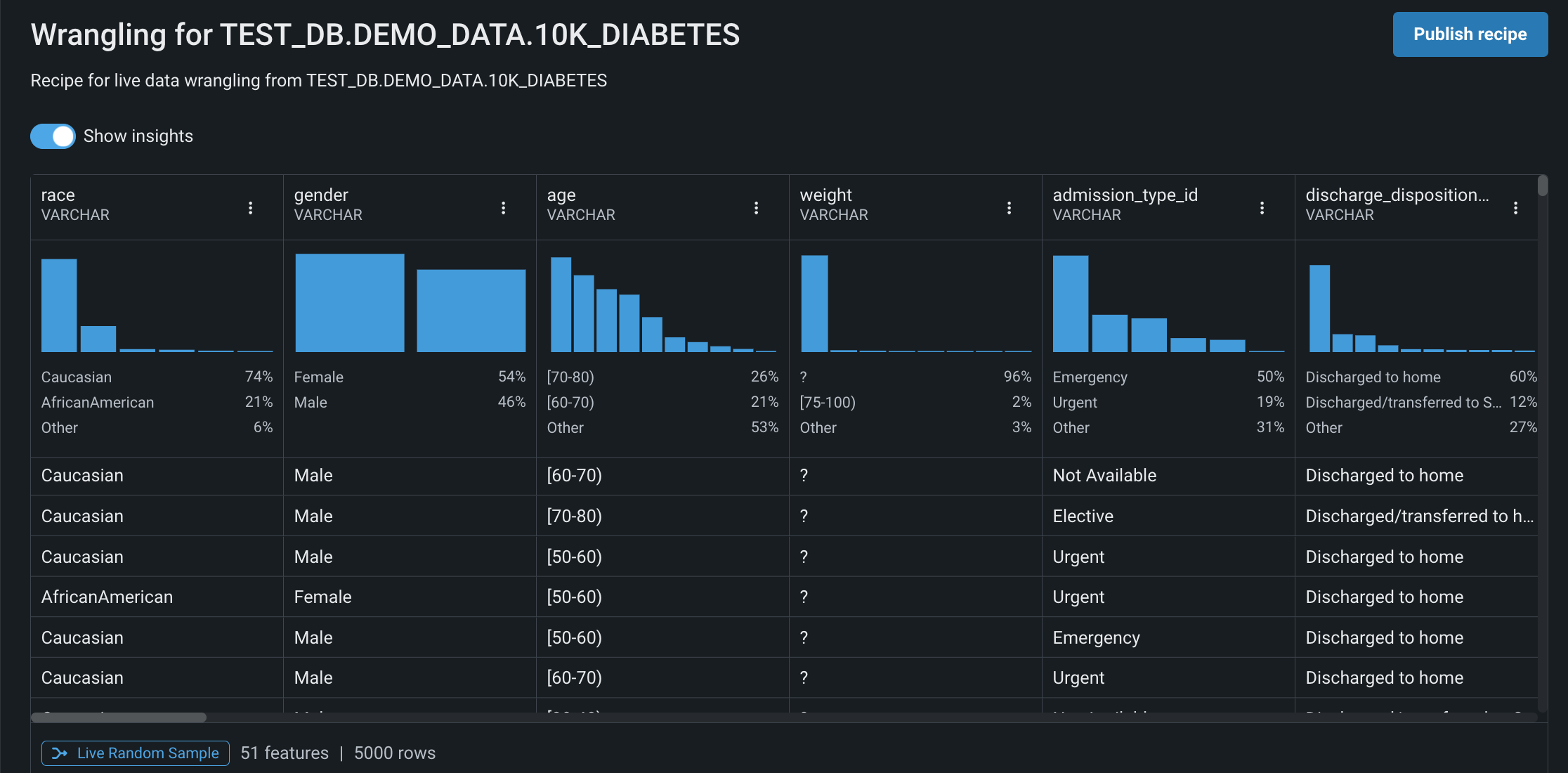

Analyze the live sample

During data wrangling, DataRobot performs exploratory data analysis on the live sample, generating table- and column-level summary statistics and visualizations that help you profile the dataset and recognize data quality issues as you apply operations. For more information on interacting with the live sample, see the section on exploratory data insights.

Speed up live sample

To speed up the time it takes to retrieve and render the live sample, use the toggle next to Show Insights to hide the feature distribution charts.

Live sample vs. exploratory data insights on the Data tab

Although both pages provide similar insights, you can specify the number of rows displayed in the live sample and it updates each time a transformation is added to your recipe.

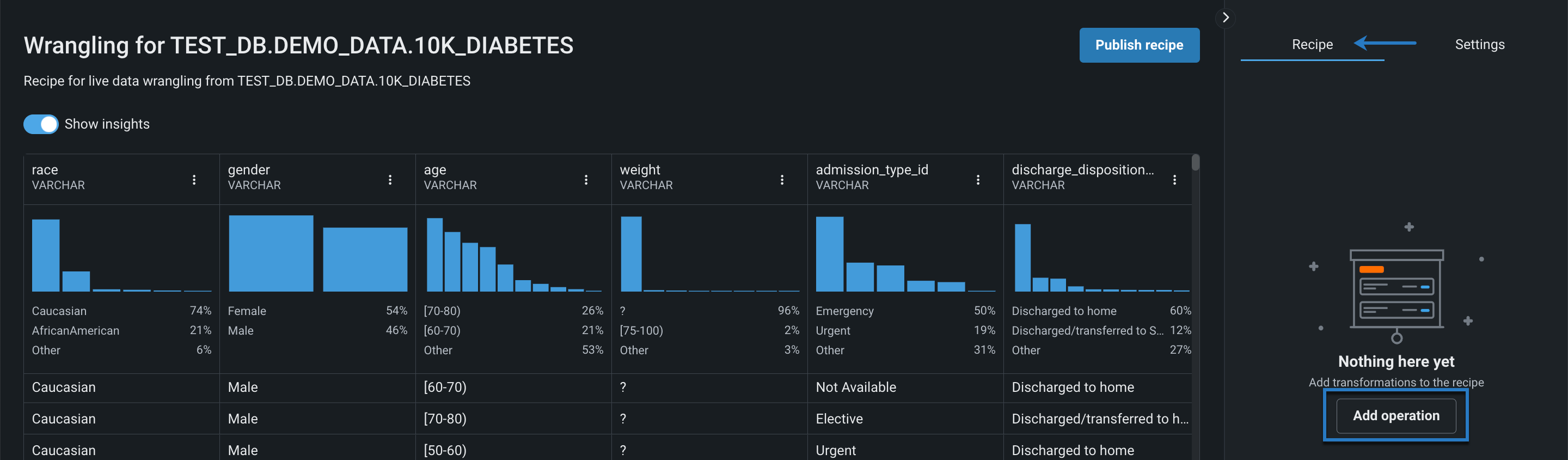

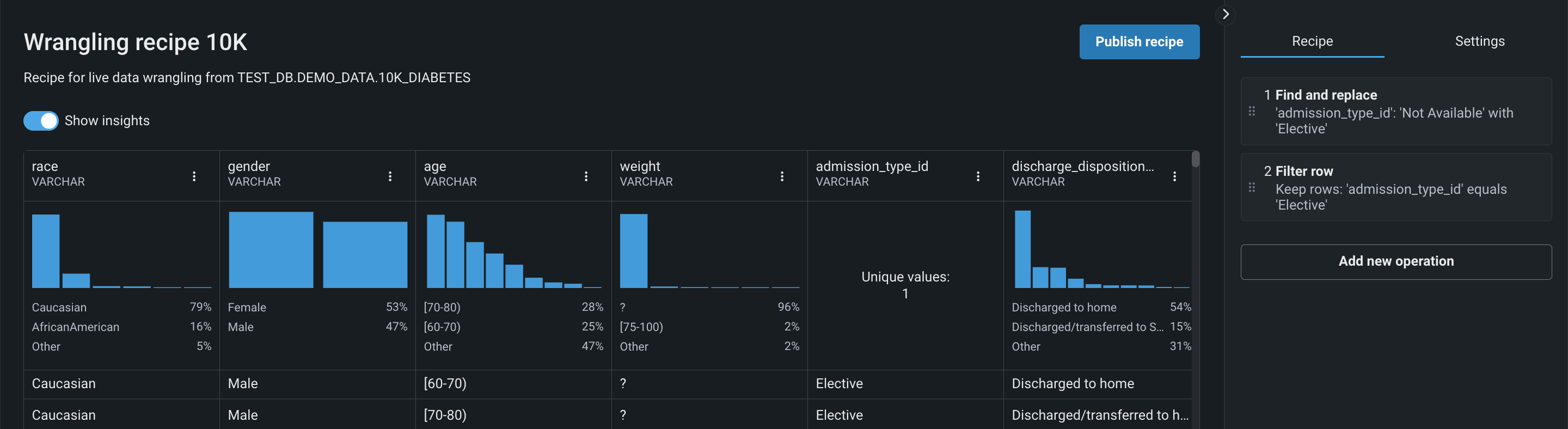

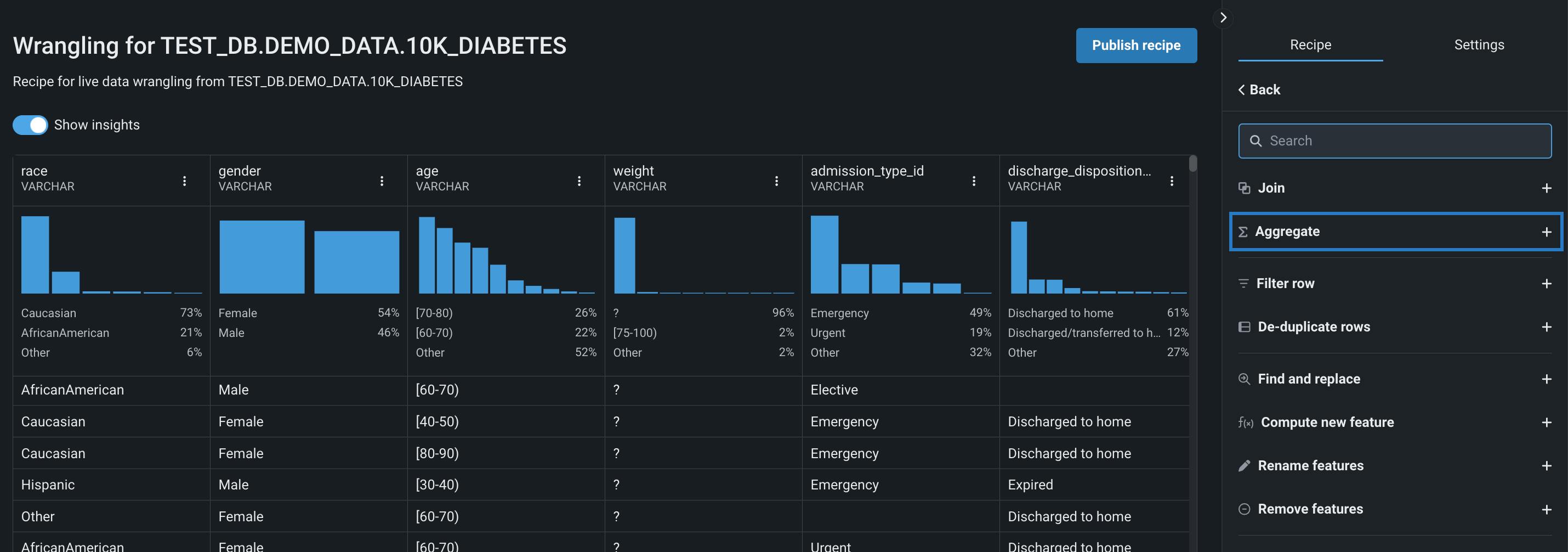

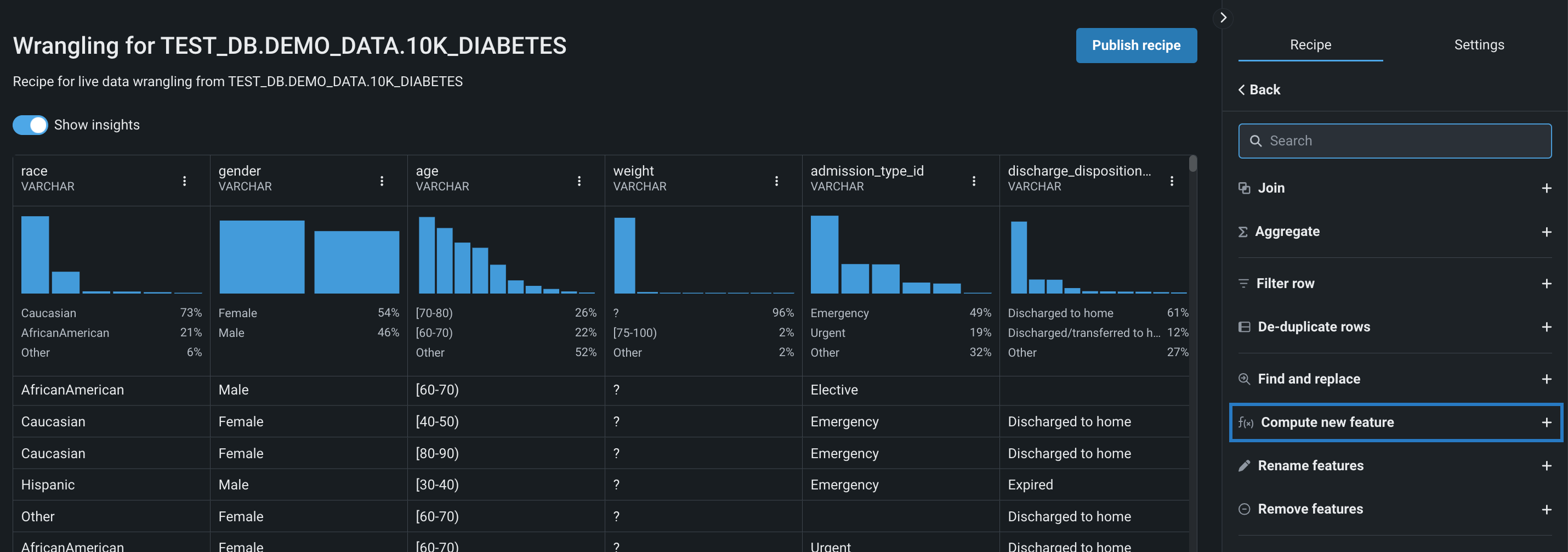

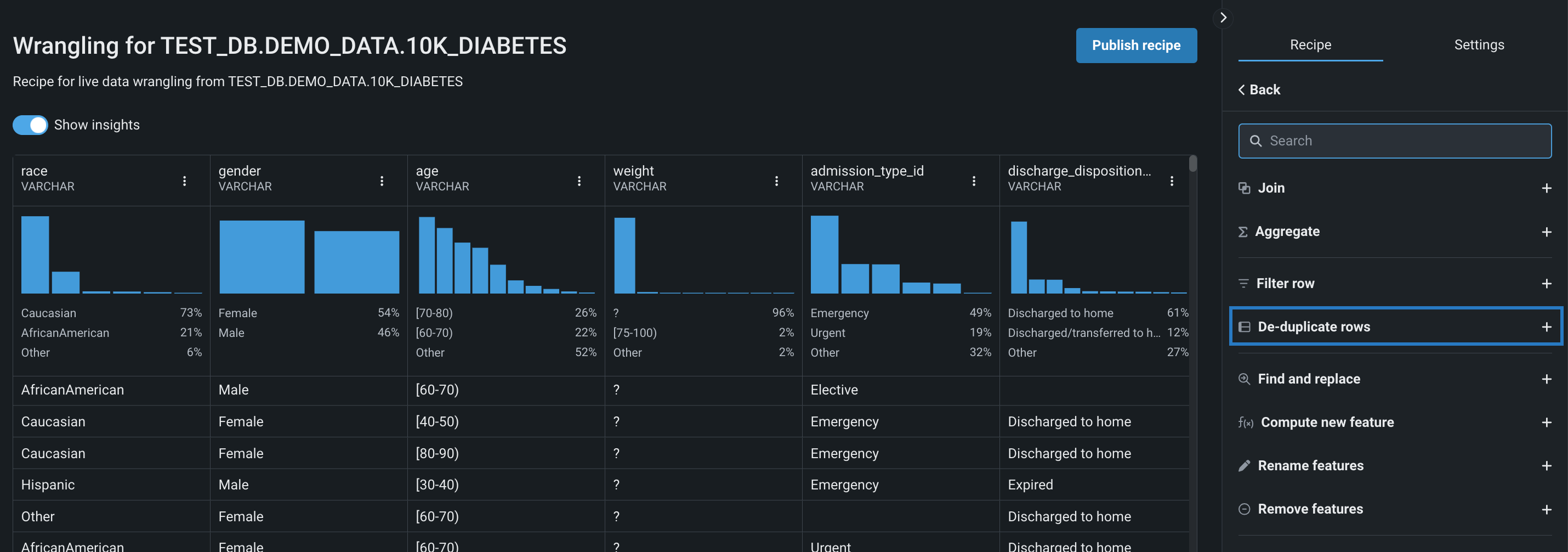

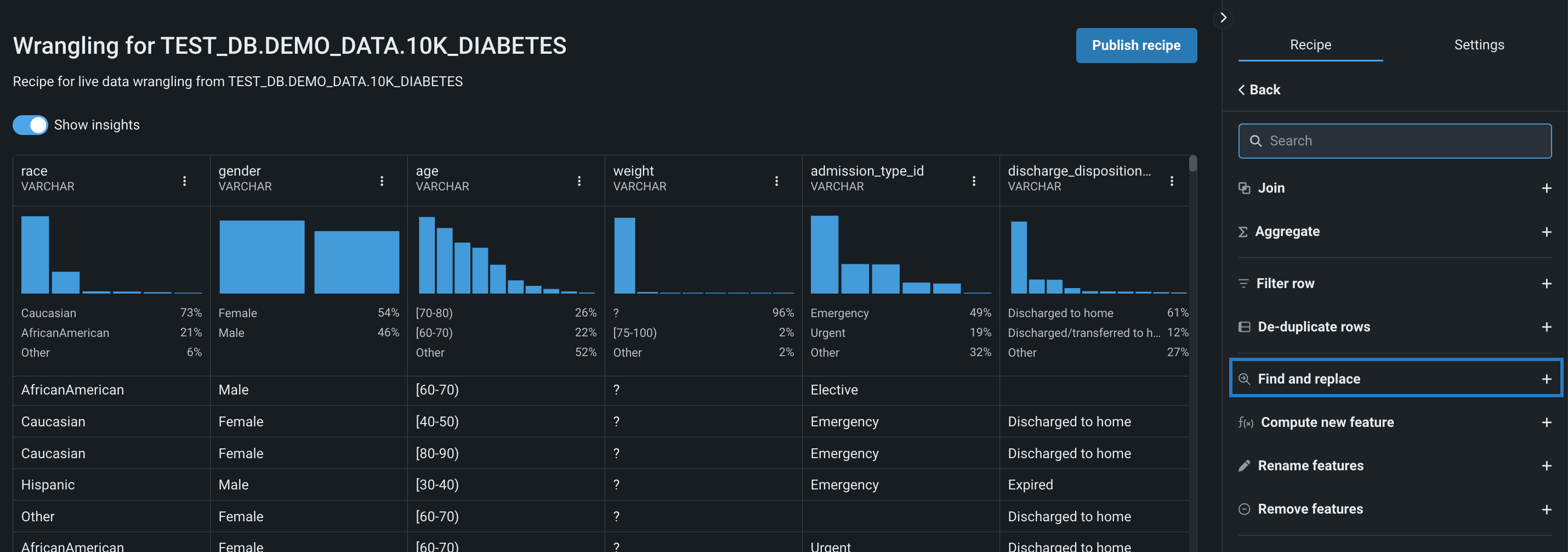

Add operations

A recipe is composed of operations—transformations that will be applied to the source data to prepare it for modeling. Note that operations are applied sequentially, so you may need to reorder the operations in your recipe to achieve the desired result.

Operation behavior

When a wrangling recipe is pushed down to the connected cloud data platform, the operations are executed in that platform's environment. To understand how operations behave, refer to the documentation for your data platform.

Once the dataset is materialized in DataRobot and added to your Use Case, you can go to the Data tab and view which queries were executed by the cloud data platform during pushdown.

The table below describes the wrangling operations currently available in Workbench:

| Operation | Description |
|---|---|
| Join | Join datasets that are accessible via the same connection instance. |
| Aggregate | Apply mathematical aggregations to features in your dataset. |
| Compute new feature | Create a new feature using scalar subqueries, scalar functions, or window functions. |
| Filter row | Filter the rows in your dataset according to specified value(s) and conditions. |
| De-duplicate rows | Automatically remove all duplicate rows from your dataset. |
| Find and replace | Replace specific feature values in a dataset. |
| Rename features | Change the name of one or more features in your dataset. |
| Remove features | Remove one or more features from your dataset. |

Can I perform majority class downsampling for unbalanced datasets?

Yes, you can enable majority class downsampling during the publishing phase of wrangling. In Workbench, downsampling happens in-source and sampling weight is generated. The target and weights are then passed along to the experiment.
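To make the idea of downsampling with weights concrete, here is a minimal sketch in plain Python. It is not DataRobot's in-source implementation, which is not described here. The function name `downsample_majority`, the `ratio` parameter, and the weight formula (original majority count divided by retained majority count) are assumptions chosen for illustration.

```python
import random
from collections import Counter


def downsample_majority(rows, target, ratio, seed=0):
    """Keep every minority row and about `ratio` of the majority rows.

    Returns (row, weight) pairs. The weight formula is an assumption:
    each kept majority row stands in for the majority rows that were dropped.
    """
    counts = Counter(row[target] for row in rows)
    majority = counts.most_common(1)[0][0]
    majority_rows = [r for r in rows if r[target] == majority]
    other_rows = [r for r in rows if r[target] != majority]
    keep = max(1, round(len(majority_rows) * ratio))
    kept = random.Random(seed).sample(majority_rows, keep)
    weight = len(majority_rows) / keep
    return [(r, weight) for r in kept] + [(r, 1.0) for r in other_rows]


rows = [{"label": 0} for _ in range(90)] + [{"label": 1} for _ in range(10)]
weighted = downsample_majority(rows, "label", ratio=0.2)
```

In this example, 18 of the 90 majority rows are kept with a weight of 5.0 each, so the weighted row count still sums to the original 100.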

To add an operation to your recipe:

- With Recipe selected, click Add Operation in the right panel.

- Select and configure an operation. Then, click Add to recipe.

  The live sample updates after DataRobot retrieves a new sample from the data source and applies the operation, allowing you to review the transformation in real time.

- Continue adding operations while analyzing their effect on the live sample; when you're done, the recipe is ready to be published.

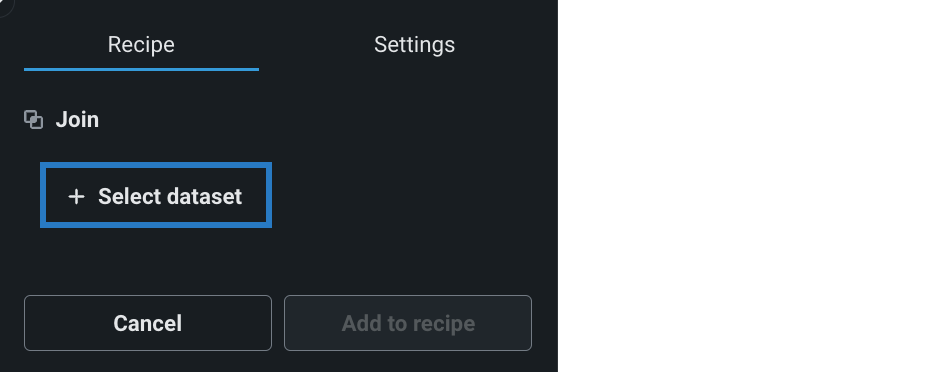

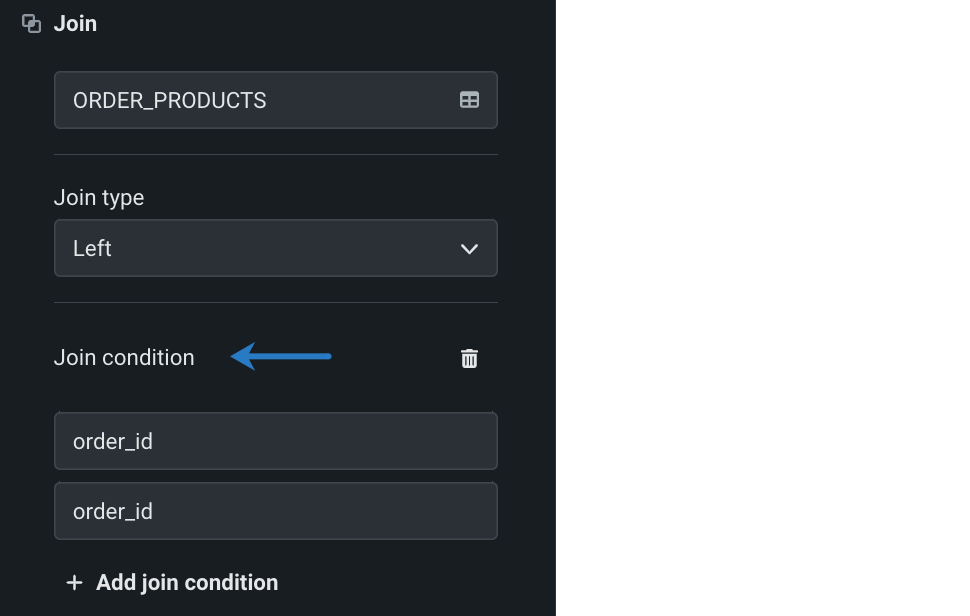

Join

Use the Join operation to combine datasets that are accessible via the same connection instance.

To join a table or dataset:

-

Click Join in the right panel.

-

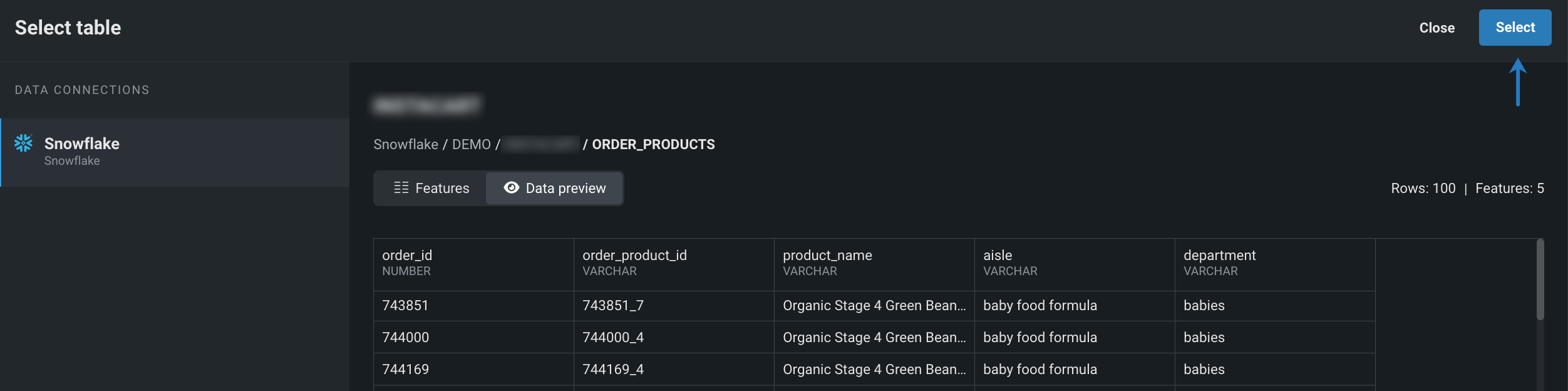

Click + Select dataset to browse and select a dataset from your connection instance.

-

Once you've opened and profiled the dataset you want to add, click Select.

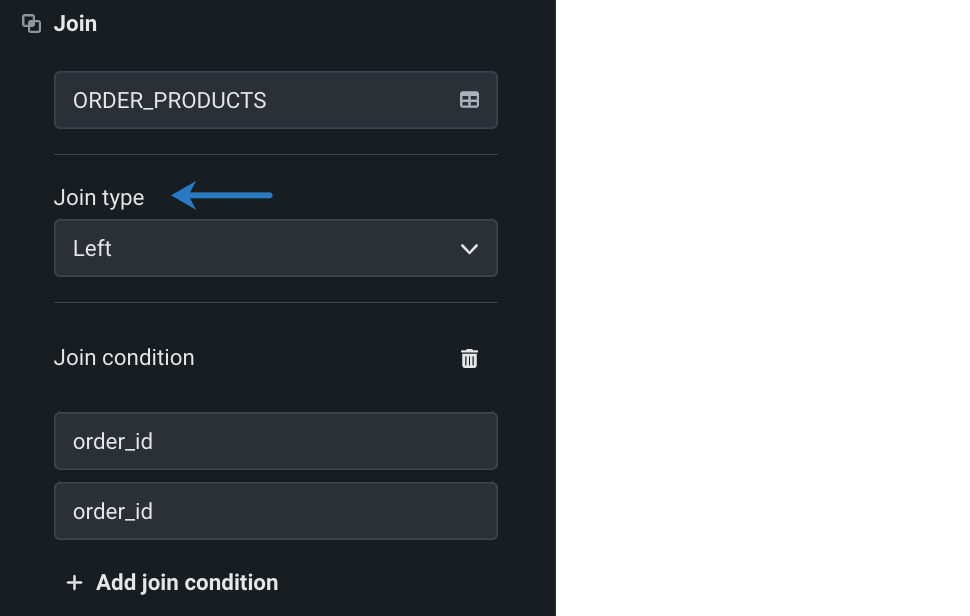

-

Select the appropriate Join type from the dropdown.

- Inner only returns rows that have matching values in both datasets, for example, any rows with matching values in the

order_idcolumn. - Left returns all rows from the left dataset (the original), and only the rows with matching values in the right dataset (joined).

- Inner only returns rows that have matching values in both datasets, for example, any rows with matching values in the

-

Select the Join condition, which defines how the two datasets are related. In this example, both the datasets are related by

order_id.

-

Click Add to recipe.
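The two join types can be illustrated with a small, self-contained Python sketch. The `join` helper and the `orders` and `payments` sample rows are hypothetical; in practice the join is executed by your data platform, not in Python.

```python
def join(left, right, key, how="inner"):
    """Join two lists of dict rows on `key`; `how` is "inner" or "left"."""
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    right_cols = {c for row in right for c in row if c != key}

    result = []
    for row in left:
        matches = index.get(row[key], [])
        if matches:
            result.extend({**row, **m} for m in matches)
        elif how == "left":
            # No match: keep the left row and fill right-hand columns with None.
            result.append({**row, **{c: None for c in right_cols}})
    return result


orders = [{"order_id": 1, "item": "pen"}, {"order_id": 2, "item": "ink"}]
payments = [{"order_id": 1, "amount": 3.5}]

inner = join(orders, payments, "order_id", how="inner")
left = join(orders, payments, "order_id", how="left")
```

Here `inner` contains only order 1, while `left` also keeps order 2 with `amount` set to `None`.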

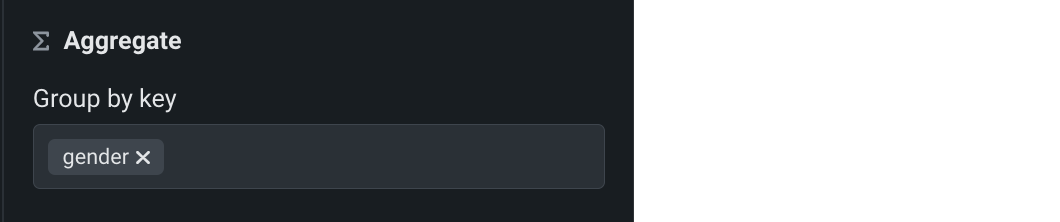

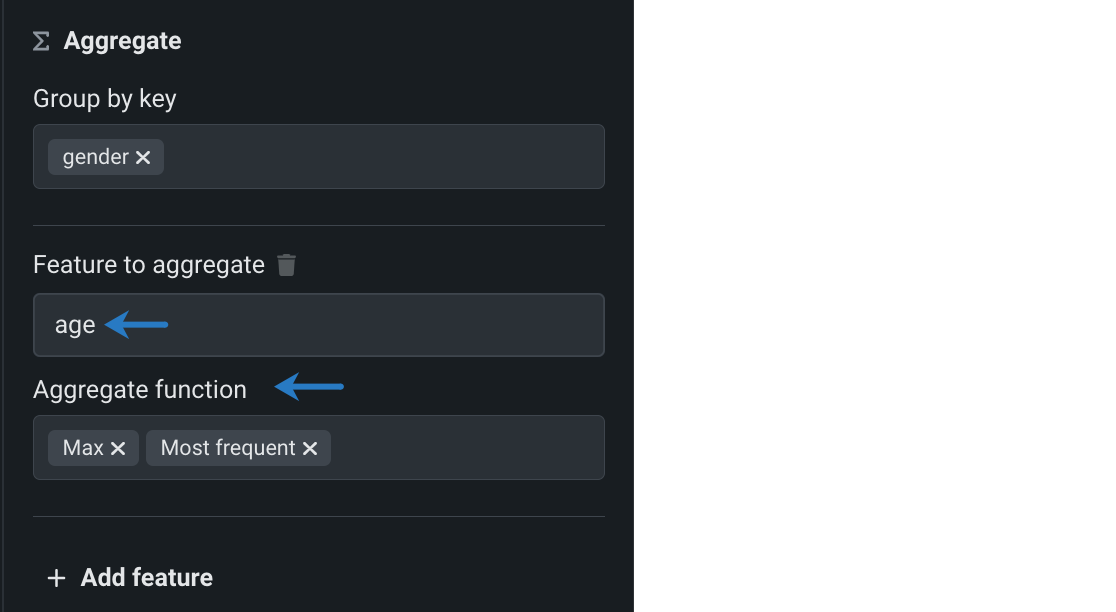

Aggregate

Use the Aggregate operation to apply the following mathematical aggregations to the dataset (available aggregations vary by feature type):

- Sum

- Min

- Max

- Avg

- Standard deviation

- Count

- Count distinct

- Most frequent (Snowflake only)

To add an aggregation:

- Click Aggregate in the right panel.

- Under Group by key, select the feature(s) you want to group your aggregation(s) by.

- Click the field below Feature to aggregate and select a feature from the dropdown. Then, click the field below Aggregate function and choose one or more aggregations to apply to the feature.

- (Optional) Click + Add feature to apply aggregations to additional features in this grouping.

- Click Add to recipe.

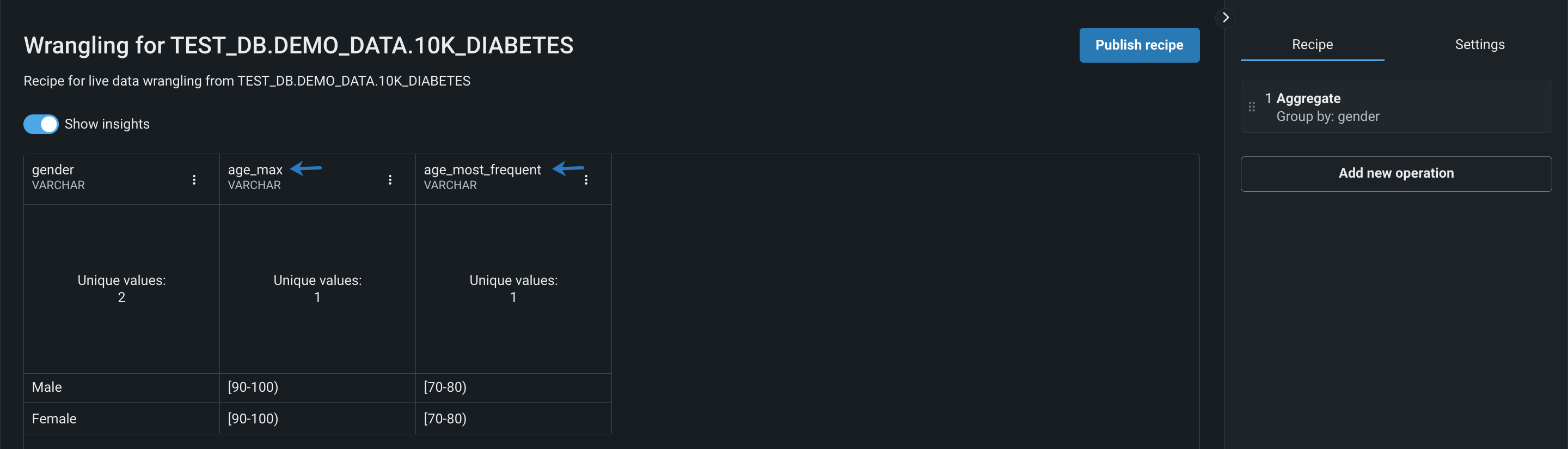

After adding the operation to the recipe, DataRobot renames aggregated features using the original name with the `_AggregationFunction` suffix attached. In this example, the new columns are `age_max` and `age_most_frequent`.
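The grouping and naming behavior can be sketched in plain Python. This is an illustration under stated assumptions: the `aggregate` helper, the `AGGREGATIONS` mapping, and the `gender` column are hypothetical, and only a few of the listed functions are included.

```python
from collections import defaultdict

# A small subset of aggregation functions, keyed by the suffix they produce.
AGGREGATIONS = {"max": max, "min": min, "sum": sum, "count": len}


def aggregate(rows, group_by, feature, functions):
    """Group rows by `group_by` and apply each named function to `feature`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_by]].append(row[feature])
    return [
        {group_by: key, **{f"{feature}_{fn}": AGGREGATIONS[fn](values) for fn in functions}}
        for key, values in groups.items()
    ]


patients = [
    {"gender": "F", "age": 34},
    {"gender": "F", "age": 51},
    {"gender": "M", "age": 47},
]
summary = aggregate(patients, "gender", "age", ["max", "count"])
```

Each output row holds the group key plus one column per aggregation, named with the feature name and the function suffix, such as `age_max`.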

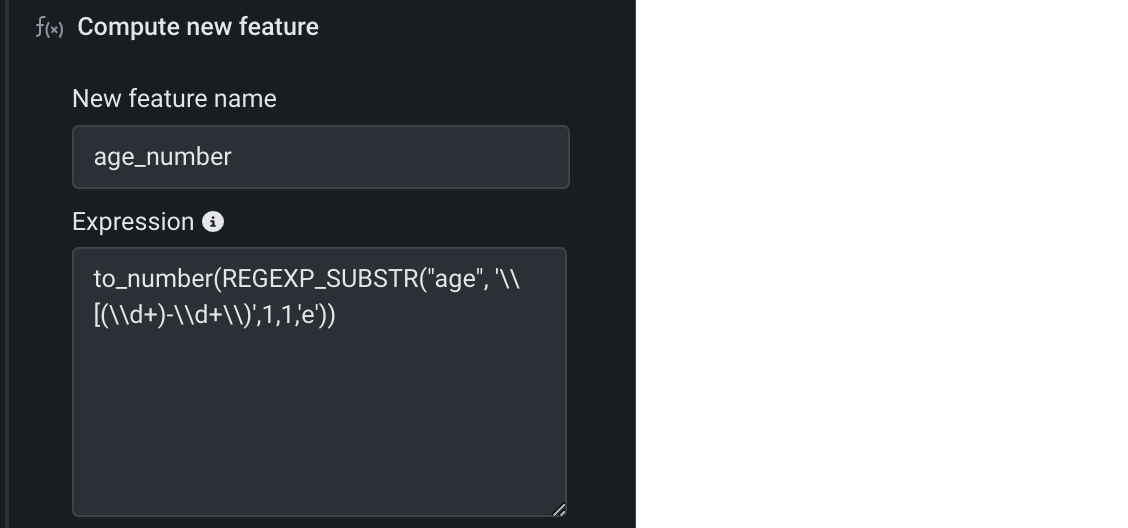

Compute a new feature

Use the Compute new feature operation to create a new output feature from existing features in your dataset. By applying domain knowledge, you can create features that do a better job of representing your business problem to the model than those in the original dataset.

To compute a new feature:

- Click Compute new feature in the right panel.

- Enter a name for the new feature, and under Expression, define the feature using scalar subqueries, scalar functions, or window functions for your chosen cloud data platform:

  See the Snowflake documentation for:

  See the BigQuery documentation for:

  See the Databricks documentation for:

  See the Spark SQL documentation for:

  This example uses `REGEXP_SUBSTR` to extract the first number from the `[<age_range_start> - <age_range_end>)` value in the `age` column, and `to_number` to convert the output from a string to a number.

  Expression formatting

  For guidance on how to format your Compute new feature expressions, see the Expression field, which provides an example based on your data connection.

- Click Add to recipe.
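The logic of the example, extracting the first number from an age range and converting it to a numeric value, can be mirrored in Python for clarity. This is an analogy, not the SQL expression itself; the function name `age_range_start` and the sample value `"[40-50)"` are assumptions.

```python
import re


def age_range_start(age_range):
    """Return the first number in a range such as "[40-50)", or None if absent."""
    match = re.search(r"\d+", age_range)
    return int(match.group()) if match else None


start = age_range_start("[40-50)")
```

The regular expression plays the role of the substring extraction, and `int()` plays the role of the string-to-number conversion.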

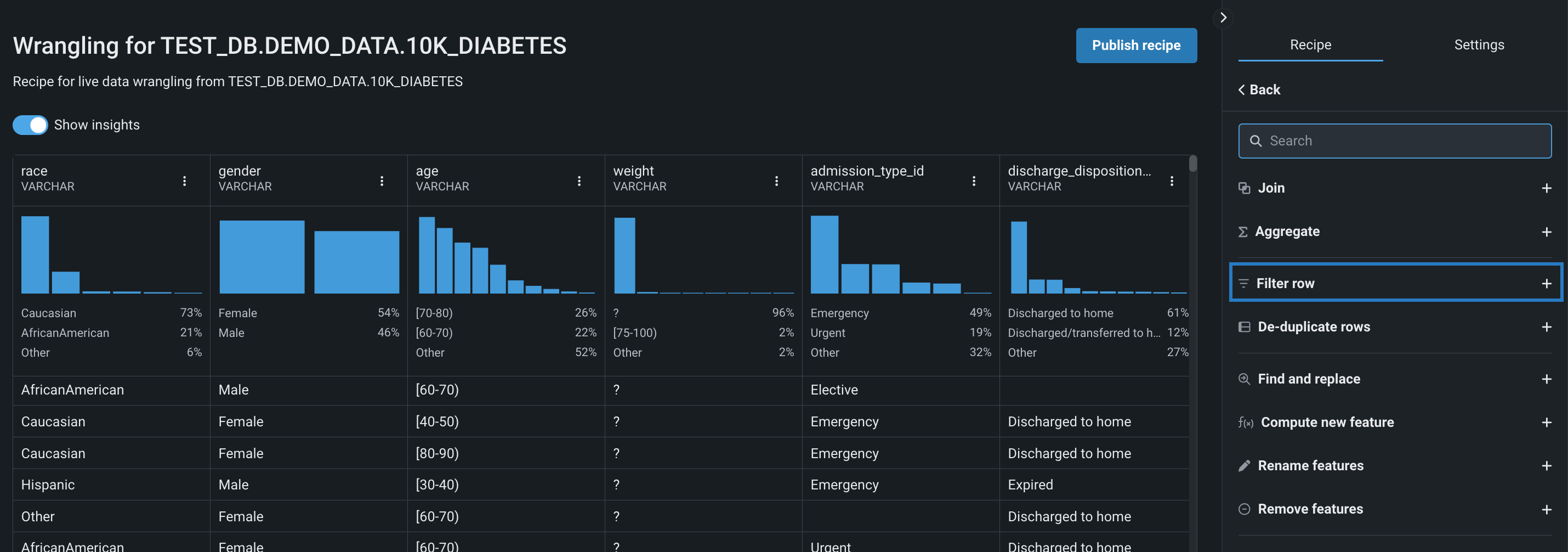

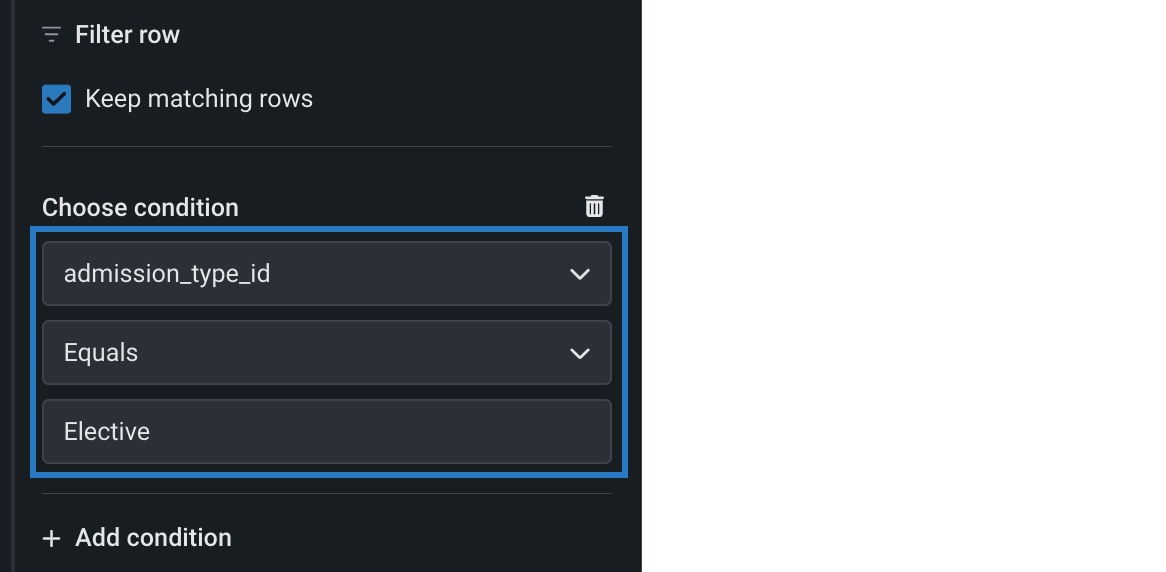

Filter row

Use the Filter row operation to filter the rows in your dataset according to specified value(s) and conditions.

To filter rows:

- Click Filter row in the right panel.

- Decide if you want to keep the rows that match the defined conditions or exclude them.

- Define the filter conditions by choosing the feature you want to filter, the condition type, and the value you want to filter by. DataRobot highlights the selected column.

- (Optional) Click Add condition to define additional filtering criteria.

- Click Add to recipe.
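The keep-or-exclude choice can be sketched as follows. The `filter_rows` helper and the condition names are hypothetical, and the sketch assumes that multiple conditions must all match; this page does not state how conditions are combined.

```python
import operator

# Hypothetical condition names mapped to comparison functions.
CONDITIONS = {
    "equals": operator.eq,
    "greater than": operator.gt,
    "less than": operator.lt,
}


def filter_rows(rows, conditions, keep=True):
    """Keep (or exclude) rows matching every (feature, condition, value) triple.

    Assumption: conditions are combined with AND.
    """
    def matches(row):
        return all(CONDITIONS[cond](row[feature], value) for feature, cond, value in conditions)

    return [row for row in rows if matches(row) == keep]


rows = [
    {"age": 25, "city": "Oslo"},
    {"age": 40, "city": "Oslo"},
    {"age": 52, "city": "Rome"},
]
criteria = [("age", "greater than", 30), ("city", "equals", "Oslo")]
kept = filter_rows(rows, criteria, keep=True)
excluded = filter_rows(rows, criteria, keep=False)
```

Keeping and excluding are complements: every input row lands in exactly one of `kept` or `excluded`.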

De-duplicate rows

Use the De-duplicate rows operation to automatically remove all rows with duplicate information from the dataset.

To de-duplicate rows, click De-duplicate rows in the right panel. This operation is immediately added to your recipe and applied to the live sample.
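As a rough illustration of what removing duplicate rows means, the following sketch treats two rows as duplicates only when every feature value matches. The `deduplicate` helper is hypothetical, and keeping the first occurrence is an assumption made so the example has a stable output order.

```python
def deduplicate(rows):
    """Drop rows whose values all match an earlier row, keeping the first occurrence."""
    seen = set()
    unique = []
    for row in rows:
        # Sort by column name so column order does not affect the comparison.
        fingerprint = tuple(sorted(row.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(row)
    return unique


rows = [
    {"order_id": 1, "item": "pen"},
    {"order_id": 1, "item": "pen"},
    {"order_id": 1, "item": "ink"},
]
unique_rows = deduplicate(rows)
```

The third row is kept because it differs in `item`, even though it shares an `order_id` with the others.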

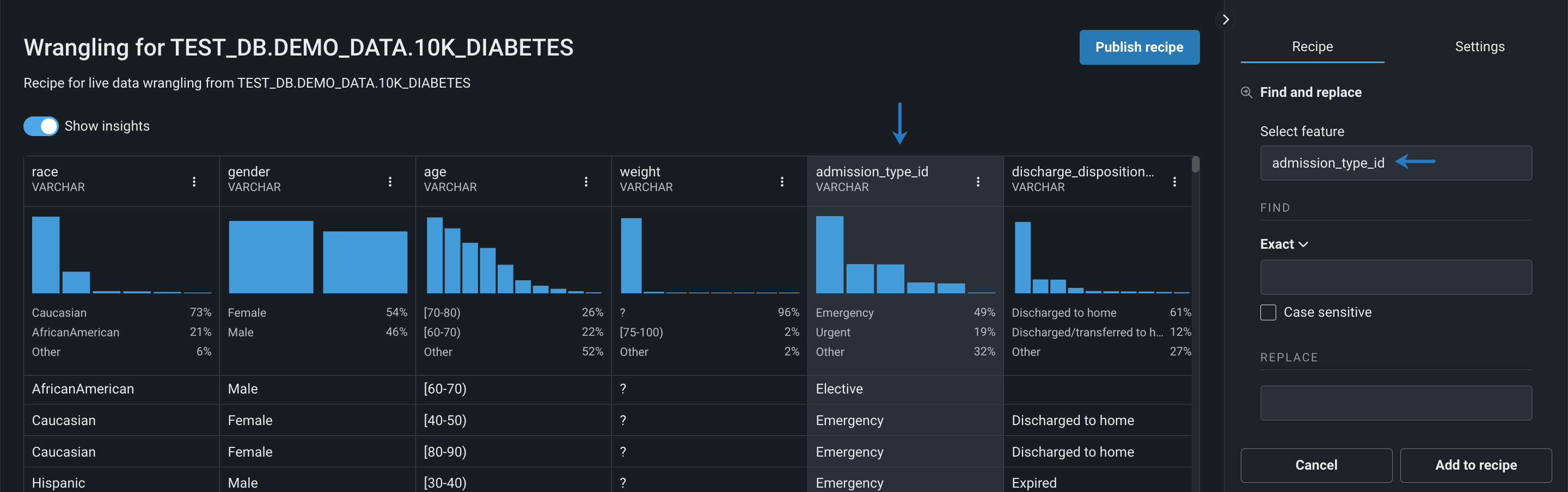

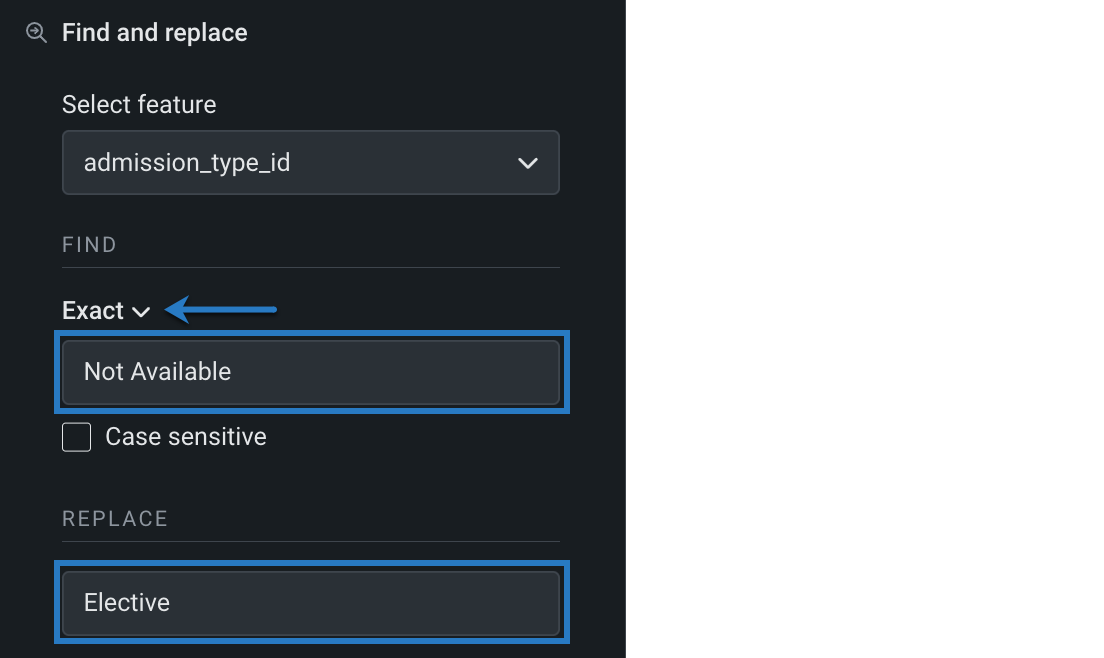

Find and replace

Use the Find and replace operation to quickly replace specific feature values in a dataset. For example, this is helpful for fixing typos in a dataset.

To find and replace a feature value:

- Click Find and replace in the right panel.

- Under Select feature, click the dropdown and choose the feature that contains the value you want to replace. DataRobot highlights the selected column.

- Under Find, choose the match criteria (Exact, Partial, or Regular Expression) and enter the feature value you want to replace. Then, under Replace, enter the new value.

- Click Add to recipe.
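The three match criteria can be compared with a short Python sketch. The `find_and_replace` helper is hypothetical. It assumes that a partial match replaces only the matched substring and that matching is case-sensitive; the exact semantics on your data platform may differ.

```python
import re


def find_and_replace(values, find, replace, match="exact"):
    """Replace values using "exact", "partial", or "regex" matching."""
    if match == "exact":
        # The whole value must equal `find`.
        return [replace if v == find else v for v in values]
    if match == "partial":
        # Assumption: only the matched substring is replaced.
        return [v.replace(find, replace) for v in values]
    if match == "regex":
        return [re.sub(find, replace, v) for v in values]
    raise ValueError(f"unknown match type: {match}")


cities = ["New Yrok", "Boston", "new yrok city"]
exact = find_and_replace(cities, "New Yrok", "New York")
partial = find_and_replace(cities, "Yrok", "York", match="partial")
regex = find_and_replace(cities, r"(?i)yrok", "York", match="regex")
```

Only the regular expression, which uses a case-insensitive flag, fixes the typo in the lowercase third value.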

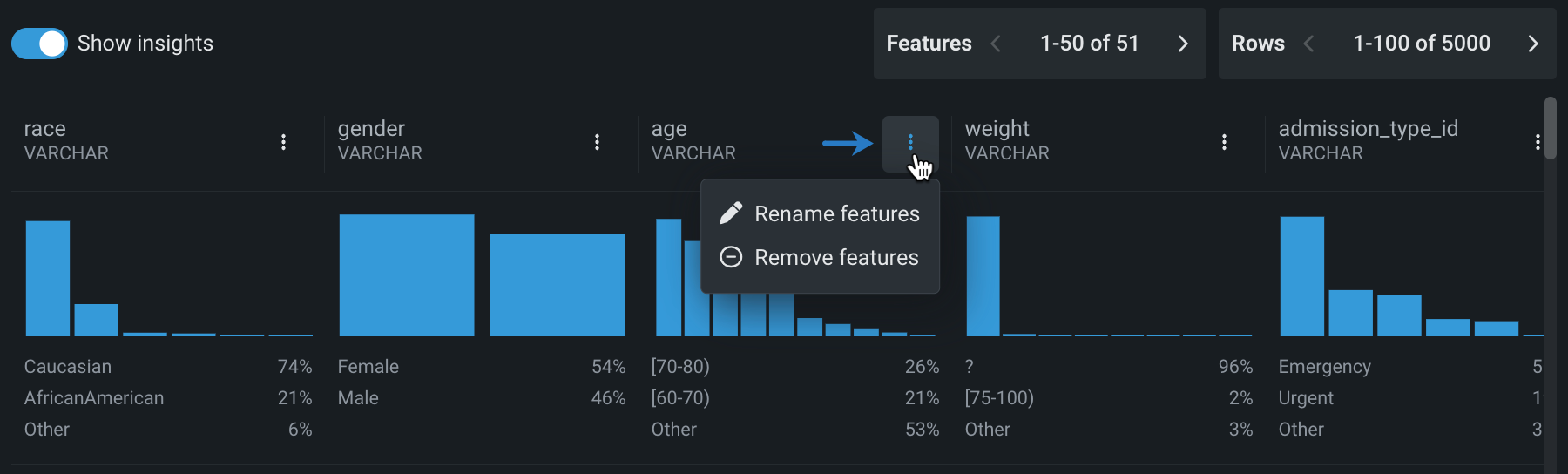

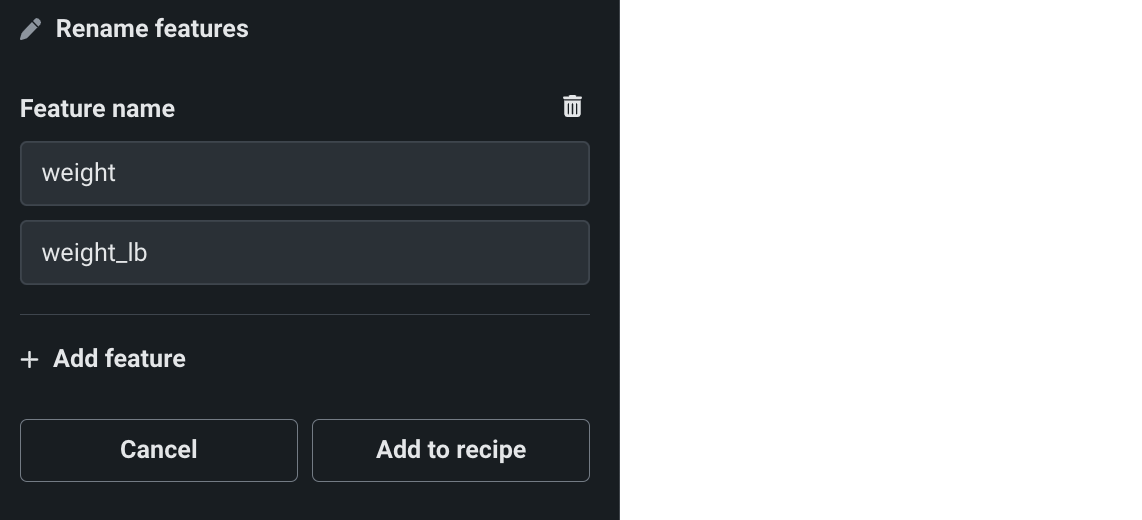

Rename features

Use the Rename features operation to rename one or more features in the dataset.

To rename features:

-

Click Rename features in the right panel.

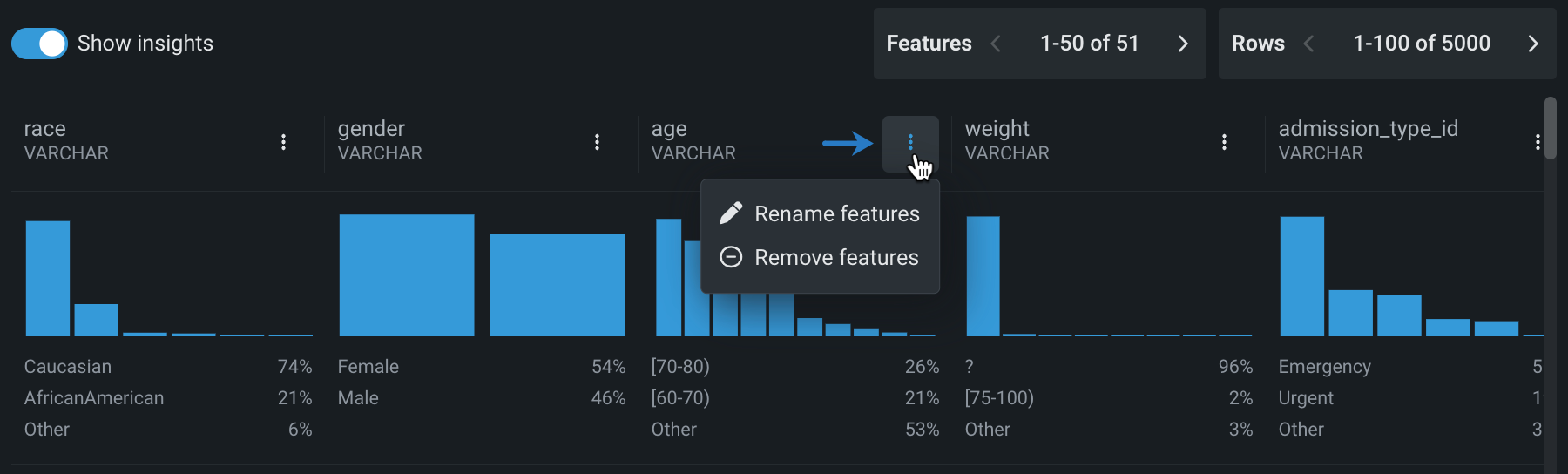

Rename specific features from the live sample

Alternatively, you can click the Actions menu next to the feature you want to rename. This opens the operation parameters in the right panel with the feature field already filled in.

-

Under Feature name, click the dropdown and choose the feature you want to rename. Then, enter the new feature name in the second field.

-

(Optional) Click Add feature to rename additional features.

-

Click Add to recipe.

Remove features

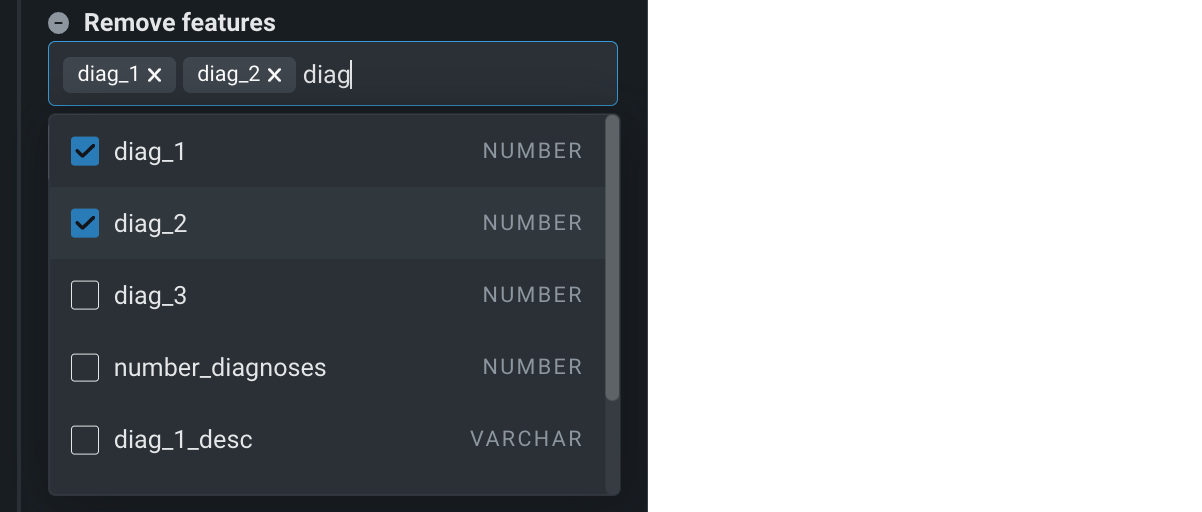

Use the Remove features operation to remove features from the dataset.

To remove features:

- Click Remove features in the right panel.

  Remove specific features from the live sample

  Alternatively, you can click the Actions menu next to the feature you want to remove. This opens the operation parameters in the right panel with the feature field already filled in.

- Under Feature name, click the dropdown and either start typing the feature name or scroll through the list to select the feature(s) you want to remove. Click outside of the dropdown when you're done selecting features.

- Click Add to recipe.

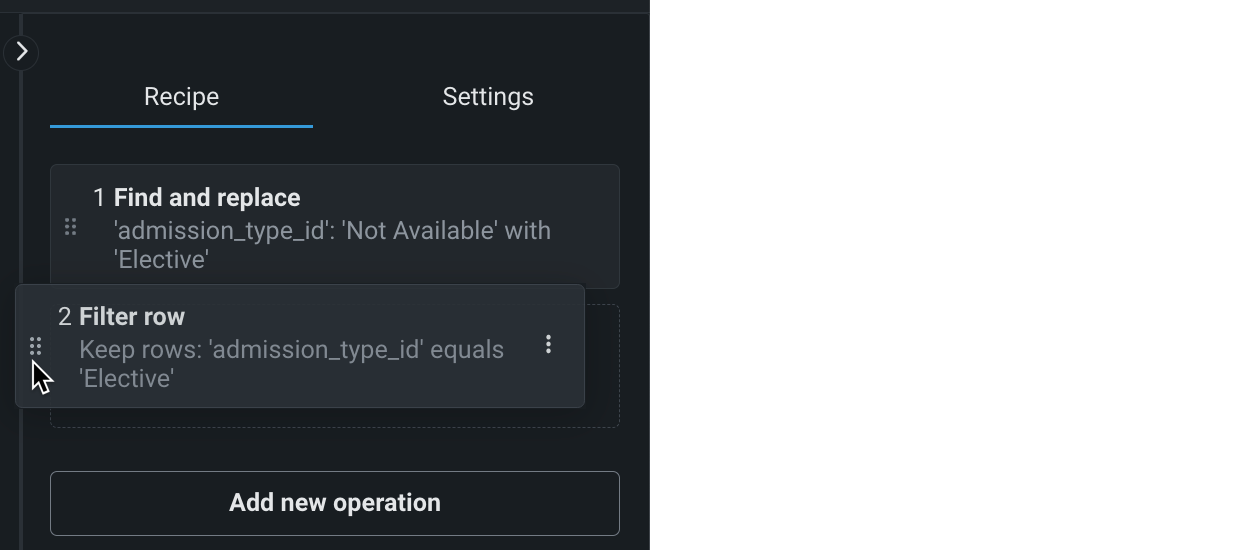

Reorder operations

All operations in a wrangling recipe are applied sequentially, so the order in which they appear affects the output dataset.

To move an operation to a new location, click and hold the operation you want to move, and then drag it to a new position.

The live sample updates to reflect the new order.
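A small Python sketch shows why order matters. The two operations and the `run_recipe` helper are hypothetical stand-ins for a find-and-replace step and a de-duplicate step applied to a single column.

```python
def replace_ny(values):
    """Find and replace: expand the abbreviation "NY"."""
    return ["New York" if v == "NY" else v for v in values]


def deduplicate(values):
    """De-duplicate: keep the first occurrence of each value."""
    return list(dict.fromkeys(values))


def run_recipe(values, operations):
    """Apply each operation to the output of the previous one."""
    for operation in operations:
        values = operation(values)
    return values


cities = ["NY", "New York", "NY"]
replace_first = run_recipe(cities, [replace_ny, deduplicate])
dedupe_first = run_recipe(cities, [deduplicate, replace_ny])
```

Replacing first yields a single `"New York"` row, while de-duplicating first leaves two identical rows, because the values only become duplicates after the replacement runs.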

Next steps

From here, you can:

Read more

To learn more about the topics discussed on this page, see: